It's all about the Process

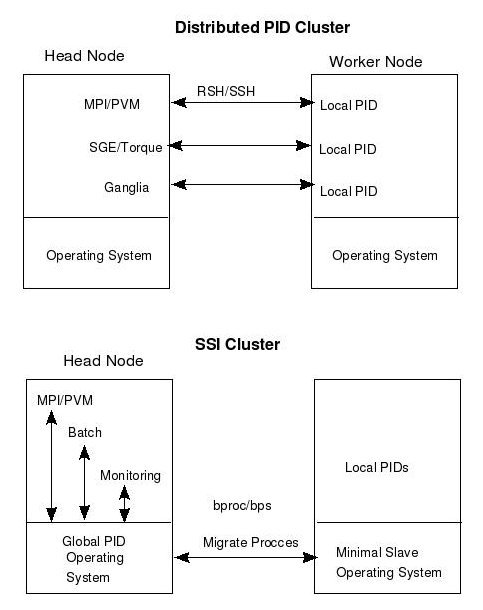

There are two predominant software approaches to Linux clustering. The first is the "Classic Beowulf," where each system has a copy of a complete operating system (more or less). In this approach, parallel processes are started remotely on worker nodes. The second method is based on a single system image (SSI) concept, where worker nodes have a minimal amount of software installed and processes are migrated from the host node. There is a big difference.

Although these methods sound similar, there is a subtle but important difference between them: how processes are handled on remote nodes. Recall that on any *NIX box there is an identification number associated with every process (program). This number is often called a "PID," for process identification. In a cluster of independent machines, each node is in charge of its own PIDs, or PID space. If you have a cluster of 64 nodes, each node has its own independent PID space. When a parallel program is run on the cluster, 64 independent processes are started in 64 independent PID spaces. This method can create the following scenarios for the administrator and end user.

Blind Head node - The head node is totally blind to the PID space of the worker nodes and has no direct OS control over these PIDs. These processes do not show up in the head node process table and thus are absent from the top display on the head node. Only those processes that are part of the parallel job and running on a given node are in that node's process table. This situation requires some sort of PID management for the worker nodes. PID management includes things like starting and stopping processes, collecting process data, and managing permissions. Often this management is done using a combination of tools that includes a batch scheduler like Sun Grid Engine or Torque and a process monitoring tool like Ganglia.
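To make the blind head node concrete, here is a minimal Python sketch of a distributed PID space. It is a toy model, not real cluster software: the `Node` class, the node names, the starting PID of 1000, and the `./my_mpi_app` command are all assumptions made up for illustration.

```python
class Node:
    """Toy model of a cluster node that owns a private PID space."""

    def __init__(self, name, first_pid=1000):
        self.name = name
        self.process_table = {}     # PID -> command, visible only on this node
        self._next_pid = first_pid  # hypothetical starting PID

    def spawn(self, command):
        """Start a process locally and record it in this node's table only."""
        pid = self._next_pid
        self._next_pid += 1
        self.process_table[pid] = command
        return pid


# Hypothetical cluster: one head node and three workers.
head = Node("head")
workers = [Node(f"node{i:02d}") for i in range(1, 4)]

# One parallel job becomes one process per worker, each in its own PID space.
job = [(w.name, w.spawn("./my_mpi_app")) for w in workers]

print(job)                 # the same PID number appears on every worker
print(head.process_table)  # empty: the head node never sees the job's PIDs
```

Because the PID alone is ambiguous across nodes, any management layer in this model would have to track (node, PID) pairs, which is roughly the bookkeeping that schedulers and monitoring tools take on.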

Spawning Overhead - The other issue facing a distributed PID space is the overhead that may be needed to start processes. Virtually all remote processes are started using the rsh or ssh protocols. Executing a parallel program on a large number of nodes can incur long startup delays and also push the protocols beyond their designed use. For instance, rsh was not designed to spawn 512 jobs from a single host. These issues are usually addressed by starting remote daemons on worker nodes that handle the initialization one time and then allow for fast remote process execution. This method has been employed by LAM/MPI and PVM as part of their initial design. Recently, MPICH has implemented mpd (multi-purpose daemon), which provides capabilities similar to those of other remote execution daemons. While the daemons solve some of the problems associated with global PID management, they are often only used for the life of the parallel job. Therefore, the daemons must be spawned before the job's processes, and that step still falls back on rsh/ssh for remote startup.
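The trade-off can be put into rough numbers. The sketch below is back-of-the-envelope arithmetic only: it assumes the head node opens connections one after another, and the per-connection costs (500 ms for an rsh/ssh start, 10 ms for a daemon-mediated start) are invented for illustration, not measurements.

```python
def direct_startup_ms(nodes, jobs, t_remote_shell_ms):
    """Every job opens one rsh/ssh connection per node, one after another."""
    return jobs * nodes * t_remote_shell_ms


def daemon_startup_ms(nodes, jobs, t_remote_shell_ms, t_daemon_ms):
    """Daemons are started once over rsh/ssh; each job then uses the fast path."""
    return nodes * t_remote_shell_ms + jobs * nodes * t_daemon_ms


NODES = 512            # cluster size used in the text
T_REMOTE_SHELL_MS = 500  # assumed cost of one rsh/ssh start
T_DAEMON_MS = 10         # assumed cost of one start through a running daemon

for jobs in (1, 10):
    direct = direct_startup_ms(NODES, jobs, T_REMOTE_SHELL_MS)
    daemon = daemon_startup_ms(NODES, jobs, T_REMOTE_SHELL_MS, T_DAEMON_MS)
    print(f"{jobs:2d} job(s): direct {direct} ms, with daemons {daemon} ms")
```

Under these assumptions, daemons that live for only a single job save nothing, because the one-time rsh/ssh cost is paid anyway. The benefit appears only when the daemons outlive the job and the startup cost is spread across many runs.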

Version Skew - Including a full OS distribution on each node (meaning it has a hard disk drive) tends to introduce further administration problems. First, you can assume that if there is disk space, it will get used. Indeed, considering that "standard" IDE disk drives are now approaching 100 Gigabytes and that a fat Linux install may take 5-6 Gigabytes, clusters will have a large amount of "available storage" to tempt users. This extra storage can be harnessed by things like PVFS, but users and administrators will be tempted to sprinkle scratch space throughout the cluster. In addition, keeping the distribution exactly the same on each node is difficult. Having to constantly re-image (sending 5 Gigabyte OS images to every node in the cluster every time there is a change or upgrade) becomes cumbersome. And you can only hope that a user did not have anything important stored in the scratch space.

A distributed PID space cluster is certainly a valid way to build a cluster, but it does add overhead to cluster/process management. Some of these issues, particularly version skew, have been resolved recently by newer methods such as Warewulf (RAM Disk Nodes) or oneSIS (NFS Based Nodes).

Take my PID, please

To address the disadvantages of a distributed PID space, several single system image (SSI) technologies have been developed. In all SSI cases, the Linux kernel requires modification to support remote processes or, more specifically, to make a remote process look like it is executing on the head node. The most notable of these efforts are Bproc and openMosix. Each has a different design motivation, but the main method is to start a process on the head node and then migrate it to another node. A process stub, or proxy process, remains on the head node and communicates with the remote process in real time, thus allowing true process control from the head node. Therefore, the remote process is listed in the host node process table and can be treated just like any other local process. Indeed, signals can be sent to and received from the process in the normal fashion, and the process will show up in top.

The difference between Bproc and openMosix is how processes are migrated. With Bproc, the migration is usually "user directed," while with openMosix the processes are continuously and automatically migrated to the least loaded node. In terms of HPC, Bproc finds the most utility because applications prefer exclusive use of nodes, and excessive migration would degrade performance.
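The stub idea can be sketched as a toy Python model. This is not how Bproc or openMosix is implemented (both work inside the kernel); the `HeadNode`, `Stub`, and `RemoteProcess` classes, the `migrate` and `kill` method names, and the starting PID are all hypothetical and exist only to show why a migrated process stays visible and controllable from the head node.

```python
import signal


class RemoteProcess:
    """Toy stand-in for a process that now runs on a worker node."""

    def __init__(self, node, command):
        self.node = node
        self.command = command
        self.received = []  # signals that arrived on the worker


class Stub:
    """Proxy left behind on the head node for a migrated process."""

    def __init__(self, remote):
        self.remote = remote

    def deliver(self, sig):
        # Forward the signal to wherever the real process is running.
        self.remote.received.append(sig)


class HeadNode:
    """Head node whose single process table covers the whole cluster."""

    def __init__(self, first_pid=1000):
        self.process_table = {}     # PID -> Stub
        self._next_pid = first_pid  # hypothetical starting PID

    def migrate(self, command, node):
        """Start a process here, move it to `node`, and keep a stub locally."""
        pid = self._next_pid
        self._next_pid += 1
        self.process_table[pid] = Stub(RemoteProcess(node, command))
        return pid

    def kill(self, pid, sig):
        """Signal a process by head node PID, wherever it actually runs."""
        self.process_table[pid].deliver(sig)


head = HeadNode()
pids = [head.migrate("./my_mpi_app", f"node{i:02d}") for i in range(1, 4)]

head.kill(pids[1], signal.SIGTERM)  # control the process on node02 from the head

for pid, stub in sorted(head.process_table.items()):
    print(pid, stub.remote.node, stub.remote.received)
```

In this model every remote process has a unique PID in one table, so a tool that only understands local PIDs (as top or kill do) would need no cluster-specific knowledge.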

The Bproc mechanism is the heart of the Scyld Beowulf distribution, where the entire cluster looks like a single system running on the head node. The use of Bproc also eliminates the need for a full Linux distribution image on the worker nodes. In contrast to a distributed PID space model, where a Linux distribution of some form (either local or NFS) must be available on each node, the Scyld distribution only requires a kernel and shared libraries (cached in memory) on the worker nodes. While the lack of a full Linux distribution is considered a drawback by some, the Bproc approach provides the following:

- There is one version of the OS on the head node and no need to keep the nodes up to date.

- There is no need for user accounts on the remote nodes.

- There is no need for hard drives on nodes.

Summary

Depending on users' needs, there does not seem to be a clear single "best way" to implement a software environment on every cluster. (That is as it should be, because clusters are about choice.) In reality, a cluster is a pile of separate machines, and managing the PIDs is a difficult task no matter how it is implemented. As a summary, Figure One shows some of the differences between the two approaches. From an administrator's perspective, the advantages and disadvantages are presented below. Keep in mind that cluster software is under constant change, and one person's advantage may be another's disadvantage.