It is what makes a cluster "a cluster"

A question often asked by both cluster newbies and experienced cluster users is, "What kind of interconnects are available?" The question is important for two reasons. First, the price of interconnects can range from as little as $32 per node to as much as $3,500 per node, and the choice of interconnect can have a huge impact on the performance and scalability of your codes. Second, many users are not aware of all the possibilities. People new to clusters may not know the interconnect options, and experienced people sometimes choose an interconnect and become fixated on it, ignoring all of the alternatives. The interconnect is an important choice, and ultimately it depends on your code, requirements, and budget.

In this review we provide an overview of the available technologies and conclude with two tables summarizing key features and pricing for various size clusters.

Introduction

This article focuses on interconnects that aren't tied to vendor-specific node hardware but can work in a variety of cluster nodes. While determining which interconnect to use is beyond the scope of this article, I can present what is available and make some basic comparisons. I'll present information that I have obtained from the vendors' websites, from posts to the Beowulf mailing list, from the vendors themselves, and from various other places. I won't make any judgments or draw conclusions about the various options because, simply, I can't. The choice of an interconnect depends on your situation and there is no universal solution. I also intend to stay "vendor neutral" but will make observations where appropriate. Finally, I have created a table that presents various performance aspects of the interconnects. There is also a table with list prices for 8 nodes, 24 nodes, and 128 nodes to give you an idea of costs.

Sidebar One: Some Background and History

This article is an update to an article that Doug and I wrote for ClusterWorld Magazine. The article appeared in April of 2005, but was written in January 2005. Huge changes in interconnect technology, such as the rise of 10 Gigabit Ethernet and Infinipath appearing in production machines, warranted an update to the original article. Moreover, the original article had a table with some performance data and pricing data for various sized clusters (interconnect pricing only). As you all know, prices can and do change very rapidly, so I wanted to provide updated comparisons. All information was taken from published public information and discussions with vendors. We have tried to be as fair as possible when presenting these technologies. All the vendors who make high performance interconnect hardware were given a chance to review the article and provide feedback. We expect there may be a need for further discussion as well. We invite those who wish to make comments to register and add a comment at the end of the article. If anyone, including vendors, would like to write a longer article about their experiences with a particular interconnect technology, we welcome your input. Please contact the Head Monkey. Finally, we urge you to contact the vendors we mention and discuss your specific needs. This review is best used as a road map to help get you started in the right direction.

I'm going to examine the following interconnects:

- Gigabit Ethernet

- Gigabit Ethernet with Level 5 NICs

- 10 Gigabit Ethernet

- Infiniband

- Infinipath

- Myrinet

- QsNet (Quadrics)

- SCI (Dolphin)

In addition, I'll talk about some of the technologies that the interconnects use to improve performance, namely TCP bypass, TCP Offload Engine (TOE), kernel bypass (OS bypass), RDMA (Remote Direct Memory Access), zero-copy networking, and interrupt mitigation. I'll also discuss some alternatives to plain Gigabit Ethernet such as

Gigabit Ethernet

Ethernet has an enduring history. It was developed principally by Bob Metcalfe and David Boggs at Xerox Palo Alto Research Center in 1973 as a new bus topology LAN (Local Area Network). It was designed to be a set of protocols at the datalink and physical layers. In 1976, carrier sensing was added. A 10 Mbps (megabits per second) standard was promoted by DEC, Intel, and Xerox in the 1980s. Novell adopted it and used it in their widely successful NetWare. It finally became an IEEE standard in 1983 (802.3, 10Base5).

The initial speed of Ethernet was only 10 Mbps. As more systems were added to networks, a faster network connection was needed. Development of Fast Ethernet (802.3u), which runs at 100 Mbps or 10 times faster than 10 Mbps, was started in 1992 and was adopted as an IEEE standard in 1995. It is really just the same 10 Mbps standard but run at a higher speed.

With the explosion of the Internet and with the need to move larger and larger amounts of data, even Fast Ethernet was not going to be fast enough. Development of Gigabit Ethernet (GigE), which runs at 1 Gbps (gigabits per second) or 10 times faster than Fast Ethernet, was started, and it was adopted as an IEEE standard (802.3z) in 1998. GigE uses the same protocol as 10 Mbps Ethernet and Fast Ethernet. This makes it easy to upgrade networks without having to upgrade every piece of software. Plus, GigE can use the same category 5 copper cables that are used in Fast Ethernet (if you are buying new cables for GigE, choose cat 5e or better).

Currently, there are a large number of manufacturers of GigE Network Interface Cards (NICs) on the market. Most of the GigE NICs are supported at some level in the Linux kernel. Many NIC manufacturers use the same basic GigE chipsets (e.g. Intel, Broadcom, Realtek, National Semiconductor), sometimes slightly modifying how they are implemented. This makes it difficult to write "open" drivers without vendor support. There are some good articles available on the web that describe the lack of vendor support for GigE NICs, especially from Nvidia (although Nvidia has some closed drivers that allow for the use of the Nvidia audio and network devices). In recent years, most major vendors have released source code drivers for their GigE chipsets or at least provided documentation on the chipsets. The Linux kernel has driver support for most common types of GigE chipsets. There are also Fast Ethernet drivers, original 10 Mbps Ethernet drivers, and, fairly recently, 10 Gigabit Ethernet (10 GigE) drivers.

Many of these Ethernet drivers have parameters that can be adjusted, and later in this article I will mention some of them. There are any number of reasons to adjust these parameters, and you will find that cluster users often experiment with driver settings to determine the best combination for their cluster and workload.

One of the problems with GigE is that it is based on the standard Ethernet MTU (Maximum Transmission Unit) size of 1500 bytes. Because each Ethernet packet must be serviced by the processor (as an interrupt), moving data at Gigabit speeds can tax even the fastest systems (more than 80,000 interrupts a second). One way to solve this problem is to use "Jumbo Frames," which simply means a larger MTU. Most GigE NICs support MTU sizes up to 9000 bytes (reducing the number of interrupts by up to a factor of 6). If you want to use Jumbo Frames, make sure your switch supports these sizes (see the subsection on GigE switches entitled "Switching the Ether").
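The interrupt figures above follow from simple arithmetic. Here is a rough sketch in Python, assuming one interrupt per full-size frame on a saturated link and ignoring header and preamble overhead (the function name is just for illustration):

```python
# Back-of-the-envelope frame (and worst-case interrupt) rate for a saturated link.
# Assumes one interrupt per full-size frame and ignores header/preamble overhead.

LINK_BITS_PER_SEC = 1_000_000_000  # Gigabit Ethernet line rate


def frames_per_second(mtu_bytes, link_bps=LINK_BITS_PER_SEC):
    """Full-size frames per second needed to fill the link."""
    return link_bps / (mtu_bytes * 8)


standard = frames_per_second(1500)  # standard MTU
jumbo = frames_per_second(9000)     # Jumbo Frames

print(f"MTU 1500: {standard:,.0f} frames/s")
print(f"MTU 9000: {jumbo:,.0f} frames/s")
print(f"Reduction factor: {standard / jumbo:.1f}")
```

Real traffic is rarely made up of only full-size frames, so treat these numbers as bounds rather than measurements.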

GigE NICs come in various sizes and flavors over a range of prices. Sixty-four-bit PCI "server" GigE NICs are still slightly expensive ("desktop" NICs, which are essentially built from the same components, are very inexpensive, but are limited by the 32-bit PCI bus). For server class motherboards as well as normal desktop motherboards, you will find GigE built into the motherboard at a lower cost. If your motherboard doesn't have built-in GigE, then regular 32-bit PCI NICs start at about $3-$4, with decent ones, such as the DLINK DFE_530TX, starting at about $13 and the popular Intel PCI GigE NIC starting at about $30. Sixty-four-bit GigE NICs start at about $33, but PCI-X NICs are more expensive. PCI-X GigE NICs with copper connectors start at about $100 and PCI-X GigE NICs with fiber optic connectors start at about $260. (Note: These prices were determined at the time the article was posted. They will vary.) Also, be aware that the really low cost NICs may not perform as expected. Some basic testing will help qualify NICs.

GigE is a switched network. A typical GigE network consists of a number of nodes connected to a switch or set of switches. You can also connect the switches to each other to create a tiered network. Other network topologies such as rings, 2D and 3D torus, meshes, etc. can also be constructed without switches. However, some of these networks may require multiple NICs per node and can be complicated to configure, but they do eliminate the need for switches.

While it is beyond the scope of this article, you can also use new network topologies such as Flat Neighborhood Networks (FNNs) or Sparse Flat Neighborhood Networks (SFNNs) that offer potential benefits for particular applications. There are on-line tools to help you design FNNs. As shown in some of the literature, these types of networks can offer very good price/performance ratios for certain codes.

Pushing GigE

Despite the rise in Ethernet speeds, it is possible for a single 32-bit PCI based NIC to saturate the entire PCI bus. Consequently, it's easy to see that the CPU can spend a big part of its time handling interrupt requests and processing network traffic.
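To see why one NIC can fill the bus, compare theoretical peak rates. This is only a sketch: the 33 MHz bus clock is my assumption (a common value for 32-bit PCI slots), and real-world PCI throughput is lower than the peak.

```python
# Rough comparison of peak 32-bit PCI bandwidth with Gigabit Ethernet line rate.
# The 33 MHz clock is an assumption; these are theoretical peaks, not measurements.

PCI_WIDTH_BYTES = 4                       # 32-bit bus
PCI_CLOCK_HZ = 33_000_000                 # assumed 33 MHz bus clock
GIGE_BYTES_PER_SEC = 1_000_000_000 / 8    # 1 Gbps in one direction

pci_peak = PCI_WIDTH_BYTES * PCI_CLOCK_HZ            # bytes per second
one_way = GIGE_BYTES_PER_SEC / pci_peak              # send OR receive at line rate
full_duplex = 2 * GIGE_BYTES_PER_SEC / pci_peak      # send AND receive at line rate

print(f"PCI peak: {pci_peak / 1e6:.0f} MB/s")
print(f"GigE one direction uses {one_way:.0%} of the bus")
print(f"GigE full duplex would need {full_duplex:.0%} of the bus")
```

Keep in mind that the PCI bus is shared, so anything else on the bus competes with the NIC for that bandwidth.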

The situation has led vendors to develop TOE (TCP Off-Load Engine) and RDMA (Remote Direct Memory Access) NICs for GigE. The goal of these cards is to reduce latency and to reduce the load on the CPU when handling network data. Several companies started marketing these types of NICs, but really only one remains, Level 5 Networks, and they don't use TOE or RDMA.

Level 5 Networks makes a 32/64-bit PCI GigE NIC that is not an RDMA NIC but uses a new technology called Etherfabric. In a conventional network, a user application converts the sockets or MPI calls into system calls that use the kernel TCP/IP stack. With Etherfabric, each application or thread gets its own user space TCP/IP stack. The processed data is sent directly to the virtual hardware interface that was assigned to the stack and then sent out over the NIC. This process eliminates context switching (explained below) to improve latency.

The interesting thing about the Level 5 NIC and Etherfabric is that you can use existing TCP/IP networks and any routers because it doesn't use a different packet protocol. In addition, the NIC appears as a conventional GigE NIC so it can send and receive conventional traffic as well as Etherfabric traffic. It also means that you don't have to use an Etherfabric-specific MPI implementation. Consequently, you don't have to recompile and can use your binaries immediately. This overcomes an Achilles heel of past high-speed GigE NICs because you can use all of your ISV applications.

GAMMA, or Genoa Active Message MAchine, is a project maintained by Giuseppe Ciaccio of Dipartimento di Informatica e Scienze dell'Informazione in Italy. It is a project to develop an Ethernet kernel bypass capability for existing Ethernet NICs as well as its own packet protocol (it doesn't use TCP packets). Since it bypasses the Linux kernel and doesn't use TCP packets, it has a much lower latency than standard Ethernet.

Right now, GAMMA only supports the Intel GigE NIC and the Linux 2.6.12 kernel (support for Broadcom chipsets (tg3) is said to be under development). Also, since it uses something other than IP, the packets are not routable over a WAN (not likely to be a problem for clusters, but could be a problem for Grids). GAMMA can share the interface with TCP traffic, but it takes exclusive control of the NIC while it is transferring data. That is, all TCP/IP traffic is stopped. Normally this is not a problem as most clusters have multiple networks available to them.

There is also a port of MPICH1 to run using GAMMA. MPI/GAMMA is based on MPICH 1.1.2 and supports SPMD/MIMD parallel processing and is compatible with standard network protocols and services.

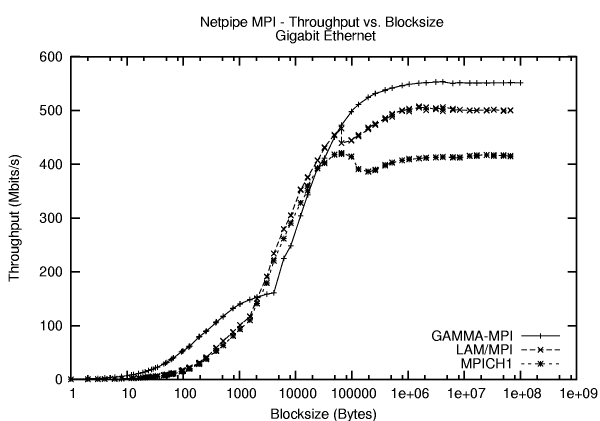

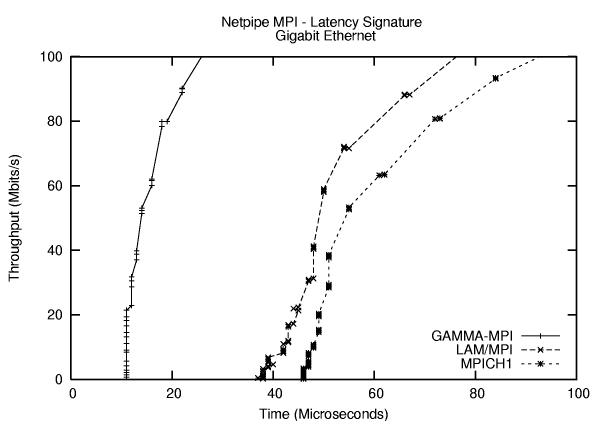

Figure One is a plot of the throughput of MPI/GAMMA compared to LAM/MPI and MPICH1. Figure Two is a comparison of the latencies for the same three MPIs (Intel Pro/1000 MT, 32-bit PCI).

Notice that the latency is only 9.54 microseconds, and this includes the latency of the switch! This is probably the lowest latency I've ever seen for GigE. The throughput for MPI/GAMMA is very good as well, peaking at about 72 MByte/s or 560 Mbits/s. Not too shabby for an inexpensive Intel 32-bit PCI GigE card.

In addition to GAMMA, there are other network solutions that don't use TCP. For example Scali MPI Connect has a module called "Direct Ethernet" or DET that runs over any communication interface that has a MAC address. In other words, it uses a different protocol than TCP and by-passes the kernel (sometimes called TCP-bypass). Moreover, Scali says that using DET allows you to aggregate multiple GigE networks together. Scali says that you can use independent inexpensive unmanaged layer 2 switches for this aggregation.

Another example of an MPI implementation that doesn't use TCP as the packet protocol is Parastation from Partec. It currently is up to Version 4 and claims an MPI latency of about 11 microseconds. It is based on MPICH 1.2.5.

I Feel the Need for Speed

I have been using various terms such as TCP-bypass, without adequately explaining them. I want to take some time to explain some of the techniques that have been used to improve the performance of interconnects. In particular, I'm going to cover:

- TCP-Bypass

- TCP Offload Engine

- Kernel Bypass (OS Bypass)

- RDMA

- Zero-copy Networking

- Interrupt Mitigation

TCP Bypass

I previously mentioned TCP-bypass when I discussed Scali MPI Connect. TCP-bypass really means that you are using a different packet protocol than TCP or UDP. The application uses its own packet definition, builds the packets on the sending side, and sends them directly over the link layer, where they are decoded and the data processed. There are a number of products that do this, such as Scali MPI Connect, Parastation, HyperSCSI (iSCSI-like storage), and Coraid ATA-over-Ethernet. The term "TCP-bypass" is used because you are "bypassing" the TCP packet protocol and using a different protocol right on top of the link layer.

The idea behind using a different protocol is that you reduce the overhead of having to process TCP packets. Theoretically this allows you to process data packets faster (less overhead) and fit more data into a given packet size (better data bandwidth). So you can increase the bandwidth and reduce the latency.

However, there are some gotchas with TCP-bypass. The code has to perform all of the checks that the TCP protocol performs, particularly the retransmission of dropped packets. In addition, because the packets do not carry IP headers, they cannot be routed beyond the local Ethernet network. This is not likely to be a problem for clusters that reside on a single network, but Grid applications will very likely have this problem because they need to route packets to remote systems.
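To make the idea concrete, here is a minimal Python sketch of a message packed directly behind an Ethernet header, with no IP or TCP header in between. The 6-byte message header (sequence number plus length) is purely hypothetical and is not the format used by any of the products mentioned above. The EtherType 0x88B5 is one of the values reserved by the IEEE for local experimental use. The sketch only builds and parses the bytes; it does not send anything on the wire.

```python
import struct

# EtherType 0x88B5 is reserved by the IEEE for local experimental use.
ETHERTYPE_EXPERIMENTAL = 0x88B5

ETH_HEADER = struct.Struct("!6s6sH")  # destination MAC, source MAC, EtherType
MSG_HEADER = struct.Struct("!IH")     # hypothetical: sequence number, payload length


def build_frame(dst_mac, src_mac, seq, payload):
    """Pack a payload directly behind the Ethernet header (no IP, no TCP)."""
    return (ETH_HEADER.pack(dst_mac, src_mac, ETHERTYPE_EXPERIMENTAL)
            + MSG_HEADER.pack(seq, len(payload))
            + payload)


def parse_frame(frame):
    """Return (seq, payload), or None if the frame uses another EtherType."""
    _dst, _src, ethertype = ETH_HEADER.unpack_from(frame, 0)
    if ethertype != ETHERTYPE_EXPERIMENTAL:
        return None
    seq, length = MSG_HEADER.unpack_from(frame, ETH_HEADER.size)
    start = ETH_HEADER.size + MSG_HEADER.size
    return seq, frame[start:start + length]


# Locally administered MAC addresses, made up for the example.
dst = bytes.fromhex("020000000002")
src = bytes.fromhex("020000000001")

frame = build_frame(dst, src, 7, b"hello")
print(parse_frame(frame))
print(f"{MSG_HEADER.size} bytes of custom header vs. 40 bytes for minimal IPv4 + TCP headers")
```

The sequence number is there because, as noted above, the application now owns reliability: it has to detect and retransmit dropped frames itself.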

TCP Offload Engine

TCP Offload Engines (TOE) are a somewhat controversial concept in the Linux world. Normally, the CPU processes the TCP packets, which can require extreme processing power. For example, it has been reported that a single GigE connection to a node can saturate a single 2.4 GHz Pentium IV processor. The problem of processing TCP packets is worse for small packets. To process the packets, the CPU has to be interrupted from what it's doing to process the packet. Consequently, if there are a number of small packets, the CPU can end up processing just the packets and not doing any computing.

The TOE was developed to remove the TCP packet processing from the CPU and put it on a dedicated processor. In most cases, the TOE is put on a NIC. It handles the TCP packet processing and then passes the data to the kernel, most likely over the PCI bus. For small data packets, the PCI bus is not very efficient. Consequently, the TOE can collect from a series of small packets and then send a larger combined packet across the PCI bus. This design increases latency, but may reduce the impact on the node processing. This feature is more likely to be appropriate for enterprise computing than HPC.

Kernel Bypass

Kernel Bypass, also called OS bypass, is a concept to improve the network performance, by going "around" the kernel or OS. Hence the term, "bypass." In a typical system, the kernel decodes the network packet, most likely TCP, and passes the data from the kernel space to user space by copying it. This process means the user space process context data must be saved and the kernel context data must be loaded. This step of saving the user process information and then loading the kernel process information is known as a context switch. According to this article, application context switching constitutes about 40% of the network overhead. So, it would seem that to improve bandwidth and latency of an interconnect, it would be good to eliminate the context switching.

In kernel bypass, user space applications use an I/O library that has been modified to communicate directly with the network hardware from user space. This process takes the kernel out of the path of communication between the user space process and the I/O subsystem that handles the network communication. This change eliminates the context switching and potentially the copy from kernel space to user space (it depends upon how the I/O library is designed). However, some people argue that the overhead in the kernel associated with a context switch has shrunk, and that, combined with faster processors, the impact of a context switch has lessened.

RDMA

Remote Direct Memory Access (RDMA) is a concept that allows NICs to place data directly into the memory of another system. The NICs have to be RDMA enabled on both the send and receive ends of the communication. RDMA is useful for clusters because it allows the CPU to continue to compute while the RDMA enabled NICs are passing data. This can help improve compute/communication overlap, which helps improve code scalability.

The process begins when the sending RDMA NIC establishes a connection with the receiving RDMA NIC. Then the data is transferred from the sending NIC to the receiving NIC. The receiving NIC then copies the data directly to the application memory, bypassing the data buffers in the OS. RDMA is most commonly used in Infiniband implementations, but other high-speed interconnects use it as well. Recently, 10 GigE NICs started using RDMA for TCP traffic to improve performance.

There have been some discussions lately that RDMA may have outlived its usefulness for MPI codes. The argument is that most messages in HPC codes are small to medium in size and that using memory copies to move the data from kernel space to user space is faster than having an RDMA NIC do it. Reductions in the time the kernel takes to do the copy and improvements in processor speeds are two of the reasons that a memory copy could be faster than RDMA.

There is an RDMA Consortium that helps organize and promote RDMA efforts. They develop specifications and standards for RDMA implementations so the various NICs can communicate with each other. Their recent efforts have resulted in the development of a set of RDMA specifications for TCP/IP over Ethernet.

Zero-Copy Networking

Zero-copy networking is a technique where the CPU does not perform the data copy from kernel space to user space (the application memory). This trick can be done for both send operations and receive operations. It can be accomplished in a number of ways, including DMA (Direct Memory Access) copying or memory mapping on a system equipped with an MMU (Memory Management Unit). Zero-copy networking has been in the Linux kernel for some time, since the 2.4 series. Here is an article that discusses, at a high level, how the developers went about accomplishing it. It also points out that zero-copy networking requires extra memory and a fast system to perform the operations. If you would like more information, this article can give you even more detail.
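One place where ordinary applications can see the send side of this idea is the sendfile mechanism. The sketch below is an illustration only, not what the articles referenced above describe. It uses Python's `socket.sendfile()`, which relies on `os.sendfile()` where the platform provides it, so the file data goes to the socket without first being copied into a buffer in the application. A local socket pair stands in for a real network connection, and the payload size is an arbitrary choice.

```python
import socket
import tempfile

PAYLOAD = b"x" * 4096  # small enough to fit in the socket buffer

# A connected pair of stream sockets stands in for a real network connection.
sender, receiver = socket.socketpair()

with tempfile.TemporaryFile() as f:
    f.write(PAYLOAD)
    f.flush()
    f.seek(0)
    # socket.sendfile() uses os.sendfile() where available, so the kernel moves
    # the file data to the socket without a copy through a user space buffer.
    sent = sender.sendfile(f)

sender.close()

# Read everything back on the other end to confirm the transfer.
received = bytearray()
while len(received) < sent:
    chunk = receiver.recv(65536)
    if not chunk:
        break
    received += chunk
receiver.close()

print(sent, bytes(received) == PAYLOAD)
```

Note that the receiving side here still does a normal `recv()`, which copies data into user space. Avoiding that copy as well is the harder half of the problem.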

With every new idea there always seem to be differing opinions. Here is an argument that zero-copy may not be worth the trouble. Rather, the authors argue that a Network Processor (a kind of programmable, intelligent NIC) would be a better idea.

Interrupt Mitigation

Interrupt Mitigation, also called Interrupt Coalescence, is another trick to reduce the load on the CPU resulting from interrupts to process packets. As mentioned earlier, every time a packet gets sent to the NIC, the kernel must be interrupted to at least look at the packet header to determine if the data is destined for that NIC, and if it is, process the data. Consequently, it is very simple to create a Denial-of-Service (DoS) attack by flooding the network with a huge number of packets, forcing the CPU to process every one of them. Interrupt Mitigation is a driver level implementation that collects packets for a certain amount of time or up to a certain total size, and then interrupts the CPU for processing. The idea is that this reduces the overall load on the CPU and allows it to at least do some computational work rather than just decode network packets. However, this can increase latency by holding data before allowing the CPU to process it.
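The trade-off between interrupt count and latency can be illustrated with a toy model. The sketch below is a simplification and not how any particular driver works: it assumes a purely timer-based scheme in which the first packet after an idle period starts a timer, and a single interrupt fires when the timer expires. The traffic pattern (one packet every 12 microseconds, roughly full-size frames at 1 Gbps) and the window sizes are made up for illustration.

```python
def simulate(arrivals_us, window_us):
    """Return (interrupt_count, mean_added_delay_us) for a timer-based scheme.

    The first packet after an idle period starts a timer; one interrupt fires
    when the timer expires and delivers every packet that arrived meanwhile.
    A window of 0 means one interrupt per packet.
    """
    interrupts = 0
    total_delay = 0.0
    i = 0
    n = len(arrivals_us)
    while i < n:
        fire_at = arrivals_us[i] + window_us
        interrupts += 1
        # Every packet arriving up to the firing time is handled by this interrupt.
        while i < n and arrivals_us[i] <= fire_at:
            total_delay += fire_at - arrivals_us[i]
            i += 1
    return interrupts, total_delay / n


# Hypothetical traffic: one packet every 12 microseconds.
arrivals = [12 * k for k in range(1000)]

for window in (0, 50, 100):
    count, delay = simulate(arrivals, window)
    print(f"window {window:3d} us: {count:4d} interrupts, mean added delay {delay:.1f} us")
```

A longer window means fewer interrupts but more time that each packet waits before the CPU sees it, which is exactly the latency penalty described above. Real drivers typically also offer packet-count thresholds and adaptive schemes.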

Interrupt Coalescence has been implemented in Linux through NAPI (New API) rewrites of the network drivers. The rewrites include interrupt limiting capabilities on both the receive side (Rx) as well as the transmit side (Tx). Fortunately, the people who wrote the drivers allow the various parameters to be adjusted. For example, in this article, Doug Eadline (Head Monkey) experimented with various interrupt throttling options (interrupt mitigation). Using the stock settings with the driver the latency was 64 microseconds. After turning off interrupt throttling, the latency was reduced to 29 microseconds. Of course, we assume the CPU load was higher, but we didn't measure that.

There are two good articles that discuss "tweaking" GigE, TCP NIC drivers. The first describes some parameters and what they do for the drivers. The second describes the same thing but with more of a cluster focus. Both are useful for helping you understand what to tweak and why, and what the impacts are.