Assume Nothing, Test Everything

In a previous article, we learned how to test an interface with Netpipe. In cluster terms, Netpipe is a micro-benchmark because it tests only a single (but important) component of the cluster. Can we conclude that good Netpipe performance means we have a good cluster? Well, it depends: maybe we can, but then again, more testing may be needed. Netpipe tells us the maximum TCP/IP performance we can expect between two nodes. When we run parallel applications, however, there is usually more involved than raw TCP/IP performance. There is usually an MPI layer between your application and the TCP/IP layer. In addition, compilers and node hardware (e.g., dual vs. single processor nodes) have an effect, and the application itself may stress the interconnect in ways Netpipe does not measure. Tests that exercise multiple components are usually referred to as macro-benchmarks because they involve a "whole system test" rather than a single component test. Both are valuable, but neither may tell the whole story.

Layer it On

As mentioned, real applications generally use MPI or PVM. Unless you are using a kernel-bypass version of MPI (like those provided by many of the high performance interconnect vendors), your MPI is probably built on top of TCP/IP. So the next logical question is, "How does this affect my interconnect performance?" At the micro-benchmark level, answering it requires only a few tests. Netpipe can test MPI implementations as well as TCP/IP. See the sidebar on how to compile an MPI version of Netpipe. Once it is compiled, we can run Netpipe with a simple mpirun command. Background on using Netpipe can be found here.

To make the testing interesting, we compare two open source MPIs and one commercial version: MPICH, LAM/MPI, and MPI-PRO from MPI Software Technology. Please keep in mind that the results reported here are not intended to be an MPI evaluation or benchmark. Indeed, the MPI versions we are using are now "old," and we use them only to illustrate a point. We therefore advise you to use this column as a guide and run your own tests on your hardware with your application set. As we will see, simple assumptions based on single benchmarks may or may not be valid.

Using Netpipe, we can measure the latency of TCP/IP and of several MPIs. See the sidebars for a description of the test environment and for how to compile Netpipe for MPI. Table One gives the average latency of three Netpipe runs. Each MPI adds overhead to the raw TCP/IP latency, and the MPI-PRO latency is more than double that of TCP/IP.

| Layer | Latency (μs) |
|---|---|
| TCP | 25.3 |
| LAM/MPI | 32.3 |
| MPICH | 36.7 |
| MPI-PRO | 57.3 |

Table One: Netpipe MPI Latency (lower is better)

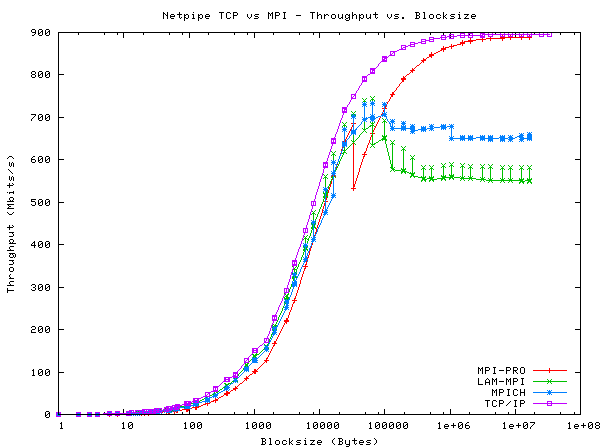

Figure One shows the throughput of each interface. Every MPI delivers less throughput than raw TCP/IP, in some cases quite a bit less. Some tuning of the various MPI versions may improve performance; even so, Figure One clearly shows how much MPI performance can differ from standard TCP/IP performance.

And the Winners Are ...

Although many discussions about cluster networking revolve around latency and throughput, the Netpipe results alone cannot tell us which MPI performs best. And as we will see, some assumptions about latency may not hold up in the macro-benchmarks.

To get a better idea of how our interconnect performs with the various MPIs, we used the NAS Parallel Benchmark suite. See the sidebar for information on compiling and running the suite. In general, the NAS suite is a good benchmark for measuring cluster performance because it provides a wide range of problems that represent typical CFD (Computational Fluid Dynamics) codes. The benchmarks are also self-checking. In addition, the suite provides two extreme cases of network sensitivity. At one end, the EP (Embarrassingly Parallel) program is virtually latency insensitive because it does a large amount of computation and very little communication. All else being equal, EP should produce roughly the same result for each MPI and is more a measure of raw CPU performance. At the other end of the spectrum is the IS (Integer Sort) program, which requires a large amount of communication and relatively little computation. IS is therefore very sensitive to interconnect latency.

Table Two presents the results of the NAS suite (Class A) run on four nodes (the -np 4 option). Each program reports Mop/s (millions of operations per second; bigger is better). The last column indicates which MPI did best on each test. Again, these numbers should not be taken as proof that one MPI is necessarily better than the others. Indeed, many of the results are very close (EP should be similar in all cases), and no MPI tuning was done. The point of the table is that there is no clear winner, and there is one surprise: MPI-PRO produced the best IS result even though Netpipe tells us it has the highest latency. So much for assumptions.

| Test | MPICH | LAM/MPI | MPI-PRO | Best |
|---|---|---|---|---|
| BT | 998 | 1000 | 1004 | MPI-PRO |
| CG | 677 | 767 | 644 | LAM/MPI |
| EP | 22 | 22 | 23 | MPI-PRO |
| FT | 608 | 724 | 716 | LAM/MPI |
| IS | 27 | 30 | 37 | MPI-PRO |
| LU | 1213 | 1237 | 1228 | LAM/MPI |
| MG | 861 | 875 | 858 | LAM/MPI |
| SP | 580 | 562 | 577 | MPICH |

Table Two: Mop/s Results for NAS Parallel Benchmark (bigger is better)

How Tall is a Building?

One might conclude that it is very difficult to say what provides the I

Sidebar One: Compiling and Running Netpipe for MPI

Running Netpipe with MPI is actually easier than running it over TCP/IP, because MPI takes care of spawning the processes on both nodes. To compile the NPmpi program, you will need to edit the Makefile. The following Makefile changes are needed for MPICH and LAM/MPI; other MPI versions can be used by making similar changes. Keep only the settings for the MPI you are building against.

Compiler:

```makefile
# For MPICH
CC = mpicc
# For LAM
CC = mpicc
```

MPI Path:

```makefile
# For MPICH, set to your MPICH location
MPI_HOME = /usr/mpi/mpich
# For LAM, set to your LAM location
MPI_HOME = /usr/mpi/lam/
# Comment out these lines
#MPI_ARCH = IRIX
#MPI_DEVICE = ch_p4
```

Libraries:

```makefile
# For MPICH (uses libmpich.a)
NPmpi: NPmpi.o MPI.o
	$(CC) $(CFLAGS) NPmpi.o MPI.o -o NPmpi -L $(MPI_HOME)/lib/$(MPI_ARCH)/$(MPI_DEVICE) \
	-lmpich $(EXTRA_LIBS)

# For LAM/MPI (uses libmpi.a)
NPmpi: NPmpi.o MPI.o
	$(CC) $(CFLAGS) NPmpi.o MPI.o -o NPmpi -L $(MPI_HOME)/lib/$(MPI_ARCH)/$(MPI_DEVICE) \
	-lmpi $(EXTRA_LIBS)
```

Running:

Results for plotting are saved to the file I

For LAM/MPI, create a lamhost file with two machine names, start the LAM daemons (see this article for information on running LAM), and run:

Sidebar Two: Test Environment

The test environment included the following hardware and software.

Note: Using Netpipe for TCP/IP and gnuplot is described in a previous article.

Sidebar Four: Resources

The NAS suite and Netpipe are also part of the Beowulf Performance Suite (BPS).


This article was originally published in ClusterWorld Magazine. It has been updated and formatted for the web. If you want to read more about HPC clusters and Linux, you may wish to visit Linux Magazine.