I feel the need for PVFS speed

In a previous column I looked at the various ways to code for PVFS1 and PVFS2. However, I never really discussed how to architect a PVFS system for your application. A correct PVFS design can improve the I/O performance of your application, ease the administrative burden, and improve flexibility and fault tolerance. In this column I'll examine how to enhance and tune performance.

When we discuss performance enhancements for PVFS, there are three issues to think about: the network to the I/O servers, the number of I/O servers, and the local disks in the I/O servers. For each of these issues there are a number of decisions to be made. Hopefully this column will start you on the road to making these decisions.

Networking to the I/O Servers

Recall that the storage nodes within PVFS are called I/O servers and the cluster nodes that utilize the storage space from the I/O servers are called clients. A critical item in PVFS is how the data is transmitted from the I/O servers to the client. A very nice feature of PVFS is that you can use the existing cluster network for PVFS traffic as well as computational traffic. This dual use can save you money because an additional network for PVFS is not needed. This scenario also works well because typically applications don't perform computations at the same time they perform I/O. Therefore, in most cases, you won't be taking away computational bandwidth to perform parallel I/O.

Given the cost of GigE (Gigabit Ethernet) today, it is quite feasible to build an inexpensive cluster network with good bandwidth and good latency for computational traffic and PVFS traffic. Many motherboards already come with built-in GigE. Additionally, GigE NICs (Network Interface Cards) with excellent performance are available at a very low cost.

If you need better I/O performance from PVFS or more bandwidth or lower latency from your network, there are two things you can do. You can upgrade the existing single network or you can add an additional network dedicated for PVFS.

Upgrading from something like GigE to Myrinet, Infiniband, Quadrics, or SCI will give you more bandwidth and lower latency for your computational traffic. In addition, since you can run TCP traffic over these networks, you will be able to utilize PVFS. Switching to a high performance network will obviously cost more. However, if your computational loads require such a network, then application I/O (PVFS) performance will also be increased.

If you have a large cluster with multiple jobs, the I/O and computation may contend for the interconnect, because different jobs perform computation and I/O at different times. In some cases the I/O servers, which may be doing computational work at the time (if you have designated the compute nodes as I/O nodes as well), will then have to use some of their CPU time, network capacity, and disk I/O performance for the PVFS traffic. This situation could negatively impact the performance of your code.

If you want to reduce the impact of PVFS traffic on the performance of your codes, you can either switch to a higher performance network as mentioned above, or you can move the PVFS traffic to its own network. This design avoids network congestion from computational traffic and allows PVFS to use any and all of the available network capability.

The basic concept in adding a second network for PVFS is to put the I/O servers on a separate network that the compute nodes (also called the clients) can see as well.

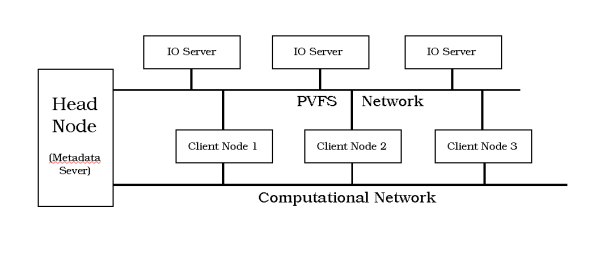

Figure One illustrates one option for configuring a cluster with separate I/O servers and clients and separate networks.

For either PVFS1 or PVFS2, I've chosen to have one head node that is also the single metadata server (PVFS2 can have multiple metadata servers). There are two separate networks that connect to the head node. One is the computational network for things like MPI traffic, and the other is for PVFS traffic. The computational nodes, also called the clients, can see both the computational network and the PVFS network. For simplification, it would be beneficial to put the I/O servers on a different subnet from the computational traffic.
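The two-network layout can be sketched as a small address check. This is only an illustration: the subnets and host addresses below are hypothetical (the column does not specify any), and the `classify` helper is an invented name, not part of PVFS.

```python
import ipaddress

# Hypothetical subnets chosen for illustration only.
COMPUTE_NET = ipaddress.ip_network("10.0.0.0/24")  # MPI / computational traffic
PVFS_NET = ipaddress.ip_network("10.0.1.0/24")     # PVFS traffic to the I/O servers


def classify(address: str) -> str:
    """Return which of the two cluster networks an address belongs to."""
    ip = ipaddress.ip_address(address)
    if ip in COMPUTE_NET:
        return "compute"
    if ip in PVFS_NET:
        return "pvfs"
    return "unknown"


# A client node has one interface on each network (hypothetical addresses).
client_interfaces = ["10.0.0.17", "10.0.1.17"]
# An I/O server only needs to be reachable on the PVFS network.
io_server_interfaces = ["10.0.1.201"]

if __name__ == "__main__":
    print("client:", [classify(a) for a in client_interfaces])
    print("I/O server:", [classify(a) for a in io_server_interfaces])
```

Keeping the two address ranges disjoint makes it easy to tell from an address alone which kind of traffic an interface carries.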

The PVFS and PVFS2 websites have information on how to configure and start the metadata server (usually the head node on the cluster). This process varies by each version. You can also find information on starting the I/O servers as well.

The above configuration is just one of many. If you want the cluster nodes to be both I/O servers and clients, you can still use a separate network for PVFS traffic. The processors will be doing double duty to support the computations and to serve PVFS data, but this modification will reduce the burden on the computational network. Both configurations, separate I/O servers and clients and combined I/O servers and clients, have cost and performance implications, which we will consider next.

Number of I/O Servers

A common architectural question is how many I/O servers to use and whether to utilize all the spare space on the compute nodes for PVFS (i.e., use the compute nodes as I/O servers). Answering this question is very difficult due to the myriad of options available. However, there have been a few studies that try to provide some insight into this issue. Three of the major studies were by Kent Milfeld et al. at the Texas Advanced Computing Center (TACC), Jens Mache et al. at Lewis & Clark College, and Monica Kashyap et al. at Dell Computers.

In 2001, Dr. Mache and his associates used PVFS1 to get high performance disk access from a PC cluster using IDE disks. Their goal was to break the 1 GB/sec I/O barrier that ASCI Red had broken at a cost of $1 million. Dr. Mache used 32 AMD Athlon 1.2 GHz nodes connected with Gigabit Ethernet (GigE). Each node had two IDE disks that were configured with software RAID-0 (striping). They experimented with varying the number of client nodes and the number of I/O servers on a small 8 node system while running a ray tracing program to compute a number of frames of a simple scene. The best configuration with dedicated nodes consisted of 2 I/O servers and 6 clients. However, when all 8 nodes were made both clients and I/O servers, the overall completion time was 1.264 times better than the 2 I/O server/6 client configuration, even though the nodes were computing as well as functioning as I/O servers.

Next, Dr. Mache and his team set up all 32 nodes as both clients and I/O servers. They then ran a code that was a variation of a read/write test program that comes with PVFS1. The code writes and then reads blocks of integer data to and from a PVFS1 file. Each node adds 96 MB (Megabytes) to the global file that has a total of (96*n) MB, where n is the number of nodes used. They found that after 25 overlapping nodes they achieved at least 1 GB/sec in read performance and after 29 nodes they achieved at least 1 GB/sec in write performance.
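To put those numbers in perspective, here is a minimal sketch of the arithmetic. It assumes 1 GB = 1024 MB and that the aggregate throughput is spread evenly across nodes; the study may have used different conventions, and the function names are made up for this example.

```python
MB_PER_NODE = 96      # each node contributes 96 MB to the global file (from the study)
MB_PER_GB = 1024      # assumption: binary gigabytes


def global_file_mb(n_nodes: int) -> int:
    """Total size of the shared file, (96*n) MB."""
    return MB_PER_NODE * n_nodes


def per_node_mb_per_sec(aggregate_mb_per_sec: float, n_nodes: int) -> float:
    """Average throughput each node must sustain for a given aggregate rate,
    assuming the load is spread evenly (an idealization)."""
    return aggregate_mb_per_sec / n_nodes


if __name__ == "__main__":
    for n in (25, 29, 32):
        size = global_file_mb(n)
        rate = per_node_mb_per_sec(MB_PER_GB, n)
        print(f"{n} nodes: {size} MB file, {rate:.1f} MB/sec per node for 1 GB/sec")
```

Under these assumptions, hitting 1 GB/sec with 25 to 29 nodes only requires a few tens of MB/sec from each node, which helps explain why inexpensive IDE disks were sufficient.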

The cost comparison is even more interesting. ASCI Red spent about $1 million at the time to achieve 1 GB/sec I/O throughput. Dr. Mache spent about $7,200. They beat the I/O price/performance by over a factor of 100!
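The "factor of 100" claim is easy to check from the two cost figures above. A short sketch, treating both systems as delivering the same 1 GB/sec (the variable and function names are illustrative):

```python
ASCI_RED_COST = 1_000_000   # dollars, approximate figure quoted above
PC_CLUSTER_COST = 7_200     # dollars, approximate figure quoted above
THROUGHPUT_GB_PER_SEC = 1.0  # both systems reached about 1 GB/sec


def dollars_per_gb_per_sec(cost: float, throughput: float) -> float:
    """Price/performance: dollars spent per GB/sec of I/O throughput."""
    return cost / throughput


ratio = (dollars_per_gb_per_sec(ASCI_RED_COST, THROUGHPUT_GB_PER_SEC)
         / dollars_per_gb_per_sec(PC_CLUSTER_COST, THROUGHPUT_GB_PER_SEC))

if __name__ == "__main__":
    print(f"Price/performance advantage: about {ratio:.0f}x")
```

The ratio works out to a little under 139, comfortably "over a factor of 100."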

Kent Milfeld and his associates at TACC have examined PVFS1 performance in a cluster with a simple read/write code and a simulated workload code. The first study focused on 16 Intel PIII/1 GHz single CPU nodes connected with Fast Ethernet. They varied the number of nodes that were assigned as I/O servers with the remaining number of nodes assigned as clients with the sum of the two always 16. They found that 8 I/O servers and 8 clients gave the best performance for the simple read/write test code. They also found that the Fast Ethernet network handicapped the throughput of PVFS1.

A second system with 32 nodes of dual PIII/1 GHz connected with Myrinet 2000 was also tested. In these tests, they allowed one of the two CPUs to be used as a client and one to be used for an I/O server. They found that splitting the functions on a dual CPU system produced higher throughput than using dedicated nodes. The most likely reason is that a portion of the I/O was local to the nodes. They also found that an equal number of clients to I/O servers produced the best performance. This result is basically the same overlapped node configuration of Dr. Mache.

Monica Kashyap et al. at Dell Computers performed a similar study. They used 40 Dell 2650 nodes with dual 2.4 GHz Intel Xeon processors connected with Myrinet. Up to 24 nodes were used as compute nodes and up to 16 nodes as I/O servers. Each I/O server had five 33.6 GB SCSI drives. They used a test code from ROMIO, called perf, that performs concurrent read/write operations to the same file. They examined two types of write access, with and without file synchronization (MPI_File_sync), and two types of read access, without file synchronization and after file synchronization.

In general, they found that without synchronization they could achieve very high levels of throughput for write operations. Interestingly, for a small number of I/O servers, you could rapidly increase the number of clients from 4 to 24 without too much impact on the overall throughput. Including synchronization ensures that the data is on the disk before returning from the function call and, as expected, reduced the throughput. However, the general observation that a small number of I/O servers is somewhat insensitive to the number of clients, up to the number tested, was still true. The file read access testing exhibited the same trends as the write performance.

The differing results are probably due to the complex nature of optimizing the number of I/O servers and the configuration options. There are two interesting things you can take away from these studies. First, you can "dial-in" your desired performance by adding I/O servers to a number of clients until you reach the desired throughput. This option is cost effective because you only add the number of I/O servers needed for a given level of performance. Second, the option of using compute nodes as both clients and I/O servers has been shown to be cost effective, but could also lead to some network congestion if multiple jobs are running at the same time.
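As a first estimate for the "dial-in" approach, you can divide the target throughput by what one I/O server delivers. The sketch below assumes throughput scales linearly with the number of I/O servers, which the studies above do not guarantee (network and client limits will eventually cap it). The per-server figure is a hypothetical measurement you would take on your own hardware, and `servers_needed` is an invented helper name.

```python
import math


def servers_needed(target_mb_per_sec: float, per_server_mb_per_sec: float) -> int:
    """Estimate the number of I/O servers for a target aggregate throughput.

    Assumes ideal linear scaling; treat the result as a starting point
    and then measure, adding servers until the target is actually reached.
    """
    if target_mb_per_sec <= 0 or per_server_mb_per_sec <= 0:
        raise ValueError("throughput values must be positive")
    return math.ceil(target_mb_per_sec / per_server_mb_per_sec)


if __name__ == "__main__":
    # Hypothetical numbers: 1024 MB/sec target, 40 MB/sec measured per server.
    print(servers_needed(1024, 40))
```

Rounding up matters here: a fractional server is not an option, so the estimate always errs on the side of meeting the target.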

Increased Disk Performance

In some cases, people have found that the underlying disk speed is the primary bottleneck. You can tune the disk for improved performance. People have been using the command hdparm for several years to improve the performance of disk drives. See the Resources Sidebar for more information.

Additionally, there is an easy thing you can do to improve disk performance, namely using RAID (Redundant Array of Inexpensive Disks). There are several RAID levels you can use to improve performance. At the simplest level you can use multiple disks in a RAID-0 (striping) configuration. As part of their study, Dell looked at 1 to 4 disks in a hardware RAID-0 configuration. They found that while the number of disks had only a small impact on read performance, increasing the number of disks in the RAID-0 set had a large impact on write performance, particularly for the file synchronization case (synchronizing your data is always a good idea).
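To see why RAID-0 helps, it is useful to look at how striping spreads consecutive chunks of data across the disks. The following is a simplified sketch of the usual round-robin mapping, not the code of any particular RAID implementation; the 64 KiB chunk size and the function name are assumptions made for illustration.

```python
def raid0_locate(logical_offset: int, n_disks: int, chunk_size: int = 64 * 1024):
    """Map a logical byte offset to (disk index, byte offset on that disk)
    for a simple round-robin RAID-0 layout."""
    if logical_offset < 0 or n_disks < 1 or chunk_size < 1:
        raise ValueError("invalid arguments")
    chunk_index = logical_offset // chunk_size   # which chunk of the volume
    disk = chunk_index % n_disks                 # chunks rotate across the disks
    stripe = chunk_index // n_disks              # full rows of chunks before this one
    offset_on_disk = stripe * chunk_size + logical_offset % chunk_size
    return disk, offset_on_disk


if __name__ == "__main__":
    # Walk through the first few chunks of a 4-disk set.
    for chunk in range(6):
        print(chunk, raid0_locate(chunk * 64 * 1024, n_disks=4))
```

Because neighboring chunks land on different disks, a large sequential write keeps all of the disks busy at once, which is consistent with the write improvement Dell observed as disks were added.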

You could also use RAID-5, which would also give you some fault tolerance (we'll discuss this in the next column). For increased reliability you could combine RAID-0 with RAID-1 (mirroring). For whatever RAID level you select, you can use a dedicated hardware RAID controller or use the software RAID that is built into Linux.

Configuring software RAID is rather easy. There are some wonderful HOWTO articles on the web about configuring and maintaining a software RAID set. Daniel Robbins has written two very good articles for IBM's developer website (see Resources Sidebar). If you use software RAID, remember that you will see an increase in CPU usage due to the RAID. However, this impact is traded against improved data throughput from RAID-0. Also, the speed of modern processors and the use of dual processor motherboards usually minimize the impact for normal operations.

The other option is to use a true hardware RAID card, which offloads the CPU and minimizes the impact on the node. However, in some cases, the RAID card is actually slower than software RAID because the CPU in the node is much faster than the processor on the RAID card.

Underlying File system

PVFS is a virtual file system built on top of an existing file system. Thus, the speed of PVFS is affected by the speed of the underlying file system because PVFS relies on the underlying file systems to write the data to the actual disk.

Nathan Poznick, a frequent contributor to PVFS, performed some tests on PVFS2 and the effect of the underlying file system. He tested ext2, ext3 (data=ordered), ext3 (data=writeback), ext3 (data=journal), jfs, xfs, reiserfs, and reiser4. The tests were performed with a single server and a single client (see the Resources Sidebar for a link to an explanation of the ext3 journaling modes). The server was running SUSE Enterprise Server 9 with a 2.6.8.1-mm1 kernel and the client was running Red Hat 7.3 with a 2.4.18 kernel. He performed two tests, a file creation test and a data test. The file creation test used the Linux command touch, and the data test used dd.

For the touch test, ext2 was the fastest, followed closely by xfs. Reiser4 was the slowest in the test by a factor of 10. For ext3, the ordered and writeback journaling modes were about the same speed and far faster than the journal mode. For the dd test, jfs was the fastest, followed somewhat closely by ext2 and xfs. Again, reiser4 was the slowest by a factor of 20. For ext3, the writeback journaling mode was a bit faster than the ordered mode, but both were about one-third faster than the journal mode. Nathan admits that the tests are somewhat unscientific in that the file systems could have been tuned to provide better performance. Also, note that reiser4 was probably not a final version.

Even Nathan's simple tests show the effect of the underlying file system on the performance of PVFS.
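If you want to try a similar comparison yourself, the following is a rough Python analogue of a touch-style file creation test and a dd-style sequential write test. It is not Nathan's actual procedure: the file count, data size, block size, and function names are all assumptions chosen for illustration. By default it runs in a temporary directory; to exercise PVFS you would pass a directory on your PVFS mount.

```python
import os
import tempfile
import time


def time_file_creation(directory: str, count: int = 1000) -> float:
    """Create `count` empty files (similar in spirit to touch); return seconds."""
    start = time.perf_counter()
    for i in range(count):
        with open(os.path.join(directory, f"file_{i}"), "w"):
            pass
    return time.perf_counter() - start


def time_sequential_write(directory: str,
                          total_bytes: int = 16 * 1024 * 1024,
                          block_size: int = 1024 * 1024) -> float:
    """Write zero-filled blocks sequentially (similar in spirit to dd); return seconds."""
    block = b"\0" * block_size
    path = os.path.join(directory, "data.bin")
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(total_bytes // block_size):
            f.write(block)
        f.flush()
        os.fsync(f.fileno())  # make sure the data has reached the disk
    return time.perf_counter() - start


def run(directory=None):
    """Run both tests in a scratch directory created under `directory`."""
    with tempfile.TemporaryDirectory(dir=directory) as scratch:
        return time_file_creation(scratch), time_sequential_write(scratch)


if __name__ == "__main__":
    create_s, write_s = run()
    print(f"file creation: {create_s:.3f} s, sequential write: {write_s:.3f} s")
```

Run it several times on each file system and compare the trends rather than individual numbers, since caching and background activity can easily skew a single run.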

| Sidebar One: Links Mentioned in Column |
| --- |
| First IBM Software RAID article |

This article was originally published in ClusterWorld Magazine. It has been updated and formatted for the web. If you want to read more about HPC clusters and Linux, you may wish to visit Linux Magazine.

Dr. Jeff Layton hopes to someday have a 20 TB file system in his home computer. He lives in the Atlanta area and can sometimes be found lounging at the nearby Fry's, dreaming of hardware and drinking coffee (but never during working hours).