I usually write a very long, detailed summary of SC, but I'm hoping to make this one a bit shorter. It's not that SC07 wasn't good - it was. It's not that Reno was bad - it was. It's that I'm utterly burned out and still trying to recover. I've never been so tired after a Supercomputing conference before. I actually think that's a good thing (Doug will be expounding on why). If I managed to sleep 4 hours a night, I was lucky. If I managed to actually eat one meal (or something close to it), I had a banquet. But there were some cool things at SC07 and I hope I do them justice.

What was the IEEE thinking?

If you recall, I thought Tampa Bay was not a good location for the conference. The exhibit floor was very cramped (there were booths out in the hall), the hotels were spread all over Florida, and there was absolutely nothing to do around the conference center. Then some person who scheduled a cigar and cognac party discovered that in Tampa the cigar bars all close on Monday (nudge, nudge, wink, wink, know what I mean? know what I mean?). So I had high hopes about Reno. And let's not forget about the leaky roof in the exhibit hall.

The exhibit floor, while fairly large, was actually split across several rooms. This kind of spread things out a bit, but at least all of the exhibitors were in one place. But, once again, the hotels were spread out. However, the IEEE committee did a good job in providing luxury coaches to and from the hotels and the convention center. On the negative side, if you had a meeting with a company that had a "whisper suite" or meeting room, you were virtually guaranteed to walk a bit since they were all well away from the conference center. All of them were in the hotels, which meant that you had to negotiate the casino maze to find them. Of course this was the fault of the hotels and not IEEE, but I can't tell you how hard it was to wander through the casinos trying to find a specific room. Reno itself had a good number of restaurants and places to go (I went to the Beowulf Bash at a cool Jazz Bar -- nice place).

However, my overall opinion of Reno, if you haven't guessed by now, was that it's not the best place to hang out. So it looks like IEEE is 0 for 2 over the last two years. Next year's SC is in Austin, which I know has lots of good restaurants, places to go, etc. So let's hope that IEEE can pull this one out of their a** because their recent track record is not good (with me anyway). But my intention is not to complain about the location, etc. The conference is about HPC, so let's talk about that.

Themes and Variations

I always try to find a theme in the SC conference, but if you read my blogs you will find out that I actually found 3 themes at SC07. They are:

- Green Computing

- Heterogeneous Computing

- The Personal SuperComputer

Green Computing (but why aren't the boxes Green?)

The world seems to be captivated by everything Green (that is, after being captivated by the meltdown of Britney Spears). We're all looking for ways to improve our use of natural resources and reduce our greenhouse gas emissions, and HPC (High Performance Computing) is no exception. Everywhere you walked on the floor you saw companies stating how they improved the power consumption and cooling of systems. I think I even saw a company that claimed the color of their 1U box was specifically chosen to reduce the power consumption and cooling. While I'm exaggerating of course, almost all companies claimed that their products were redesigned to make them more green. In my opinion, some of these claims were warranted and some were not.

Green Computing will become a mantra for many customers now and in the future, and with good reason - power and cooling requirements are reaching epic proportions. Many data centers can't produce enough cooling for the compute density that vendors can deliver. Sometimes the problem is that there just isn't enough cooling air, but many times there isn't enough pressure to push the cool air to the top of the rack. APC has done a tremendous amount of research and development to understand the power and cooling of high density data centers. For example, APC explains that for racks with conventional front to back cooling and a power requirement of 18kW per rack (a fairly dense rack), the rule of thumb for cooling requirements is about 2,500 cfm (Cubic Feet per Minute) of cooling air through the rack. They go on to explain that a conventional perforated cooling tile for under the floor cooling can only provide about 300 cfm of cooling air. This means you need more than 8 tiles to cool a single rack! On the other hand, grated tiles can provide up to about 667 cfm of cooling air to a rack, requiring only about 4 tiles to cool a single rack. Either way, the cooling requirements are rather large for high density systems.
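As a rough check on those tile counts, the sketch below divides the rack airflow by the per-tile airflow and rounds up, since you can't install a fraction of a tile. The airflow figures are the ones quoted from APC; the function name is only illustrative.

```python
import math

RACK_AIRFLOW_CFM = 2500        # rule-of-thumb airflow for an 18 kW rack (from the text)
PERFORATED_TILE_CFM = 300      # conventional perforated tile (from the text)
GRATED_TILE_CFM = 667          # grated tile (from the text)


def tiles_needed(rack_cfm, tile_cfm):
    """Return the whole number of tiles needed to supply rack_cfm of air."""
    return math.ceil(rack_cfm / tile_cfm)


perforated_tiles = tiles_needed(RACK_AIRFLOW_CFM, PERFORATED_TILE_CFM)
grated_tiles = tiles_needed(RACK_AIRFLOW_CFM, GRATED_TILE_CFM)

print(f"Perforated tiles per rack: {perforated_tiles}")
print(f"Grated tiles per rack:     {grated_tiles}")
```

The exact ratio for perforated tiles is about 8.3, so rounding up gives 9 tiles rather than 8. The grated-tile ratio is about 3.75, which rounds up to 4.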

Don't forget that if you have 2,500 cfm of air going in, you will have 2,500 cfm of hot air coming out. This air needs some kind of return. If you have a 12 inch round duct for the return air, 2,500 cfm requires the air move at about 35 mph! This also means the air coming out of a cooling tile is also going to have a high velocity.
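The duct velocity is easy to sanity check: divide the volumetric flow by the cross-sectional area of the duct and convert units. This is a minimal sketch that assumes uniform flow across a perfectly round 12 inch duct and ignores losses.

```python
import math

AIRFLOW_CFM = 2500            # cubic feet per minute (from the text)
DUCT_DIAMETER_IN = 12         # round return duct diameter in inches (from the text)

FEET_PER_MILE = 5280
MINUTES_PER_HOUR = 60

# Cross-sectional area of the duct in square feet
duct_radius_ft = (DUCT_DIAMETER_IN / 12) / 2
duct_area_sqft = math.pi * duct_radius_ft ** 2

# Velocity = volumetric flow / area, then convert ft/min to mph
velocity_ft_per_min = AIRFLOW_CFM / duct_area_sqft
velocity_mph = velocity_ft_per_min * MINUTES_PER_HOUR / FEET_PER_MILE

print(f"Duct area:    {duct_area_sqft:.3f} sq ft")
print(f"Air velocity: {velocity_ft_per_min:.0f} ft/min ({velocity_mph:.1f} mph)")
```

Under these assumptions the result comes out a little over 36 mph, in line with the "about 35 mph" figure.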

So power and cooling are big deals for today's dense systems. We can and need to design data centers to better cool today's systems. I talked to a few people at the show, and a number of them were asking about liquid cooling. You can get what I call liquid cooled assist devices today from APC and from Liebert. Both devices use liquid flowing through a device to cool air exiting the rack. Some of the devices are self-contained. That is, the liquid never leaves the device. Some of the devices rely on chilled water inside the data center (somehow this all seems like back to the future when Cray had liquid cooled systems).

So there are many ways to cool a data center - too many to discuss for this article. In addition to more efficient cooling, vendors have focused on more efficient processors that produce less heat.

Transmeta was one of the first to develop products targeted at reducing the power consumption of systems without unduly hurting performance. This led to companies like RLX and Orion Multisystems using Transmeta processors in HPC clusters. But, unfortunately, these companies didn't survive (I like to think that these companies gave their "lives" so to speak, to advance technology for the rest of us).

Today we have SiCortex, which is making systems with a very large number of low power CPUs (derived from embedded 64-bit MIPS chips). They installed their first machine at Argonne National Laboratory this year and are working on delivering other systems. The approach that SiCortex has taken is to use large numbers of slower, low power CPUs. One of the other keys to their performance is the use of a very fast network to help applications scale to a large number of processors. At SC07 they showed their new SC072 workstation that uses their low power CPUs and networking to produce a box with a peak performance of 72 GFLOPS using less than 200W. While the performance may not be earth shaking, the goal of the SC072 is to provide software developers with a platform that has a meaningful number of cores and is identical to their full production system.

The picture below in Figure One shows the inside of a SC072 workstation.

You can see the 12 heat sinks (heat sinks not heat sinks+fans) in the picture. Under each heat sink are 6 CPUs for a total of 72 CPUs. Each CPU is capable of 1 GFLOPS of theoretical performance, so you get 72 GFLOPS in this small box. I'll be writing a follow-up article on Personal Supercomputers where I will go into more depth about the SC072. Needless to say it is one cool box (figuratively and literally).
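The SC072 numbers can be put together in a few lines. This sketch uses only the figures quoted for the box and treats "less than 200W" as an upper bound on power, so the efficiency it prints is a lower bound on theoretical peak per watt. That is an assumption made here for illustration, not a measured result.

```python
HEAT_SINKS = 12               # from the text
CPUS_PER_HEAT_SINK = 6        # from the text
GFLOPS_PER_CPU = 1.0          # theoretical peak per CPU (from the text)
POWER_BUDGET_W = 200          # "less than 200W", treated here as an upper bound

total_cpus = HEAT_SINKS * CPUS_PER_HEAT_SINK
peak_gflops = total_cpus * GFLOPS_PER_CPU

# Peak MFLOPS per watt if the box drew the full 200 W (a lower bound on peak efficiency)
min_peak_mflops_per_watt = peak_gflops * 1000 / POWER_BUDGET_W

print(f"CPUs:                 {total_cpus}")
print(f"Peak performance:     {peak_gflops:.0f} GFLOPS")
print(f"Peak efficiency (>=): {min_peak_mflops_per_watt:.0f} MFLOPS/W")
```

Keep in mind this is theoretical peak, not sustained performance on a benchmark, so it shouldn't be compared directly with measured efficiency numbers.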

Cluster in a Box

Sun brought one of their "clusters in a shipping container" solutions to the show out in the parking lot. I didn't get a chance to go into the shipping container but I peeked inside. It's a very cool cluster idea (again figuratively and literally). You bring it in on an 18-wheel truck, plug in the network, the chilled water and power, and bingo - a cluster. Since they are using chilled water it's a very green solution. Rackable has a similar solution.

Green500

SC07 was the launch point for the Green500. It's a website devoted to listing the top 500 most efficient systems in the world. Dr. Wu-chun Feng, previously of Los Alamos and now at Virginia Tech, has been a champion of lower power systems and started the Green500 to promote the idea of "Green Computing." The inaugural list, which coincides with the Top500 list being announced, was announced at SC07. The top 5 machines are all IBM Blue Gene/P systems. The #1 system is at the Science and Technology Facilities Council at the Daresbury Laboratory and achieved 357.23 MFLOPS per Watt. The #6 system was a Dell PowerEdge 1950 system at the Stanford University Biomedical Computational Facility and achieved 245.27 MFLOPS per Watt (this is the highest ranking cluster on the list). The lowest ranking machine was ASCI Q, which is an old AlphaServer system, at Los Alamos. It achieved only 3.65 MFLOPS per Watt (ouch!).
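To get a feel for how wide the spread is, the snippet below compares the three efficiency figures quoted above, using ASCI Q as the baseline. The dictionary labels are just shorthand made up for this example.

```python
# MFLOPS per Watt figures quoted from the inaugural Green500 list
mflops_per_watt = {
    "Blue Gene/P, Daresbury (#1)": 357.23,
    "Dell PowerEdge 1950, Stanford (#6)": 245.27,
    "ASCI Q, Los Alamos (last)": 3.65,
}

baseline = mflops_per_watt["ASCI Q, Los Alamos (last)"]

# How many times more efficient each system is than the lowest ranked machine
ratios = {name: value / baseline for name, value in mflops_per_watt.items()}

for name, ratio in ratios.items():
    print(f"{name:38s} {mflops_per_watt[name]:8.2f} MFLOPS/W  {ratio:6.1f}x ASCI Q")
```

By these numbers the top system does nearly 100 times more work per watt than the lowest ranked one, and the best cluster is still around 67 times better.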

This initial list is built from the November 2007 Top500 list, so it gives us, the HPC community, a good baseline for starting a Green500 list. In the future I hope that the list will expand to include smaller machines such as Microwulf. I think people will be surprised how power efficient smaller machines can be, particularly if they use diskless nodes.

Heterogeneous Computing

The second trend I see is somewhat opposite to Green Computing, but still has its merits if it works for your application. Since we are fundamentally limited by the same CPUs, hard drives, interconnects, and memory, the power consumption of the core systems is about the same. Also, CPU clock speeds are slowly increasing, but nowhere near the previous rate. Many people are looking at additional types of hardware for ways to accelerate baseline performance. This trend is often referred to as Heterogeneous Computing. The current major contenders for Heterogeneous Computing are:

- FPGAs (Field Programmable Gate Arrays)

- Clearspeed

- GPUs (Graphics Processing Units)

- Cell processor from IBM and Sony

All 4 are devoted to providing great leaps in processing capability, at a good power/performance point, and hopefully, at a good price point. With the exception of the Cell processor, however, none of these technologies are designed to operate as stand-alone systems. That is, they all need a host of some sort.

All of these technologies (and companies) are trying to provide increases in computing power in different ways. I think I've said this before, but a couple of years ago, my bet was placed on GPUs. The reason for my bet is simple - commodity pricing. Commodity pricing brought down the big iron of HPC and commodity pricing is doing wonders for the electric car industry. So I think commodity pricing can help GPUs become the winner in the accelerator contest.

The other three technologies, FPGAs, Clearspeed, and Cell processors, are either very niche products, as in the case of Clearspeed, or somewhat niche products, as in the case of Cell processors. At the highest end, Cell processors are sold in perhaps the hundreds of thousands or low millions due to their use in the Sony PS3. But GPUs are sold by the millions every year (last year Nvidia sold over 95 million GPUs). The crazy gamers out there who have to have the latest and greatest fastest GPU(s) so they can enjoy their games have been pushing the GPU market really hard the last several years. Plus people now have multiple machines in their homes - multiple computers and game consoles - all of which have GPUs in them. So this means that GPUs have become commodities. I can go into any computer store anywhere and find very fast graphics cards. Heck, I can even go into Walmart and find them! (When you're in Walmart, you have arrived.) So Nvidia and ATI (AMD) can spread development costs across tens of millions of GPUs, allowing them to sell the cards for a low price. God bless those gamers.

The other technologies simply don't have this commodity market working for them. This means they have to spread their development costs over a much, much smaller number of products, which forces the prices way up. This is why I think that GPUs will be the winner in this accelerator contest. Also, I'm not alone in this belief.

I think everyone saw the AMD announcement about a double precision GPU card that does computations. The board has 2GB of memory (the largest that I know of with GPUs), uses 150W of power (while it sounds like a lot, it isn't too bad), and costs $2,000 (that's a bit out of the commodity range). In addition, AMD is finally going to offer a programming kit for the GPU. They will be offering a derivative of Brook called Brook+.

Nvidia was showing their Tesla GPU computing product. I stopped by the booth and I was amazed. They were showing off a 1U box that had four Teslas on board that provide well over 1 TFLOPS in performance. Here is a picture of one.

Just behind the 1U box on the left hand corner you can see a Tesla card and you can see the back of the card. Notice that there isn't a video connection :) You can also connect multiple 1U boxes using what I think is a PCI-e connector. Here's a picture of a Tesla cluster as well.

Looking at the bottom of the rack (it's a half-rack), you can see the row of fans in the front of the Tesla 1U nodes. You will also see four 1U nodes in the rack. I'm not sure what the other nodes are in the rack. The Tesla nodes are connected via a PCI-e cable.

Nvidia has released a free programming tool called CUDA. It uses basic C with some extensions and new data types. Basically, the CUDA compiler handles the GPU-specific code and spits out the remaining non-GPU code, which you can then compile with a regular C compiler. I spoke with a couple of their developers that I know very well. They say it's very easy to write code with CUDA. These guys are very bright (actually extremely bright) so your mileage may vary, but in general, I trust their opinions. There are even some rumors of some kind of Fortran extensions for CUDA. So go out and get your G80 or better card and start coding!!

Commodity CPUs are Getting FAST

While HPC is already about performance, the other thing that I think was significant at SC07 is that Intel announced their Penryn family of chips. While normally this isn't such a big deal, the benchmarks that Doug Eadline, our fearless head monkey, has run indicate that it is a significant improvement in performance over past quad-core designs. Doug's benchmarks were sponsored by Appro. Look at Appro's website for a copy of the paper. It's truly amazing.

I see these benchmarks as being a new trend in commodity processors. The CPU manufacturers are introducing changes into their chips that really benefit the HPC community. Look at the fact that SGI and Cray are using commodity processors in some of their products. Commodity CPUs are getting FAST.

The next generation of Intel processors, currently with the code name Nehalem, looks to be faster still. They will feature an on-board memory controller, a new on-the-board interconnect called QuickPath (somewhat like Hypertransport), and up to 8 cores per socket. The rumor is that Nehalem will be an amazing performing CPU. I've even heard rumors that it will rival IBM's Power6 CPU. All of this at a commodity price - wow! We'll have to wait for real benchmarks, but the promise looks great (To quote a famous line from a movie, "you can wish in one hand and cra* in the other - which one will you get first?" - can anyone tell me what movie that is from?).

Variations

I saw a few other things around the floor that impressed me but didn't really fall into the "themes" I've previously mentioned. I'm going to mention two in this article that impressed me.

Flextronics and the Personal Supercomputer

The first one is a work group personal cluster that was developed by, of all vendors, Flextronics. Flextronics is one of the largest manufacturers in the computer world. They make motherboards, cases, and systems for almost every vendor you can think of at SC07. Most of them don't admit it but they do. Flextronics also can design components for vendors. They have honed the ability to manufacture, ship, and support components to a fine art. There aren't many, if any, companies that can do it better. So, it's very interesting that they are now developing systems (why not? They do all of the other pieces extremely well).

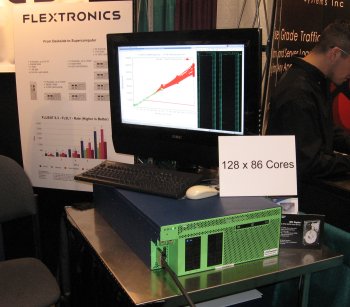

They have designed a chassis that has, I think, four two-socket boards inside. The picture below in Figure Four shows the chassis.

You can see the Infiniband cable coming out of the front of the case. It connects to a chassis that is below the one in the picture.

If you put quad-core processors in each socket, then you have 32 cores per chassis. Flextronics was showing 4 chassis for a total of 128 cores. They were also running ScaleMP on the system. ScaleMP has a product referred to as vSMP that allows you to connect several systems together to make them appear as a large SMP machine. You don't have to use MPI to code for distributed nodes. Using vSMP allows the disparate nodes to appear as one large machine. The plot on the screen above the chassis shows the memory bandwidth as a function of the number of cores. To me the plot looks fairly linear. I'll have to look at ScaleMP a little closer.

I'm working on an article about Personal Supercomputers (PSCs) that gives a little history of PSCs and presents current PSCs. Look for it at a ClusterMonkey near you.

Verari and Storage Blades

Verari is an HPC vendor that has expanded beyond just HPC, but still always pays attention to its HPC roots. I always enjoy going to their booth and seeing what new things they have cooked up. When I stopped by this year it was no exception. Verari is one of the pioneers in the commodity blade area. They also utilize vertical cooling in their HPC systems since, guess what, hot air rises, so just take advantage of natural convection to push the hot air upward and out of the rack. Over the years their blades have evolved and at SC07 I got to see one of their new storage blades as shown below.

The top blade in the image is the storage blade. It's mounted just like it would be in a rack.

The blade in the image shows a total of 12 SATA drives that are 1 TB in size for a total of 12 TB in a blade. From what I understand you can connect this storage blade via a connector to a node with a RAID card. I also think that the drives in this blade are hot-swappable. Very nice design.

One word of caution though. This is about the limit that you want connected to a single RAID card (12TB). While I have a file system article coming to ClusterMonkey that explains this in more detail, the fundamental reason is that if you lose a drive, in the case of RAID-5, or 2 drives, in the case of RAID-6, you are almost guaranteed to hit a read error while reconstructing the data. This will cause the reconstruction to fail and you will have to restore all of the data from a backup. Restoring 12TB of data from backup will take a long time.
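A back-of-the-envelope version of that argument can be sketched as follows. The key input is the unrecoverable read error (URE) rate of the drives, which isn't given here. The sketch ASSUMES one error per 1e14 bits read, a figure commonly quoted on data sheets for desktop SATA drives, and it assumes bit errors are independent. Treat the output as illustrative only.

```python
import math

DRIVE_CAPACITY_BYTES = 1e12      # 1 TB drive, decimal terabytes (from the text)
DRIVES_IN_BLADE = 12             # from the text
ASSUMED_URE_PER_BIT = 1e-14      # ASSUMPTION: one unrecoverable read error per 1e14 bits read


def rebuild_failure_probability(surviving_drives, ure_per_bit=ASSUMED_URE_PER_BIT):
    """Probability of at least one unrecoverable read error while reading
    every bit on the surviving drives, assuming independent bit errors."""
    bits_to_read = surviving_drives * DRIVE_CAPACITY_BYTES * 8
    # 1 - (1 - p)^n, computed with log1p/expm1 to limit floating-point round-off
    return -math.expm1(bits_to_read * math.log1p(-ure_per_bit))


raid5_risk = rebuild_failure_probability(DRIVES_IN_BLADE - 1)  # RAID-5, one drive lost
raid6_risk = rebuild_failure_probability(DRIVES_IN_BLADE - 2)  # RAID-6, two drives lost

print(f"RAID-5 rebuild, chance of a read error: {raid5_risk:.0%}")
print(f"RAID-6 rebuild (2 drives lost):         {raid6_risk:.0%}")
```

With this assumed error rate the odds of hitting a read error during reconstruction come out above one half in both cases. That's not a certainty, but it's bad enough to support the caution, and drives with a worse error rate or larger capacity push the number higher.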

SC07 - I've Recovered (I think)

It's been at least a week since SC07 and I think I've recovered. My sleep pattern was a mess but I think I've straightened that out. I still hear the faint sound of slot machines in my nightmares. I'm looking forward to SC08 and I'm going to definitely make more time to walk the floor and visit more booths, more friends, and my pillows much more often. I hope to see you there!

Jeff Layton is a cluster enthusiast who travels the world writing about clusters while trying to stamp out cluster/HPC stupidity wherever he can find it (it's definitely out there).