A Primer of Queuing and Scheduling

After a few months of reading about clusters on Cluster Monkey, you've gotten your cluster hardware, assembled it, selected a distribution, installed it, checked to make sure your nodes are up and running, learned some parallel programming, and written the highest performance "Hello, World" the universe has ever seen. So, you figure you're ready to turn your machine loose to your users, and amazing jumps in productivity are about to be seen.

In the immortal words of ESPN's Lee Corso, Not so fast, my friend!

Unless you're fortunate enough to be your cluster's only user (the ever growing Beowulf-in-the-basement crowd), selecting and configuring the appropriate resource management software is an absolutely critical step in administering your cluster. Outside of your choice of hardware, few things you do will have as big an impact on the user experience and performance your cluster provides.

In this column, we're going to start examining resource management with a look at the basic concepts of queuing and scheduling and why they are so important. Next month, we'll take a quick look at the resource management options available, get into the details of configuring and using this software, and give some examples of how some large clusters around the U.S. are run.

A Resource Management Primer

Resource management is not a problem that is unique to clustering (in fact, it's not unique to computing). In your workstation, keeping track of which processes are waiting to run, and selecting which one to run next, is one of the core functions of the operating system. The OS still serves this function in your cluster, however, the OS schedules each compute node independently of all others. In the cluster world, resource management is about deciding which jobs run on which of you compute nodes, and when.There are three basic components to resource management: queuing, scheduling and allocation. In actual implementation, the line between these components is often blurred. Allocation is simply the process of actually granting access to processors to a particular job. For instance, if you run a Scyld cluster, the bproc software takes care of the allocation. The queuing software keeps track of the jobs that are waiting to run. However, its job is more complicated than it sounds. The queuing software must dispatch jobs by interacting the allocator (or function as it), it must interact with the scheduler (or function as that too), and it must provide an interface to the users for submitting jobs and getting results back to them. In that sense, the queuing software can be the most critical part of your user environment.

The scheduler may be the most complicated piece of your resource management system. In the simplest since, the scheduler reorders the queue (or at least decides which job is at the head of it). This can be as simple as choosing to run the jobs in the order they are submitted. In reality, scheduling algorithms can be much more complicated. The popular and feature rich Maui scheduler has tens of thousands of lines of code dedicated to the scheduling algorithm itself.

In practice, setting up a scheduling system is not as simple as selecting one from each of these three categories. Your choice of cluster distribution may constrain you, and the available software may implement one, two, or all three of these functions. For instance, the popular PBS (Portable Batch System see PBS-Pro, OpenPBS, and Torque) family of resource managers is primarily a queue, but also has a built-in simple scheduler. This scheduler can be deactivated and replaced with Maui. Platform Computing's LSF (Load Sharing Facility) is designed as a complete resource management system, but the allocation piece can be handed off to bproc, and, although it contains a sophisticated scheduler of it's own, it can also be convinced to interact with Maui.

Why Bother with a Scheduler?

At this point, you might be asking if all this is really worth the trouble. Why can't we simply let people run their jobs? The answer, like in so many questions about clusters, is performance. In many (though certainly not all) cluster applications, you'd like jobs to have exclusive access to processors. Part of this is simply for bragging rights; you'd like jobs running on your cluster, especially parallel jobs, to go faster than they would on a single processor. But the answer runs much deeper than that. One complication is that processors are not the only limited resource in a computer system. If you are running parallel jobs, there's a pretty good chance it's because you need to solve fairly large problems. And if you are solving large problems, there's a a fair chance you are using a lot of memory. While modern operating systems are pretty good at switching between processors, memory is still a finite resource. The performance price for running out of physical memory can be large. If you issue two equal size jobs on the same compute node, and the combination of the two causes you to run out of physical memory, the total running time for the two jobs may be much larger than if you issued them one at a time.

Ensuring adequate memory capacity (or network bandwidth, or I/O capability) for your job makes a strong argument for restricting your cluster to one job on given compute node processor at a time, but it doesn't argue for a complicated scheduler. You could achieve exclusive access by simply running a job, waiting for it to complete, then starting the next job in the order they were received. But scheduling can still do far more for your performance. To fully explain this, let's define some basic performance metrics. Utilization is the amount of available processor cycles that are used doing useful work. So, whether you use all of your processors half the time, or half your processors all the time, your utilization is 50%. Throughput is the measure of how many jobs you complete in a given time period. If you are running a large cluster and selling access by the job, this is the metric you would care about. But your users may disagree. To them, the metric that matters most may be average turnaround time, which is how long from when they submit their job until they get a result back (another popular metric I won't use here is waiting time, the time a job spends waiting in the queue; waiting time plus execution time gives you turnaround time).

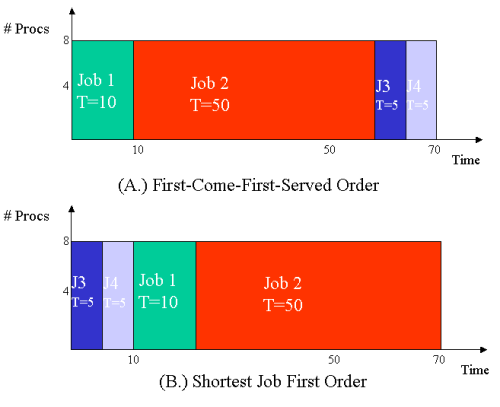

Let's look at a simple example. Suppose you have a small, 8 node cluster with single processor nodes, and suppose you are fortunate enough to have jobs that all require 8 processors, but the time to execute these jobs vary. Suppose at a given time four of these jobs are sitting in the queue waiting for execution, with a 10 minute job first, a 50 minute job second, and two 5 minute jobs after that. Figure One (a) shows a simple line graph of what would happen if these jobs were executed in the order received, a First-Come, First-Served (FCFS) scheduling algorithm. In this example, utilization is 100%, and you complete all four jobs in 70 minutes, so your average throughput is 1 job every 14 minutes. Since all processors were is use all the time in this ideal case, it's impossible to improve utilization and throughput. So, what's left for a scheduler to do? Well, let's look at the turnaround time. The first job completes 10 minutes after the start of our run. The second one completes 50 minutes later, or 60 minutes after the starting time, the third at 65, and the fourth after 70 minutes. So, our average turnaround time is (10 + 60 + 65 + 70)/4, which means each of our users waited on average 51.25 minutes for their jobs to complete. Now, let's try the same jobs with a different scheduling order. In Figure One (b), you'll see the same four jobs run Shortest-Job-First (SJF). Utilization is still 100%, throughput is still one job every 14 minutes, but now, the average turnaround time is (5 + 10 + 20 + 70)/4, or 21.25 minutes. So, on average each user spends a half hour less waiting for their results. A cluster running the SJF scheduler is going to seem much faster!

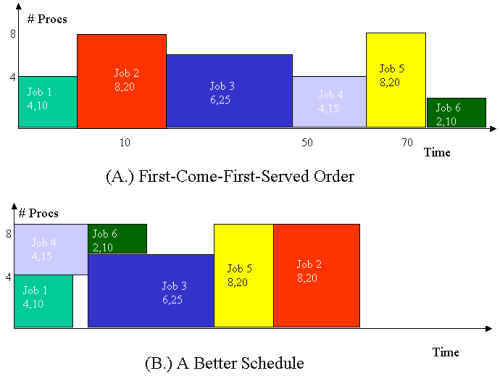

Most situations aren't quite so neat when you are dealing with clusters, as it is unlikely that each job will need the whole cluster. So, when deciding which jobs to run next, the scheduler needs to take into account both the number of processors the job needs and how long it is likely to run. Figure Two shows two scenarios with jobs of various size in both these dimensions. The top graph show a FCFS execution of these jobs, and the bottom shows a better schedule. Here, the scheduling isn't purely shortest job first, but rather a more complicated system where multiple jobs may be active at the same time (though still only one task on any given processor). The schedule shown in Figure Two (b) improves not only turnaround time, but utilization and throughput as well.

Real schedulers have a much harder problem. In both the examples above, I made two completely fallacious assumptions; that all jobs had arrived in the queue at the time the scheduler planned how to schedule them, and, more importantly, that the scheduler knew with perfect accuracy how long jobs would take before it executed them. In practice, schedulers must be able to accommodate new jobs arriving at random times, and wildly inaccurate user estimates of runtime. Plus, different jobs might differ in importance, and jobs may crash or hang. Most modern resource management systems also have more than one queue, to separate jobs of different length or different priority (for instance, to separate paying clusters from your ongoing search for extra-terrestrial intelligence). But even these simple examples show that the choice of scheduler can have a huge impact on the performance of your cluster.

Conclusions

This column has looked at the fundamental concepts of resource management, and hopefully given you some insight into why this is an important issue. Next month, we'll talk some more about available scheduling software, including the packages mentioned in this article (PBS in it's various forms, Maui, and LSF) along with Sun'sGridEngine, IBM's LoadLeveler, and perhaps a few others. We'll get into the critical issue of user interface, and show some quick configuration examples.This article was originally published in ClusterWorld Magazine. It has been updated and formatted for the web. If you want to read more about HPC clusters and Linux you may wish to visit Linux Magazine.

Dan Stanzione is currently the Director of High Performance Computing for the Ira A. Fulton School of Engineering at Arizona State University. He previously held appointments in the Parallel Architecture Research Lab at Clemson University and at the National Science Foundation.