A look at the history and state of some cluster distributions

Note: The information in this article is somewhat dated; check the Cluster Plumbing links for more up-to-date tools.

Welcome to Cluster Monkey and the Cluster administration column. The theme of this column is clusters from a system administration point of view. Sure, you can create all kinds of fantastic applications on your cluster, but that doesn't do anyone any good unless you can log in!

The cluster administrator has some unique challenges that your normal, everyday system administrator doesn't face. For starters, you are likely to be responsible for many more machines. While you may have one cluster, that cluster may consist of hundreds of individual nodes. From a hardware perspective, maintaining 512 cluster nodes is just about as hard as maintaining 512 individual workstations. Nothing about putting machines in a cluster makes disks, fans, or power supplies less likely to burn out. From the software perspective, though, running a 512-node cluster can be very different from running 512 workstations. There are really two facets to cluster administration: things you must do on a per-node basis, like fixing disk drives, and things you must do only once on a per-cluster basis, such as (hopefully) adding new users. The goal of your cluster distribution or management software, and your goal as an administrator, is to turn as many per-node administration tasks as possible into per-cluster tasks.

Over the last few years, cluster administrators have responded to these challenges and become an increasingly sophisticated bunch. However, for every administrator out there trying to tweak the last few bits per second out of their InfiniBand drivers, there seem to be several (at least judging by the traffic on the Beowulf mailing list) who have a big pile of machines and are wondering what they can do with them. So, in this column, we're going to start at the beginning, with what kind of distribution you want to use for your cluster, and work our way forward through all the topics cluster administrators care about. In the months ahead, we'll plow through a few of the more mundane tasks like installation and package updates, move through some of the more cluster-specific tasks like monitoring, queuing and scheduling, and file system alternatives, then move on to the more advanced world of dealing with faults and tuning network performance. Along the way, we'll solicit the opinions and best practices of many of the top cluster administrators around the country.

Rolling Your Own

Perhaps the most common way to build clusters, especially small ones, is simply to use a standard Linux distribution, such as Red Hat, Fedora, SuSE, or Debian. Many people are already familiar with these distributions, so using them is a way to "ease into" clustering. Most distributions include some version of message passing software, so almost no extra effort is needed to get up and running initially. Of course, this is just about the worst case from an administration point of view: not only must you repeat the full work of installing a single machine on every node of your cluster, you must also repeat every administrative task on every node.

Naturally, solutions like this don't last long in a community of hackers, and many tools to make things easier quickly started appearing. Solutions to the install problem emerged in the broader market, where individual administrators had to manage hundreds of headless systems for server applications as well as for clustering. The addition of PXE boot capabilities to the BIOS of most motherboards, the widespread use of DHCP, and the creation of network install tools like Red Hat's kickstart made it possible to perform large numbers of identical installs rapidly. These tools were a boon for cluster installation, as described in another column. But once the initial installation was complete, none of these tools helped with day-to-day administration.

This void was quickly filled by both vendors and the open source community with lots of scripts designed to handle common administrative tasks. The scripts grew in complexity, and custom GUIs soon appeared. Many open source tools for handling one aspect or another of system administration can now be found on SourceForge, and virtually every cluster vendor now distributes its own custom suite of management tools. Many of the vendor-specific suites continue to grow and have taken on some of the characteristics of the more sophisticated systems discussed below.

The Second Generation - Beyond Scripts

As clusters got larger and the number of software packages used on them increased, the use of a standard distribution plus a collection of scripts became less practical. Some people realized that keeping track of the configuration of the software on cluster nodes, and of the nodes themselves, would eventually require a more sophisticated approach. The first of the true cluster distributions began to appear. These distributions had all the features of a normal Linux distribution, but were cluster-aware: they included install programs specifically tailored to clusters, database systems for maintaining the configuration of your cluster, batch queuing systems, cluster monitoring software, and other cluster-specific features.

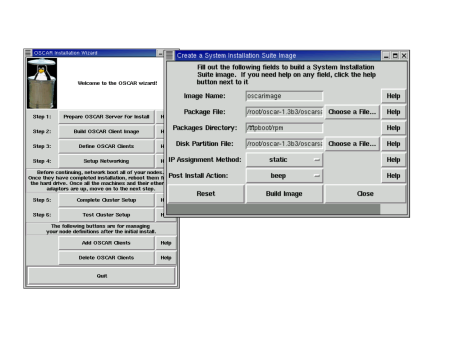

The two best-known and most successful of these cluster distributions are OSCAR and Rocks. The OSCAR (Open Source Cluster Application Resources) project was started at the DOE's Oak Ridge National Laboratory, and OSCAR and its variants are now maintained by a consortium known as the Open Cluster Group, which includes the original developers from Oak Ridge as well as a number of contributors from other labs and universities. OSCAR is designed for clusters from the ground up. It provides a cluster-aware install tool (shown in Figure One), which handles the installation of packages on your nodes, as well as the setup of users, accounts, and so on. In addition to all the standard packages (at this time, OSCAR installs packages from Red Hat 7.3 by default, though Mandrake support is also available, and the latest betas support newer versions), OSCAR also provides a number of cluster-related packages designed specifically to work with it. Notable among these from the administrator's perspective are C3 and Env-Switcher. C3 is the Cluster Command & Control suite, which provides a rich set of commands for managing files, running programs, or executing commands across all or some of the nodes in your cluster. Env-Switcher allows you to install multiple versions of the same tool and easily switch the default version on all nodes. This can be extremely useful for, say, switching between compiler versions or different implementations of MPI. We'll talk more about both of these tools in a later column. The OSCAR project has spawned several child distributions focusing on particular segments of the cluster world, such as thin OSCAR, for diskless cluster nodes, and OSCAR-HA (high availability), with failover capability.
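C3's own commands (such as `cexec`) do this job for you; the sketch below only illustrates the underlying idea of turning a per-node task into a per-cluster one by running the same command on every node in parallel. It assumes password-less `ssh` access to each node, and the `run_on_nodes` and `ssh_argv` helpers and any node names are hypothetical, not part of C3.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor


def ssh_argv(node, command):
    """Build the argv that runs `command` (a list of strings) on `node` over ssh."""
    # BatchMode=yes makes ssh fail instead of prompting for a password;
    # ConnectTimeout bounds how long we wait on an unreachable node.
    return ["ssh", "-o", "BatchMode=yes", "-o", "ConnectTimeout=10", node, *command]


def run_on_nodes(nodes, command, build_argv=ssh_argv, max_workers=32, timeout=60):
    """Run `command` on every node in parallel (hypothetical helper, not C3).

    Returns {node: (exit_status, output)}; exit_status is None if the command
    could not be started or timed out, and output then holds the error text.
    """
    def run_one(node):
        try:
            proc = subprocess.run(build_argv(node, command),
                                  capture_output=True, text=True, timeout=timeout)
            return node, (proc.returncode, proc.stdout)
        except (OSError, subprocess.TimeoutExpired) as exc:
            return node, (None, str(exc))

    # Threads are enough here: each worker just waits on an ssh child process.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(run_one, nodes))
```

For example, `run_on_nodes(["node01", "node02"], ["uptime"])` would run `uptime` on two hypothetically named nodes and collect each node's exit status and output in one place. Passing a different `build_argv` lets you swap ssh for another remote shell without touching the parallel logic.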

The Rocks project began at the San Diego Supercomputer Center, with collaboration from the Millennium project at UC Berkeley, and now has a large group of maintainers from labs, universities, and companies around the world. The Rocks user registry currently shows 168 clusters registered with the project, ranging from small clusters in high schools to very large clusters at national labs. Like OSCAR, Rocks's main strengths are its installation system and its SQL-based configuration database.

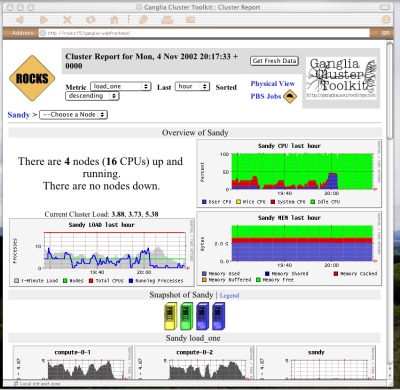

Rocks also provides a full suite of tools for running your cluster, including queuing and scheduling software, the usual MPI packages, tools for simplifying administration, etc. Rocks features the Ganglia cluster monitoring system, developed in many of the same labs that developed Rocks (although Ganglia is now available in other distributions as well). A screenshot of Ganglia is shown in Figure Two, showing memory and processor usage on cluster nodes. Ganglia is highly configurable, and supports collection of a number of different kinds of data (Ganglia will get more attention in a future column as well). The newest versions of Rocks include support for the latest 64-bit processors, including the Opteron and IA-64 chips.
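To give a feel for the kind of data Ganglia collects, here is a minimal sketch that reads the XML dump a Ganglia monitoring daemon (gmond) sends to a client that connects over TCP, and extracts each host's one-minute load average. It assumes gmond is listening on its usual default TCP port, 8649, and that the `HOST`/`METRIC` element names match your Ganglia version; the helper names and the `SAMPLE` data are made up for illustration.

```python
import socket
import xml.etree.ElementTree as ET


def fetch_gmond_xml(host="localhost", port=8649, timeout=5.0):
    """Read the XML dump that gmond writes to a TCP client, as raw bytes.

    Assumes gmond's TCP accept channel is on its usual default port, 8649.
    """
    chunks = []
    with socket.create_connection((host, port), timeout=timeout) as sock:
        while True:
            data = sock.recv(65536)
            if not data:  # gmond closes the connection after the dump
                break
            chunks.append(data)
    return b"".join(chunks)


def load_by_host(gmond_xml):
    """Map each HOST's NAME to its one-minute load average (the load_one metric)."""
    root = ET.fromstring(gmond_xml)  # bytes input, so the XML declaration is honored
    loads = {}
    for host in root.iter("HOST"):
        for metric in host.iter("METRIC"):
            if metric.get("NAME") == "load_one":
                loads[host.get("NAME")] = float(metric.get("VAL"))
    return loads


# A made-up, heavily trimmed sample in the same general shape as gmond's output.
SAMPLE = b"""<?xml version="1.0" encoding="ISO-8859-1"?>
<GANGLIA_XML VERSION="3.0.0" SOURCE="gmond">
  <CLUSTER NAME="example">
    <HOST NAME="node01"><METRIC NAME="load_one" VAL="0.50" TYPE="float"/></HOST>
    <HOST NAME="node02"><METRIC NAME="load_one" VAL="3.25" TYPE="float"/></HOST>
  </CLUSTER>
</GANGLIA_XML>"""
```

On a real cluster you might call `load_by_host(fetch_gmond_xml("headnode"))`, where `headnode` is a placeholder for a machine running gmond. Ganglia's own web front end does far more than this; the point is only that the raw data is plain XML that simple scripts can consume.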

Rocks and OSCAR have moved cluster administration a quantum leap forward. Where administrators were previously left to start with a standard Linux distribution and then cobble together a collection of home-grown and downloaded scripts to build a cluster, they now have full-featured software that comprehensively addresses the problem of running clusters. Perhaps even more importantly, OSCAR and Rocks have created support communities for cluster administrators. As with so many open source projects, there are mailing lists, FAQs, and web pages filled with information on running these tools. A number of commercial vendors have started distributing OSCAR, Rocks, or both, and these vendors can provide professional support for all of your cluster software.

The Third Generation - Re-envisioning the OS

While most of the second-generation solutions are still effective means of using and running even large clusters, they still fundamentally rely on an OS designed for a single system. A few groups of researchers have attempted to re-envision the basic functions of an operating system to tailor it specifically to a cluster environment. One of the most significant innovations in the cluster OS space is the bproc distributed process space, described in detail in another column. The bproc concept grew out of the original Beowulf project at NASA's Goddard Space Flight Center. Scyld Computing (a subsidiary of Penguin Computing) sells and supports a bproc-based system. Scyld also provides the beoboot system for booting and maintaining clients with a minimal OS, plus associated resource management and administration tools. If you purchase the Scyld version, you also receive extensive documentation and professional support, as well as a few more GUI tools for management.

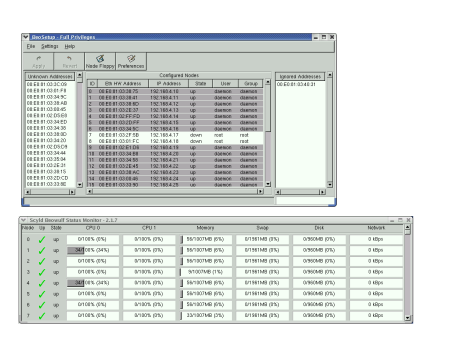

Figure Three shows the Scyld setup and node status graphical tools. Distributions built on bproc make administration of large clusters much simpler. The minimal OS running on the nodes and the capabilities of bproc remove most of the problems of maintaining user accounts, authentication, consistent versions of libraries across nodes, and so on.

The simplicity of maintaining clusters with this dramatically different approach comes at a cost, however. Because bproc systems are so different and not yet widespread, there is sometimes a lag before the latest versions of your favorite MPI implementation, scheduler, or commercial application become available. Since bproc changes some fundamental assumptions about the OS, there is frequently some porting to do, and it takes time for the busy engineers at Scyld to keep up. And since there is not yet general agreement on where the responsibilities of, for instance, the OS kernel end and those of the message passing libraries begin, there are occasional clashes: the latest MPICH versions provide a daemon for high-speed process creation, but bproc itself already provides this functionality. Differences like these will take time to iron out. However, if you do not need the absolute latest version of every tool, the Scyld approach can let you run very large clusters with relatively little effort.

Another substantial re-envisioning of the cluster OS is the openMosix approach. MOSIX provides a set of kernel patches that, like bproc, provide for automatic migration of processes from the head of your cluster to the computing nodes. MOSIX uses a set of algorithms to attempt to balance the load on all the nodes of the cluster, assigning and moving processes as it sees fit; it will migrate processes around your cluster to balance CPU, memory, or I/O usage. MOSIX has some of the same drawbacks as the bproc systems: it is different enough from the normal Linux model that certain packages, for instance resource managers and schedulers, won't work right away on MOSIX clusters, although MOSIX provides its own alternatives for these functions.
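MOSIX's real balancing algorithms are more sophisticated than this (they also weigh memory and I/O, as noted above). The greedy sketch below only illustrates the basic idea of load balancing by migration: repeatedly move the cheapest process from the busiest node to the idlest one until the imbalance falls below a threshold. The function name, the per-process CPU load numbers, and the threshold are all hypothetical.

```python
def plan_migrations(procs, threshold=1.0, max_moves=100):
    """Greedy load-balancing sketch (illustrative only; not MOSIX's algorithm).

    `procs` maps node name -> list of per-process CPU loads. Returns
    (moves, final), where moves is a list of (load, from_node, to_node)
    and final is the resulting node -> process-loads mapping.
    """
    nodes = {name: list(loads) for name, loads in procs.items()}  # don't mutate input
    moves = []
    for _ in range(max_moves):
        totals = {name: sum(loads) for name, loads in nodes.items()}
        busiest = max(totals, key=totals.get)
        idlest = min(totals, key=totals.get)
        gap = totals[busiest] - totals[idlest]
        if gap <= threshold or not nodes[busiest]:
            break
        proc = min(nodes[busiest])  # the cheapest process to migrate
        # Moving a process at least as large as the gap would not reduce
        # the imbalance, only shift it, so stop instead.
        if proc >= gap:
            break
        nodes[busiest].remove(proc)
        nodes[idlest].append(proc)
        moves.append((proc, busiest, idlest))
    return moves, nodes
```

For example, starting from `{"a": [2.0, 2.0, 1.0], "b": [], "c": [1.0]}`, the planner makes three moves and ends with every node carrying a total load of 2.0. The threshold exists because each real migration has a cost, so a balancer should not chase tiny imbalances.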

Much more research is being done in the cluster OS space, and new ideas, tools, and distributions spring up all the time. Next time, we'll cover a few more and go into more detail about installation procedures for some of these tools. Happy clustering.


This article was originally published in ClusterWorld Magazine. It has been updated and formatted for the web. If you want to read more about HPC clusters and Linux you may wish to visit Linux Magazine.

Dan Stanzione is currently the Director of High Performance Computing for the Ira A. Fulton School of Engineering at Arizona State University. He previously held appointments in the Parallel Architecture Research Lab at Clemson University and at the National Science Foundation.