Get ready. Software will get installed, configuration files will get edited, nodes will boot, lights will blink, messages will get passed, and we will have a cluster. Joy.

If you are following along from part one of this series, you should be chomping at the bit to get some software on the cluster. Not to worry, in this installment we will get those switch lights blinking. The entire cluster measures 40 inches (102 cm) high, 24 inches (61 cm) wide, and 18 inches (46 cm) deep, and is shown in Figure One below. (See part one for more and bigger pictures.) It is also quite mobile (check out the wheels!). There is a single power cord and room on top of the rack to place a monitor and keyboard (only if needed). The DVD reader/burner can accommodate vertical loading as well.

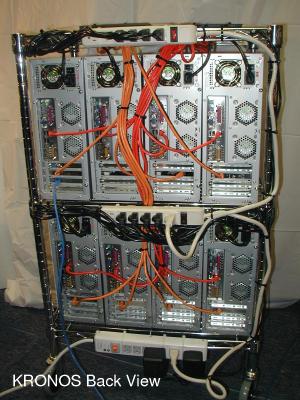

Although the front view has a certain clean look to it, the back of the cluster is where the wiring details can be seen. In Figure Two (below) you can see how most of the wiring (both network and electrical) was done. Clean wiring is possible, but it does take a little time, patience, and a big bag of "zip-ties." The white cords are the power-strips (three total), the black cords are the power cords for the nodes, the red cables are the Fast Ethernet cables, and the orange cables are the Gigabit Ethernet cables. The single blue cable is the local LAN connection. Note: the middle two nodes are missing their Gigabit NICs (they were DOA). The black bands around the cables are plastic 7 inch "zip" wire ties.

Don't worry if your cluster does not look exactly like the pictures. Customize it to your needs. After all, that is what clustering is all about.

In this installment, we are going to load up the software. This step will probably be the most challenging. Although we will provide step by step procedures, hardware problems (if any) usually show up at this point. Once we get past the initial software installation and start booting nodes, then we will have a common "baseline" from which to proceed. We need to start at the lowest level first and set up the BIOS.

BIOS Settings

There are a few BIOS settings that will help improve cluster functionality. The easiest way to set up the BIOS is to move from node to node with a monitor and keyboard. If you are lucky enough to have a KVM (Keyboard/Video/Mouse combiner) then you can attach it to the cluster. Note, however, that after installing the software you should only need to attach a monitor to the compute nodes when there is a problem.

When the node boots up, press F2 several times and enter the BIOS screen. Don't worry if the date and time are incorrect. We can set these from the command line once the nodes are up and running. Move to the Advanced menu item on the top of the screen. Move down and select the Chipset Configuration item and hit enter. You will see an option for Onboard VGA Share Memory. Set this to 32MB. This option reduces the shared video memory to the minimum value.

Next, use the Esc key to move back up to the main menu. Move to Power and select Restore on AC/Power Loss and set it to Power On. This setting will allow the node to be controlled by externally shutting the power off and on. Otherwise you may find yourself having to push eight buttons every time you want to power cycle the cluster. Next move down to the PCI Devices Power On option and select Enabled. In a future installment, we will show how to use the "poweroff" command and "wake-on-lan" to automatically control the power state of each node in the cluster.

Finally, move over to the boot menu. Move down to Boot From Network and select Enabled. Without this setting, the nodes will not be able to get their RAM disk image from the master node. (See Sidebar One). To exit, move to Exit and select Exit Saving Changes and the system will reboot.

Figure Two: Back View of Kronos

| Sidebar One: The Warewulf Cluster Distribution |

| In deciding which software would be best suited for a small personal cluster we chose the Warewulf distribution (see the Resources sidebar). Warewulf has many nice features that allow you to manage the cluster. We chose to use disk-less nodes to keep our cost low, but also to eliminate version skew and the excessive image copying required by many other cluster distributions. To keep things simple, Warewulf uses a small ramdisk (hard disk emulated in memory) on each compute node to hold the minimum number of files required to run the node. Once booted, NFS is available for mounting things like /home or /opt. Warewulf is based on building a Virtual Node File System (VNFS) on the master. Once you have this file system built, it is packaged and sent to the compute nodes when they boot up. The VNFS image can be made very small (30-40 MB) as it contains only what you need to run codes on the nodes. If you run a du -h on a compute node you will see something like the following:

The number of files (libraries and executables) needed to "just run binaries" on the compute nodes is surprisingly small. The advantage of this approach is that the nodes are managed from one place (the master node) and will not develop "hard drive personalities" that make administration difficult. In addition, nodes can be quickly rebooted with different kernels, libraries, etc. without having to wait for hard drive spin up, file system checks, or other overhead. We will talk more about the Warewulf concept in future installments of this series. Of course the VNFS "eats" into the available RAM on the compute nodes, but we believe that memory density will continue to increase and cost will continue to drop so that a 50-60MB RAM disk will become a small percentage of available memory. Note that we also give up 32 MB to the video system, but the same argument applies: RAM is cheap, and the incremental cost of adding 128 MB more RAM to cover what is lost to both the video and ramdisk is less than $20 per node. Finally, it is important to note that everything on the compute nodes is lost upon reboot. All the configuration aspects of the nodes are managed from the VNFS on the master node and not by editing configuration files on the compute nodes. Some users are surprised to find that vi is not installed on the compute nodes. |
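To put the memory argument from the sidebar into numbers, here is a minimal shell sketch. The 60 MB ramdisk and 32 MB video figures come from the text; the node memory sizes in the loop are assumptions chosen for illustration and are not the actual Kronos configuration. The function name ram_overhead_pct is our own.

```shell
#!/bin/sh
# Percentage of node RAM given up to the ramdisk plus shared video memory.
# Usage: ram_overhead_pct TOTAL_MB RAMDISK_MB VIDEO_MB
# Integer arithmetic only, so the result is rounded down.
ram_overhead_pct() {
    total_mb=$1
    ramdisk_mb=$2
    video_mb=$3
    echo $(( (ramdisk_mb + video_mb) * 100 / total_mb ))
}

# Hypothetical node memory sizes (assumptions, not the Kronos spec).
for total in 256 512 1024; do
    echo "${total} MB node: $(ram_overhead_pct "$total" 60 32)% used by ramdisk and video"
done
```

The share falls quickly as memory grows, which is the point the sidebar is making.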

The Base Installation

By itself Warewulf is not a complete cluster distribution. It requires an underlying Linux install to be useful. For this project, we have chosen to use the Red Hat Fedora Core 2 (FC2) distribution. This distribution is both widely available and widely documented. Finding help for FC2 should not be too difficult. We also started using FC2 when we began the project. Although FC4 is out, we thought it would be wise to stick with the version we have tested. There is also a bit of a chicken-and-egg problem with downloading and creating the FC2 DVD. If you have another computer with a standard CDROM, but no DVD burner, then you will have to make multiple CDROMs. No big deal, but you will need to wait until you get Fedora installed before you can use that new DVD burner. In any case, you may need to do some installation gymnastics to get FC2 installed. Also, we found growisofs to be a good tool for burning DVD images.

| Sidebar Two: Kronos has Arrived - Doug's Thoughts |

| From my experience working with cluster builders and users, the most difficult part is often figuring out what to name the cluster. After I got the value cluster on the wire shelves, an image from an old sci-fi B movie popped into my head. The movie was called KRONOS and it depicted a big cube type thing from outer space. Of course, it did what all things from outer space do when they land on earth -- start destroying everything in sight. I'm hoping the newly christened Kronos cluster will be a bit more tame. |

You can, of course, install FC2 just about any way you want (within reason). We chose to install it using the workstation configuration. There are a few customization points along the way where you will need to make some choices. If you are new to the process, you may wish to consult the Fedora website or some of the books on Fedora Core 2. We chose, after adding 512 MB swap partitions to each drive, to configure the remaining space as a RAID 1 partition (mirroring). If you prefer, you could use RAID 0 (striping) and get about 300 GBytes of storage. The RAID 1 partition gave about 151 GBytes of mirrored storage on the two drives.

When the installation asks about Ethernet connections, you should see three interfaces listed (eth0, eth1, eth2). The first interface, eth0, will be the local LAN connection and should be the additional 100BT NIC that was added to the master node. (Recall that the master node has three NICs: the on-board 100BT used for cluster administration, an Intel 1000BT NIC used for computing, and an Intel 100 NIC to connect to a LAN.) Configure eth0 for your LAN (DHCP or a static IP address). For eth2, which is a Fast Ethernet connection that we will use for booting the nodes, NFS, and administration, we used an IP of 10.0.0.253 with a subnet mask of 255.255.255.0. For eth1, which is the Gigabit Ethernet connection, we chose an IP of 10.1.0.253 with a subnet mask of 255.255.255.0. The hostname was, of course, kronos. We also entered the gateway as 192.168.1.1, but this really depends upon how your cluster is connected to the outside world.
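If you ever need to check or redo these settings after the install, Red Hat style systems keep them in per-interface files under /etc/sysconfig/network-scripts (ifcfg-eth1, ifcfg-eth2, and so on). The sketch below only prints what the two internal stanzas could look like for the addresses given above; it assumes static addressing, the helper name print_ifcfg is our own, and the files the installer actually writes may contain additional keys.

```shell
#!/bin/sh
# print_ifcfg DEVICE IPADDR NETMASK
# Prints a static-address interface stanza in the ifcfg key=value style.
# Output goes to stdout; no system files are touched.
print_ifcfg() {
    cat <<EOF
DEVICE=$1
BOOTPROTO=static
IPADDR=$2
NETMASK=$3
ONBOOT=yes
EOF
}

# Addresses from the text: eth2 is Fast Ethernet (boot, NFS, admin),
# eth1 is Gigabit Ethernet (computation).
print_ifcfg eth2 10.0.0.253 255.255.255.0
echo
print_ifcfg eth1 10.1.0.253 255.255.255.0
```

Comparing this output with the real files is a quick way to confirm that eth1 and eth2 ended up on the networks you intended.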

You will then be asked questions about the firewall. Make sure it is enabled and select the services that you want to allow to pass through (we chose http and ssh). In the "allow traffic from" window, click eth1 and eth2. The firewall will consider these secure networks and let everything pass. (These interfaces are the internal cluster networks.)

Once you finish up a few more questions (use grub as your boot loader), Fedora should be installing on your master node. Again, assistance with Fedora installations can be found in various places on the Internet or in some of the hefty reference books. Now it is time to take a look at Sidebar Three.

| Sidebar Three: Fedora will not boot, now what? |

|

If you are using the same hardware as described in part one, there is a good chance FC2 will install, but when you boot it will die. Welcome to the &*#$* edge. The problem has to do with the video card driver. Here is how to fix it for now. Later we will update things and get X running properly.

When the system boots and you see the Grub screen, press "e" (edit) and use the cursor keys to move to the line:

kernel /boot/vmlinuz-2.6.5-1.358 ro root=/dev/md0 rhgb quiet

Press "e" again to edit that line, then backspace over the text rhgb quiet and replace it with single. Hit Enter, then "b" for boot. The system will then come up in single user mode. Edit the file /etc/inittab and change the line id:5:initdefault: to id:3:initdefault:. Next, edit the file /etc/grub.conf and remove the rhgb quiet from the "kernel" line. Save the file and reboot. The system should now come up in text mode and you can get on with the installation. |
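The two file edits described in Sidebar Three can also be made with sed instead of a text editor. The following is a minimal sketch, not a tested recovery procedure: the function names are our own, and the example runs against scratch copies in a temporary directory rather than the real /etc/inittab and /etc/grub.conf. Both functions print the modified text to stdout so you can inspect it before replacing anything.

```shell
#!/bin/sh
# Rewrite the default runlevel from 5 (graphical) to 3 (text).
# Reads an inittab-style file and prints the modified text to stdout.
set_text_runlevel() {
    sed 's/^id:5:initdefault:/id:3:initdefault:/' "$1"
}

# Remove " rhgb quiet" from kernel lines only.
# Reads a grub.conf-style file and prints the modified text to stdout.
strip_rhgb_quiet() {
    sed '/^[[:space:]]*kernel/ s/ rhgb quiet//' "$1"
}

# Example run against scratch copies (an assumption made for safety; the
# sidebar edits /etc/inittab and /etc/grub.conf directly).
tmpdir=$(mktemp -d)
printf 'id:5:initdefault:\n' > "$tmpdir/inittab"
printf '\tkernel /boot/vmlinuz-2.6.5-1.358 ro root=/dev/md0 rhgb quiet\n' > "$tmpdir/grub.conf"
set_text_runlevel "$tmpdir/inittab"
strip_rhgb_quiet "$tmpdir/grub.conf"
rm -rf "$tmpdir"
```

Keeping a backup copy of each file before overwriting it is a sensible precaution, since a broken grub.conf means another trip through the Grub edit screen.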

Warewulf is a very good cluster system for a number of reasons. One of them is that it creates a ramdisk that installs a vastly cut down version of the master node operating system. (Note: a ramdisk is a chunk of memory that has a file system created on it as though it were a hard disk). The size of the ramdisk really depends upon what is needed on the compute nodes, but the one that gets created for this cluster is about 60 MB in size.

Warewulf creates a file system that holds what is to be installed on the compute nodes. This file system, called the Virtual Node File System (VNFS), contains all of the files that Warewulf needs to place in the ramdisk on the compute nodes. Once Warewulf is installed on the master node you will see the VNFS in the directory /vnfs/default.

To build the VNFS, Warewulf may need access to the rpms from FC2. You can either use the Internet to fetch these files or you can load them onto the master node. In the latter case, you will have to copy all of the rpm's from the CDROM's onto the master node hard drive. If you installed from a DVD then you can either use the single DVD or the Internet. In any case, yum will need access to an FC2 rpm repository.

If you used CDROM's to install FC2 and your machine is not on the Internet (with a broadband connection), you will need to copy the contents of all CDROM's to a directory on the hard drive. Remember if you are connected to the Internet, this step is not required. First make a local directory to store the rpm's.

% mkdir /usr/src/RPM_LOCAL

Then insert disk 1 into the drive and run the following commands.

% mount /mnt/cdrom
% cp /mnt/cdrom/Fedora/RPMS/*.rpm /usr/src/RPM_LOCAL
% umount /mnt/cdrom
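Since the same mount, copy, and unmount sequence is needed for every CDROM, it can be wrapped in a small loop. This is only a sketch under the assumptions in the text (the /mnt/cdrom mount point and the /usr/src/RPM_LOCAL target); the function names copy_rpms and copy_all_disks are our own, and copy_all_disks needs to be run as root with the discs on hand.

```shell
#!/bin/sh
# copy_rpms SRC_DIR DEST_DIR
# Copies every .rpm file from SRC_DIR into DEST_DIR and prints how many
# rpm files DEST_DIR holds afterwards.
copy_rpms() {
    src=$1
    dest=$2
    mkdir -p "$dest" || return 1
    cp "$src"/*.rpm "$dest"/ || return 1
    ls "$dest"/*.rpm | wc -l | tr -d ' '
}

# copy_all_disks COUNT
# Interactive wrapper: prompts for each disc, then mounts, copies, unmounts.
# Mount point and target directory are the ones used in the text.
copy_all_disks() {
    n=1
    while [ "$n" -le "$1" ]; do
        printf 'Insert disk %s and press Enter: ' "$n"
        read -r reply
        mount /mnt/cdrom || return 1
        copy_rpms /mnt/cdrom/Fedora/RPMS /usr/src/RPM_LOCAL
        umount /mnt/cdrom
        n=$((n + 1))
    done
}
```

With this loaded, copy_all_disks 4 would walk through the four discs, printing the running count of rpm's after each one.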

Once the machine is done copying the rpm's, remove disk 1. Repeat these last 3 commands for disks 2, 3, and 4. Then modify the file /etc/yum.conf to reflect the location of the yum repositories (we haven't created them yet, but we will). The two entries in the file, base and updates-released, should look like,

[base]
name=....
baseurl=file:///usr/src/RPM_LOCAL

[updates-released]
name=...
baseurl=file:///usr/src/RPM_LOCAL

If you have the master node connected to the Internet, then you don't need to copy everything onto the hard drive. The yum.conf file will have the appropriate entries to find the FC2 files.

If you don't have your master node on the network but installed from a DVD, then edit the file /etc/yum.conf so that the base and updates-released entries look like the following (make sure the DVD is mounted when running yum):

[base]
name=....
baseurl=file:///mnt/cdrom/Fedora/RPMS

[updates-released]
name=...
baseurl=file:///mnt/cdrom/Fedora/RPMS

If your master node is connected to the Internet, then you don't need to copy everything onto the hard drive or use the FC2 DVD. The /etc/yum.conf file will have the appropriate entries to find the FC2 files.

Capturing the Warewulf

Now let's go get the Warewulf files from the Internet. Go to the following URL in your web browser (or use wget if you cannot start a browser), and grab the following file:

warewulf-2.2.4-2.src.rpm

Then go to the following URL and grab the following file:

perl-Term-Screen-1.02-3.caos.src.rpm

Then go to the following URL and get the file,

dev-minimal-3.3.8-4.caos.i386.rpm

Put the two source rpm's (warewulf-2.2.4-1.src.rpm and perl-Term-Screen-1.02-2.caos.src.rpm) into the directory /usr/src/redhat/SRPMS. Put the file dev-minimal-3.3.8-4.caos.i386.rpm into the directory /usr/src/redhat/RPMS/i386. Note that the release numbers on the download site may differ from the ones used in the commands in this article; substitute the names of the files you actually downloaded.

Next, go to the directory, /usr/src/redhat/SRPMS and run the following commands,

% rpmbuild --rebuild warewulf-2.2.4-1.src.rpm
% rpmbuild --rebuild perl-Term-Screen-1.02-2.caos.src.rpm

These commands build the rpm's that Warewulf will need for installation.

We should be about ready to go, but first we need to create a yum repository for the Warewulf files so that we can easily install them. Run the following command as root,

% yum-arch -z /usr/src/redhat/RPMS

Next we need to let yum know where to find the new files. In the file /etc/yum.conf we need to tell yum where the Warewulf repository is located. After the entries for [updates-released] we will add an entry that looks like the following:

[warewulf]
name=warewulf
baseurl=file:///usr/src/redhat/RPMS/
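If you prefer not to edit the file by hand, the stanza can be appended from the shell. The sketch below is one way to do it, with a check so that running it twice does not add the section twice. The function name add_repo is our own, and it assumes the section header starts at the beginning of a line, as in the entries shown above.

```shell
#!/bin/sh
# add_repo CONF NAME BASEURL
# Appends a [NAME] stanza to CONF unless a section with that name exists.
add_repo() {
    conf=$1
    name=$2
    baseurl=$3
    if grep -q "^\[$name\]" "$conf" 2>/dev/null; then
        echo "[$name] already present in $conf"
        return 0
    fi
    printf '\n[%s]\nname=%s\nbaseurl=%s\n' "$name" "$name" "$baseurl" >> "$conf"
}
```

As root, the call for this article would be add_repo /etc/yum.conf warewulf file:///usr/src/redhat/RPMS/ (make a backup copy of yum.conf first).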

One last thing. If you copied the FC2 rpm's to your hard drive, you will have to build the yum repository for it. Just run the following command as root.

% yum-arch -z /usr/src/RPM_LOCAL

Yuming It Up

You will need to install the perl-Term-Screen rpm on the master node first. However, since we have configured the yum repositories, this is very easy to do. Just use yum to install it for you by entering:

% yum install perl-Term-Screen

At the end of a long list of header files, you should see something like (enter "y"):

I will do the following:
[install: perl-Term-Screen 1.02-2.redhat.noarch]
Is this ok [y/N]:

Now we're ready to install Warewulf. Enter the following:

% yum install warewulf

Like the perl-Term-Screen install above, yum will report what it is doing and then ask something similar to (enter "y"):

I will do the following:
[install: warewulf 2.2.4-1.i386]
I will install/upgrade these to satisfy the dependencies:
[deps: warewulf-tools 2.2.4-1.i386]
[deps: dhcp 2:3.0.1rc14-1.i386]
[deps: tftp-server 0.33-3.i386]

In this case, yum is installing some other package(s) on which Warewulf depends. For example, if you didn't install dhcp then it will be installed as part of installing Warewulf.

Almost There

We're almost there -- only a few more steps. The nodes in a Warewulf cluster can boot a couple of ways. We're going to have them boot using PXE boot. Consequently we need to use syslinux to get a PXE capable boot loader. However, this is really easy to do. Enter the following commands from the head node:

% cd /tmp
% wget http://www.kernel.org/pub/linux/utils/boot/syslinux/Old/syslinux-2.11.tar.gz
% tar xzvf syslinux-2.11.tar.gz
% cd syslinux-2.11

You will see a file called pxelinux.0. Copy this file to /tftpboot.

% cd /tmp/syslinux-2.11
% cp pxelinux.0 /tftpboot

Before we start up Warewulf we need to edit the configuration files to match our cluster. The configuration files are located in /etc/warewulf on the master node. The first file we will edit is /etc/warewulf/master.conf. At the top of the file you will need to change lines to reflect the following:

nodename = kronos
node-prefix = kronos

This tells Warewulf that the nodes will know the master node as kronos and the nodes will have the "kronos" prefix. By the way, feel free to name your cluster whatever you want. We also need to make these changes:

node number format = 02d
boot device = eth2
admin device = eth2
sharedfs device = eth2
cluster device = eth1

This tells Warewulf which interfaces are used for which functions and keeps the node numbers short (two digits, as in kronos00). Next we need to edit /etc/warewulf/vnfs.conf to reflect the following:

kernel modules = modules.dep sunrpc lockd nfs jbd ext3 mii crc32 e100 e1000 bonding sis900

In this case we are adding only the kernel modules that we might need.

The last file we need to edit is /etc/warewulf/nodes.conf. This file tells Warewulf how the nodes are configured. Edit the file to reflect these changes:

admin device = eth0
sharedfs device = eth0
cluster device = eth1
users = laytonjb, deadline

Again, we are telling Warewulf which interfaces handle what type of traffic. We also set the user names -- of course set this to your specific names. Finally "un-comment" (remove the "#") the following line:

# pxeboot = pxelinux.0

This change tells Warewulf that the nodes will be booting using PXE.

Really Almost There

Now we are ready to configure Warewulf on the head node. This task is actually very easy to do. Just run the following command.

% wwmaster.initiate --init

If everything is set up correctly, you should be able to just hit "Enter" each time the script asks for input. This script does a number of things. It starts the Warewulf daemon on the head node, it configures NFS exports for the compute nodes, it starts dhcp on the head node, and it creates the VNFS in /vnfs/default. It also creates a compressed image that is sent to each node. You will see a lot of yum output while the VNFS is building. When the script is finished, the image file is placed in /tftpboot/generic.img.gz.
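Before powering on any nodes, it is worth confirming that the two files a PXE boot depends on are actually in place: the pxelinux.0 loader copied earlier and the generic.img.gz image created by the script. The check below is a small sketch; the function name check_boot_files is our own and it only tests that the files exist and are not empty.

```shell
#!/bin/sh
# check_boot_files DIR
# Reports whether the PXE loader and the node image exist and are non-empty
# in DIR. Returns 0 only if both are present.
check_boot_files() {
    dir=$1
    status=0
    for f in pxelinux.0 generic.img.gz; do
        if [ -s "$dir/$f" ]; then
            echo "ok: $dir/$f"
        else
            echo "MISSING: $dir/$f"
            status=1
        fi
    done
    return $status
}
```

On the master node you would run check_boot_files /tftpboot and expect two "ok" lines.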

Kronos Lives

At this point none of the worker nodes should be powered up. Another very nice thing about Warewulf is that you can just turn on the nodes and Warewulf will find the nodes, configure DHCP, boot the nodes using PXE, and send the ramdisk image.

The command to start the process is wwnode.add. Just run the following command on the master node.

% wwnode.add --scan

This command will keep running forever, so when we're done, you will need to use Ctrl-C to stop it.

Once the command starts, Warewulf is listening for DHCP requests from new nodes coming up on the cluster network. So, after you run the command, power up the first node and watch the screen. When Warewulf finds a node, it will look something like the following.

Scanning for new nodes..................
ADDING: '00:0b:6a:3d:71:cd' to nodes.conf as [kronos00]
Re-writing /etc/dhcpd.conf
Shutting down dhcpd: [ OK ]
Starting dhcpd: [ OK ]
Shutting down warewulfd: [ OK ]
Starting warewulfd: [ OK ]
Scanning for new nodes.
ISPY: ':00:0b:6a:3d:71:cd' making DHCP request

Once this happens, open another window and try pinging the node.

% ping kronos00

When the ping is successful, the node should be up and ready. Try using rsh to log in to the node to see what the node looks like.

% rsh kronos00

Be sure to run the df -h command to see how much space is used by the ramdisk (it's not much at all). Repeat this process for the second node and be sure to wait for the node to come up. Then boot the third node, and so on. After the last node is done, stop wwnode.add by entering a Ctrl-C. It may also be useful/interesting to place a monitor on the compute nodes as they boot.

You will only have to run wwnode.add --scan once for the cluster. Call it "node discovery" if you like. After this, you will only have to turn the node on and it will boot via PXE and get its image from the master node.
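Once all the nodes have been discovered, pinging them one at a time gets tedious. The sketch below generates the node names from the prefix and the two-digit number format set in master.conf (the first node was added as kronos00 above) and pings each one once. The function names are our own, and the node count you pass in is up to you; it is not taken from the Warewulf configuration.

```shell
#!/bin/sh
# node_names PREFIX COUNT
# Prints COUNT node names, numbered from 00 with two digits, matching
# "node number format = 02d" (kronos00, kronos01, ...).
node_names() {
    i=0
    while [ "$i" -lt "$2" ]; do
        printf '%s%02d\n' "$1" "$i"
        i=$((i + 1))
    done
}

# check_nodes PREFIX COUNT
# Sends one ping to each node and reports which ones answer.
check_nodes() {
    for node in $(node_names "$1" "$2"); do
        if ping -c 1 "$node" >/dev/null 2>&1; then
            echo "$node is up"
        else
            echo "$node did not answer"
        fi
    done
}
```

For example, check_nodes kronos 4 would test kronos00 through kronos03. A node that does not answer is a good candidate for the monitor-and-keyboard treatment.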

At this point, it may be best to check and make sure the warewulfd daemon is running. As root, enter /usr/sbin/warewulfd. If warewulfd is running it will let you know.

Next, to allow user access to the nodes, run wwnode.sync. And finally, enter wwtop. You should see all your nodes listed in a nice "top like" display. Let us know if you have any questions. We will pick up from there in part three.

| Sidebar Resource: |

| Warewulf |

| Fedora |

Douglas Eadline is the