Ever hear of an exabyte?

In our second part of File Systems O'Plenty we take a look at NAS, Distributed File Systems, AoE, iSCSI, and Parallel File Systems. In case you missed part one you can find it here. In this part, we will also point out why IO is important in HPC clustering. Many a CPU cycle is wasted waiting for that data block. Read on to learn how to feed your data appetite.

[Editor's note: This article represents the second in a series on cluster file systems (See Part One: The Basics, Taxonomy and NFS and Part Three: Object Based Storage). As the technology continues to change, Jeff has done his best to "snap shot" the state of the art. If products are missing or specifications are out of date, then please contact Jeff or myself. In addition, because the article is in three parts, we will continue the figure numbering from part one. Personally, I want to stress the magnitude and difficulty of this undertaking and thank Jeff for the amount of work he put into this series.]

NAS

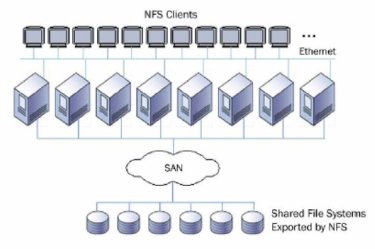

In the previous section I talked about NFS including its history and recent developments, but it is just a protocol and a file system. Vendors have taken NFS and put storage behind it to create a device that is commonly called a NAS (Network Attached Storage) device. Figure Five shows you the basic layout of a NAS device.

The compute nodes at the top connect to a NAS Head, sometimes called a filer head, through some type of network. The filer head is attached to some kind of storage. The storage is in the device itself or attached to the filer head, possibly through SAN storage or through some other network storage device such as iSCSI or HyperSCSI. The filer head exports the storage at the file level typically using NFS or in some cases, CIFS (usually for compatibility with desktops). There are a large number of manufacturers of NAS systems. For example,

You can even create your own NAS system using a simple PC running Linux and stuffing it full of disks. Many people have done this before (I have done it in my own home system). You can use software RAID or hardware RAID with the disks and then use whatever network connection you want to connect the NAS to the network. Using NFS or CIFS (Samba) allows you to export the file system and mount it on the clients. The only cost is the hardware and your time since NFS is part of Linux and is a standard (the fact that it's a standard is not to be understated). However, home NAS boxes may not be the best solution for larger clusters.

While NAS devices are "easy" in the sense that they have been around for a long time and are easy to understand, easy to manage, and easy to debug, they are not without their problems and limitations. The first limitation is that a simple single NAS can only scale to a moderate number of clients (tens or at most hundreds of clients) before performance becomes so poor that you're better off using smoke signals or drums to send data. Second, the scalability in terms of capacity is somewhat limited. In the case of a small NAS box (or one that you put together), you are limited by how many drives you can stuff into a single box. If you use something like LVM, then you can add storage at a later date and expand the file system to use it. With 1 TB hard drives here, you should be able to create a very large file system on the NAS. For example, it should be fairly easy to put about 8-10 drives in a single case, which will give you on the order of 8-10 TB of raw storage (at last! Room enough for my K.C. and the Sunshine Band MP3s!). But heed the warning about data corruption that I previously discussed.

To help improve NAS devices, NAS vendors have developed large, robust storage systems within their offerings. They have taken the basic NAS concept and put some serious hardware behind it. They have support for large file systems. They have also developed hardware that helps improve the storage performance behind the filer head, allowing more clients to use the storage. For the most part NAS vendors use a single GigE or multiple GigE lines from the filer head to the client network. This limited network connection to the clients restricts the amount of data they can push from the filer head to the clients. With the advent of NFS-RDMA, as discussed previously, it should be possible to push even more data from a single filer head (Mellanox is saying that you can get 1.3 GB/s reads and 600 MB/s writes from a single NFS/RDMA filer head), but you have to have an InfiniBand network to the clients. Despite the increased IO performance from NFS-RDMA, there is still a limit on the total amount of data that can be sent/received from a single filer head.

In addition to being limited in performance scaling, NAS devices are also limited in capacity scaling. If you run out of capacity (space), NAS devices can be expanded as long as you are below the capacity limit of the device. But if you hit that limit then you cannot expand the capacity. In that case, you have to add another NAS device that is separate from the current NAS device(s). But the file systems on all of the NAS devices cannot be combined into a single file system. So now you have these "islands" of storage. What do you do?

One option is to arrange the NFS mounts on the client nodes to make it "appear" as a single file system. In essence you have to "nest" the file systems. But these tricks can be risky and if one NAS device isn't available you typically cannot get to the other devices. This can also lead to a load imbalance.

The other option is to split the load across several filer heads. You can split the load based on the number of users, the activity level of the users, the number of jobs, the amount of storage, or just about any combination you can think of to help load balance the storage. Even if you plan ahead when you get your first NAS device, you will find that how you planned to split the load will still result in a load imbalance. The fundamental reason is that the load, the number of users, the size of the projects, etc. will change over time, and once you have planned the load distribution, you can't easily change it. At this point one option is to take down the storage, move the data around to better load balance, and then bring the system back up. If the users don't surround your desk armed with pitchforks and torches, this process can work. However, you may have to do it fairly often depending upon how the load changes and how fast you can run.

Now that I've exposed the warts of NAS devices, the next logical question is when should you use them and when should you not. I'll give you my stock answer - it depends. You need to look at 3 things: (1) size of the cluster, (2) IO requirements of the application(s), and (3) capacity requirements. If the size of your cluster isn't too big so that the NAS device can support all of the clients, then you've satisfied the first requirement. I've seen clusters as large as several hundred nodes that can be effectively served by a NAS box.

The second requirement is the IO needs of your applications. You need to know how much IO your applications require for good performance (you can define "good" however you want). For example, if you need 20 MB/s from 20 nodes to maintain a certain level of performance then you need to make sure the NAS box can deliver this amount of IO throughput. The interesting thing is that I've seen people who have absolutely no idea how much IO their application needs to maintain a certain level of performance yet they will specify a certain amount of IO performance in their cluster. I have also seen people who think they know the IO requirements of their codes but they actually don't, and they also specify the amount of IO for their cluster. You would be surprised by the number of applications that don't need much IO or don't need the global IO that NAS devices provide. I highly recommend taking some time to test your applications and learn their IO requirements and patterns.

And finally, if you think your overall capacity requirements are below the maximum that the NAS devices offer, then it should work well. But be sure to estimate your storage capacity for the life of the machine (about 3 years). Also, remember to factor in the amount by which your storage needs increase every year.
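As a rough illustration of that capacity estimate, the sketch below compounds a yearly growth rate over the life of the machine. The starting capacity and the growth rate are made-up numbers, not figures from this article, and compound growth is itself an assumption; plug in whatever your own usage history suggests.

```python
def projected_capacity_tb(initial_tb, yearly_growth, years):
    """Capacity needed after `years`, assuming compound yearly growth.

    yearly_growth is a fraction (0.5 means storage needs grow 50% per year).
    """
    return initial_tb * (1.0 + yearly_growth) ** years


# Hypothetical example: 10 TB today, growing 50% per year, 3-year machine life.
needed = projected_capacity_tb(10.0, 0.5, 3)
print(f"Plan for about {needed:.1f} TB")
```

If the projected number is above the maximum capacity of the NAS device you are considering, that is a sign the third requirement is not satisfied.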

I hate to give you rules of thumb for all 3 requirements for NAS usage because as soon as I do, someone will come up with a counter example that proves me wrong. I have my own rules of thumb for a range of applications and so far they have worked well. But there are some application areas I don't know as well so I'm not sure if my rules will apply or not. I highly advise that you learn your applications and develop your own rules of thumb. When you do, please send me some email (address is at the end of the article) and tell me about your rules of thumb.

Before I finish with NAS devices, I want to give a short summary with some pros and cons for them.

- Pros:

- Easy to configure, manage (Plug and Play for the most part)

- Well understood (easy to debug)

- Client comes with every version of Linux

- Client is free

- Can be cost effective

- Provides enough IO for many applications

- May be enough capacity for your needs

- Cons:

- Limited aggregate performance

- Limited capacity scalability

- May not provide enough capacity

- Potential load imbalance (if you use multiple NAS devices)

- "Islands" of storage are created if you have multiple NAS devices

If it looks like a simple NAS box won't meet your requirements, the next section will present an approach to NAS that tries to improve the load imbalance problem and to help the scalability.

Clustered NAS

Since NAS boxes only have a single server (single filer head), Clustered NAS systems were developed to make NAS systems more scalable and to give them more performance. A Clustered NAS uses several filer heads instead of a single one. Typically either the filer heads are connected to storage via a private network or the storage may be directly attached to each filer head.

There are two primary architectures for Clustered NAS systems. In the first architecture, there are several filer heads that each have some storage assigned to them. A filer head cannot directly access data that belongs to another filer head, but all of the filer heads know which one has which data. When a client makes a data request, it comes into a filer head. The filer head determines where the data is located (which filer head). Then it contacts the filer head that owns the data using a private storage network. The filer head that owns the data retrieves the data and sends it over the private storage network to the originating filer head, which then sends the data to the client.

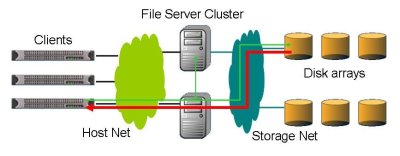

Figure Six illustrates this process of getting data to a client in a Clustered NAS environment.

The green line represents the data request from the client. It goes to the filer head that it has mounted. That filer head checks if the requested data is in its attached storage. In this case it is not, so it forwards the request to the filer head that owns the data. This filer head then retrieves the data and sends it back to the originating filer head (the red line). The originating filer head then sends the data back to the client. The process is the same whether the operation is a read or a write.
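The forwarding path can be sketched in a few lines of Python. This is only a toy model under simple assumptions: the class and function names are invented for illustration, the "private storage network" is just a method call, and the shared location map stands in for whatever metadata a real product keeps.

```python
class FilerHead:
    """Toy filer head for the forwarding model (names are illustrative)."""

    def __init__(self, name, location_map):
        self.name = name
        self.local_files = {}             # files stored behind this head
        self.location_map = location_map  # shared metadata: file -> owning head
        self.peers = {}                   # other heads on the private network

    def store(self, filename, data):
        self.local_files[filename] = data
        self.location_map[filename] = self.name

    def read(self, filename):
        """Handle a client request; returns (data, path the request took)."""
        owner = self.location_map[filename]
        if owner == self.name:
            return self.local_files[filename], [self.name]
        # Not local: forward over the private network to the owner,
        # then relay the reply back to the client ourselves.
        data, path = self.peers[owner].read(filename)
        return data, [self.name] + path


def build_cluster(names):
    """Create filer heads that share one location map and know each other."""
    location_map = {}
    heads = {n: FilerHead(n, location_map) for n in names}
    for head in heads.values():
        head.peers = {n: h for n, h in heads.items() if n != head.name}
    return heads


heads = build_cluster(["head1", "head2"])
heads["head2"].store("results.dat", b"simulation output")
# The client has head1 mounted, but head2 owns the file.
data, path = heads["head1"].read("results.dat")
print(path)
```

Note that the data always comes back through the head the client mounted, which is exactly why that head can become the bottleneck.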

This Clustered NAS architecture, sometimes called a forwarding model, was one of the first clustered NAS approaches. It was a fairly easy approach to develop since it's really several single head NAS devices that know about the data that are owned by each filer head. The metadata needs to be modified so that each filer head knows where the data is located. Basically an NFS data request is made from the originating filer head to the filer head that owns the requested data. The data is returned to the originating filer head which then forwards it to the requesting client. Figure Seven below illustrates this process from a file perspective (don't forget that NFS views data as files).

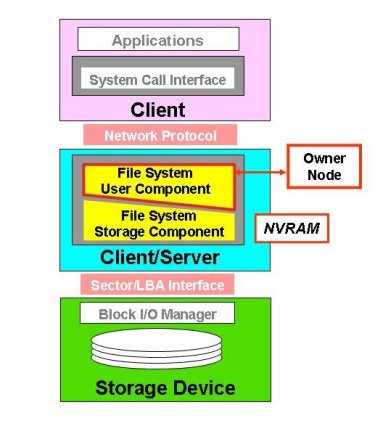

In Figure Seven, the client requests 3 files (the triangles). The files are placed on two different filer heads. So the data requests are fulfilled by two different filer heads. But, regardless, all of the data has to be sent through the originating filer head and could limit performance. Also notice that this is still an in-band data model, as shown in Figure Eight below.

Recall that in an in-band data model, the data has to flow through a single point including metadata. But in the forwarding model, when the client request gets to the user component of the file system layer the metadata is checked for the location of the data. Then the data request is sent to that filer head (called a node in Figure Eight). However, the stack is still an in-band model where all of the data has to flow through a single server.

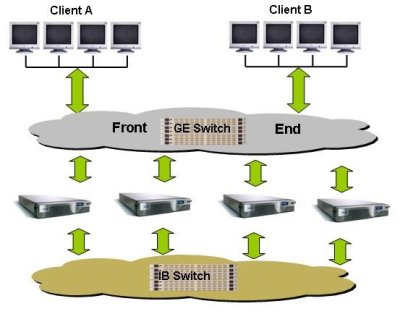

In a second architecture, sometimes called a Hybrid architecture, the filer heads are really gateways from the clients to a parallel file system. For these types of systems, there are filer heads (gateways) that communicate with the client using NFS over the client network but access the parallel file system on a private storage network. The gateways may or may not have storage attached to them depending upon the specifics of the system. Figure Nine below illustrates the data flow process for this architecture.

In this model, the client makes a data request that is sent to a file server node which is really a gateway node. The gateway node then gathers the data from the storage on behalf of the requesting client (this is shown as the green line in Figure Nine). Once all of the data is assembled for the client, it is sent back to the client from the originating gateway node (this is shown in red in Figure Nine). Contrast this figure with Figure Six and you can see how the hybrid model differs from the forwarding model.

The Hybrid Architecture of a Clustered NAS is a bit different from the forwarding architecture from the perspective of the storage as well. Figure Ten below shows how the data are stored and how it flows through the storage system.

The client requests the data from one of the file servers. The originating file server then gets the data from the storage, assembles it, and returns it to the requesting client. But there is a significant difference in how the data is stored in this architecture. The data is actually stored on storage hardware that is not necessarily assigned to one of the file servers. You can see this in Figure Ten. The data are distributed in pieces across the storage, unlike the forwarding model where the entire file was stored with one file server or another.

A benefit of the Hybrid architecture is that it allows the originating file server to retrieve the requested data in parallel, speeding the data retrieval operation, particularly compared to the Forwarding Architecture. But, the file servers still send data to the clients using NFS and most likely using GigE. So the client performance is still limited by their NFS performance.
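To make the contrast with the forwarding model concrete, here is a minimal sketch of a gateway that fetches pieces of a file from several storage servers in parallel and assembles them before replying. Everything in it is an assumption made for illustration: the round-robin placement, the tiny stripe size, and the use of Python lists as "storage servers" are not taken from any particular product.

```python
from concurrent.futures import ThreadPoolExecutor

STRIPE_SIZE = 4  # bytes per piece; tiny on purpose so the example is readable


def write_striped(data, storage_nodes):
    """Split data into pieces and place them round-robin across nodes.

    Returns the layout: an ordered list of (node index, piece index on node).
    """
    layout = []
    for offset in range(0, len(data), STRIPE_SIZE):
        node_idx = (offset // STRIPE_SIZE) % len(storage_nodes)
        storage_nodes[node_idx].append(data[offset:offset + STRIPE_SIZE])
        layout.append((node_idx, len(storage_nodes[node_idx]) - 1))
    return layout


def gateway_read(layout, storage_nodes):
    """Gateway fetches pieces in parallel, then assembles them in order."""
    def fetch(loc):
        node_idx, piece_idx = loc
        return storage_nodes[node_idx][piece_idx]

    with ThreadPoolExecutor(max_workers=len(storage_nodes)) as pool:
        pieces = list(pool.map(fetch, layout))  # map preserves input order
    return b"".join(pieces)


nodes = [[], [], []]  # three storage servers, each a list of pieces
layout = write_striped(b"parallel file system data", nodes)
assert gateway_read(layout, nodes) == b"parallel file system data"
```

The parallelism here lives entirely between the gateway and the storage. The client still receives the assembled result from one gateway over one connection.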

The Hybrid architecture has another benefit in that the file system capacity can be scaled fairly large by just adding storage servers to the storage network. In addition, you can gain aggregate performance by just adding more gateways to the file system (basically you are adding more file servers).

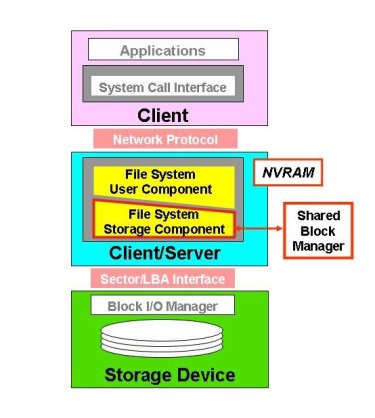

However, despite the ability to scale capacity and to scale aggregate performance there are a few difficulties. Figure Eleven below is the protocol stack for the hybrid model.

The data requests from the client come down the stack until they reach the File System Storage Component layer. Then the data request is sent through a shared block manager so that the data can be retrieved from the various storage devices. Then the data is sent back up the stack to the client.

From Figure Eleven you can see that the Hybrid architecture is still an in-band architecture with data that has to flow through a single server to the client. While the Hybrid Clustered NAS can scale performance and capacity there are still limits to performance. Each client only communicates with one server. Consequently, there is no ability to introduce parallelism between the client and the data. There is parallelism from the file server to the file system, but there is still a bottleneck from the file server to the client. Moreover, you are still using NFS to communicate between the client and the file server (gateway). This limits the possible performance to approximately 90-100 MB/s per client over GigE. However, if you have fewer gateways than clients, this number is reduced because you have multiple clients contacting a single gateway. For example, if you have 128 clients and only 12 gateways and the clients are all performing IO, then the best per-client bandwidth is approximately 9.4 MB/s. The only way to improve performance is to greatly increase the number of gateways, increasing costs. On the plus side, many applications don't need much IO performance so a low gateway/client ratio may work well enough.
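The 9.4 MB/s figure is just the aggregate gateway bandwidth divided by the number of clients. A small sketch of that arithmetic, assuming clients spread evenly across gateways and each gateway tops out at about 100 MB/s on its GigE link (the function name and the default are mine):

```python
def per_client_bandwidth(clients, gateways, gateway_mb_s=100.0):
    """Best-case MB/s per client when every client does IO at once.

    Assumes clients spread evenly over gateways and each gateway is
    limited by one GigE link (~100 MB/s, the upper figure from the text).
    """
    if clients <= gateways:
        return gateway_mb_s  # each client can have a gateway to itself
    return gateways * gateway_mb_s / clients


print(f"{per_client_bandwidth(128, 12):.1f} MB/s")  # prints 9.4 MB/s
```

Doubling the gateways to 24 only gets you to roughly 19 MB/s per client, which shows how quickly the gateway count (and cost) grows if your applications really need the bandwidth.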

There are some inherent difficulties in a hybrid architecture though. Most of them are due to the design decisions in a Hybrid Clustered NAS. For example, the storage layer must synchronize the block-level access among the gateways that share the file system. This requirement means that there will be a high level of traffic on the storage network and the gateways will have to spend a fair amount of time on the synchronization as there are a large number of blocks to handle. On a 500 GB device, there are about 1 billion 512-byte sectors. On 50 TB, there are about 100 billion sectors. On a 500 TB system there are about 1 trillion sectors. And one final difficulty is that the low-level interface imposes more overhead in a distributed system. For example, a write() system call involves several block-level IO operations because the storage is distributed and the metadata has to be updated globally. This can restrict write traffic.
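The sector counts above are easy to check. The sketch below assumes decimal units (1 GB = 10^9 bytes, 1 TB = 10^12 bytes), which is how disk capacities are usually quoted:

```python
SECTOR_BYTES = 512
GB = 10**9
TB = 10**12


def sector_count(capacity_bytes):
    """Number of whole 512-byte sectors in a given capacity."""
    return capacity_bytes // SECTOR_BYTES


for label, size in [("500 GB", 500 * GB), ("50 TB", 50 * TB), ("500 TB", 500 * TB)]:
    print(f"{label}: {sector_count(size):,} sectors")
```

The exact values come out slightly under the rounded figures in the text (for example, 976,562,500 sectors for 500 GB), but the order of magnitude is what matters for the synchronization burden.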

But on the bright side, there is a very "narrow" interface around block ownership and allocation. This interface is somewhat restricted but it's fairly easy to modify existing local file systems into a parallel file system or a Clustered NAS. For example XFS was used to create CXFS. Terrascale, now called Rapidscale, initially did this with ext3, and then XFS.

As with the simple NAS device, I want to summarize the Clustered NAS, whether it uses a Forwarding architecture or a Hybrid architecture, with some pros and cons:

- Pros:

- Usually a much more scalable file system than other NAS models

- Only one file server is used for the data flow (forwarding model could potentially use all of the file servers)

- Uses NFS as protocol between client and file server (gateway)

- Many applications don't need large amounts of IO for good performance (can use a low gateway/client ratio)

- Cons:

- Can have scalability problems (block allocation and write traffic)

- Load balancing problems

- Need a high gateway/client ratio for good performance

Now that we've explored the two types of Clustered NAS devices, let's explore some of the specific vendor offerings for both types.

Polyserve

Polyserve, which was recently bought by HP, is a clustered NAS vendor that has focused on HPC, web serving, and databases. Their file serving product for Linux, called Polyserve File Serving Utility, can have up to 16 file serving nodes with a file system (called the Polyserve Cluster File System - PSFS) behind them. The file system is typically built using SAN hardware. Polyserve's Clustered NAS is a symmetric design where each of the file serving nodes provides all services (metadata, client, and data server). It follows the hybrid design where a single file server acts as a gateway node for the client and gathers the data for the client. In essence they are gateway nodes from the client to the file system.

The layout is fairly simple. You have a number of file servers (up to 16) that are plugged into the client network using TCP connections. Then the file servers are plugged into a SAN storage network so they have shared storage. Figure Twelve below shows the basic layout:

Typically, the file servers are plugged into the network using a single GigE connection and then connected into a Fibre Channel (FC) network for the storage. Polyserve supports Qlogic and Emulex HBA's for the FC network and supports RHEL and SLES on the file servers.

To achieve the symmetric architecture, PSFS uses a symmetric distributed lock manager. A lock manager is important for ensuring that the cache across all of the servers is kept consistent. In PSFS, all of the servers participate in coordinating locks across the storage and the load is balanced as best as possible to reduce bottlenecks. If a node fails, the locks that it was coordinating are moved to other servers. You can also add servers (maximum total is 16) while the file system is in use with no data migration required. The same is true for adding storage.

The clients access Polyserve using NFS. In tests, Polyserve says that they get about 120-123 MB/s per server. So for 16 servers you could get up to about 2 GB/s in aggregate.

Polyserve also has its own volume manager called Cluster Volume Manager (CVM). It allows storage volumes to span multiple arrays and stripe data across multiple LUNs or arrays. PSFS supports 128 LUNs per volume, 216 volumes per cluster and 512 file systems per cluster with each file system being limited to 128 TB. So you can scale PSFS to a very large file system. You can also add storage to existing LUNs to grow the storage space within the limits mentioned previously.

Isilon IQ

Isilon is also a clustered NAS solution that can be used for HPC and clusters. Isilon IQ was designed so that you can scale the capacity (amount of storage) and the aggregate performance independently of one another. So if you don't need as much performance but need more storage, Isilon IQ allows you to scale capacity. You can also add more gateways to the cluster to provide a larger aggregate throughput (but you are ultimately limited in performance to one gateway server per client).

Since Isilon is a cluster NAS solution, it is organized in a fashion similar to Polyserve. The Isilon file system, called OneFS, is a parallel file system that allows you to add storage servers to expand the file system. But even as you add storage servers, you still have a single name space. But in contrast to Polyserve, you can add as many gateway nodes (file servers) as you want or need. Figure Thirteen is a diagram of an Isilon layout:

The Isilon servers are called "IQ". The model number of the IQ product is determined by the size of the disks in the server. Each IQ box has 12 hot-swappable disks. Currently the IQ 1920i uses 160 GB SATA drives (1.92 TB usable space), the IQ 3000i uses 250 GB SATA drives (3.00 TB usable space), the IQ 6000i uses 500 GB drives (6.00 TB usable space), and the IQ 9000i uses 750 GB drives (9.00 TB of usable space). Each IQ unit is 2U high and has two outgoing GigE connections (only one is used and the other one is a failover), and 2 InfiniBand connections to the file system network (again, only one is used and the other is a failover connection). Each server has 4 GB of memory and 512 MB of NVRAM (Non-volatile RAM).

Isilon uses InfiniBand for the storage network to get the best performance out of OneFS, but the clients still connect to Isilon using a single GigE connection and NFS. Isilon also has two GigE connections and 2 IB connections so if one link fails, another can take over.

You have to start with a minimum of 3 servers (for robustness reasons). So the smallest Isilon setup you can have is three IQ 1920i servers for a total of 5.7 TB of usable space. You can add as many servers as you want to increase capacity and to increase performance. You can expand the IQ 6000i and the 9000i by attaching SAS storage units to the server. This feature allows you to scale capacity without having to scale performance.

Isilon also has something they call an accelerator. This box is similar to the storage boxes, but it does not contain any storage. But it does allow you to get more gateways to the file system. So by adding accelerators, you can scale the aggregate performance without having to add capacity.

When data is written to the Isilon storage, the data is striped across multiple servers, allowing for increased performance on the file system side of the gateways. Isilon also has support for N+1, N+2, N+3, N+4, and mirroring data for improved resiliency on the file system. N+1 is what is normally RAID-5 and N+2 is RAID-6. So N+3 and N+4 mean that you can tolerate the loss of 3 or 4 drives respectively without the loss of data. However, these schemes require much more computational power and take up much more of the available capacity.
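To get a feel for the capacity cost of the higher protection levels, here is a small sketch of the usable fraction in a generic N+M scheme. It assumes a protection group of N data units plus M protection units; the group width of 8 is a hypothetical choice for illustration, and the article does not describe how Isilon actually sizes its stripes.

```python
def usable_fraction(data_units, protection_units):
    """Fraction of raw capacity left for data in an N+M scheme."""
    return data_units / (data_units + protection_units)


def mirrored_fraction(copies=2):
    """Fraction of raw capacity left for data when keeping full copies."""
    return 1.0 / copies


# Hypothetical group of 8 data units with increasing protection.
for m in (1, 2, 3, 4):
    print(f"8+{m}: {usable_fraction(8, m):.0%} usable, survives {m} failures")
print(f"mirror: {mirrored_fraction():.0%} usable")
```

Under these assumptions, each extra level of protection costs a few more percent of raw capacity, while mirroring costs half.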

Isilon IQ also comes with tools to monitor and configure the file system. This includes tools for replication of data, snapshots, NFS failover, migration tools, and quotas.

Other Distributed File Systems

We've talked about NFS, NAS devices, and Clustered NAS devices under the classification of Distributed File System. But there are other Distributed File Systems that bear mentioning for clusters. They are a bit different than what I've presented so far, but they can be very useful for clusters as well.

AFS

As I mentioned in the previous sections, the current form of NFS has some limitations. One of these is scalability. A single NFS server can't support too many clients at the same time without performance grinding to a halt. And before NFSv4, NFS had some security concerns. As an alternative, the Andrew File System (AFS) was created to allow thousands of clients to share data in a secure manner.

History of AFS:

The Andrew File System or AFS grew out of the Andrew Project that was a joint project between Carnegie Mellon University and IBM. The focus of the project was to develop new techniques for file systems, particularly in the area of scalability and security. While it was something of an academic project, AFS became a commercial product from the Transarc Corporation. Eventually IBM bought Transarc and offered and supported AFS. Recently IBM forked the source of AFS and made it available with an open-source license for the community to develop. This version is called OpenAFS.

AFS is a true distributed file system in that the data is distributed across all of the AFS servers. When a client needs a file, the whole or part is sent from a server and cached on the client. Users appear to get local performance when accessing the file more than once. AFS keeps track of who is accessing or changing the file and each client is notified if the file has been changed.

More about AFS:

The fundamental unit in AFS is called a cell. On a client, AFS appears in a file tree under /afs. Under this file tree, you will see directories that correspond to AFS cells. Each cell is composed of servers that are grouped together. So for instance, if you had two cells, cell1 and cell2, you would see them on the clients as /afs/cell1 and /afs/cell2.

AFS is somewhat similar to NFS in that it is a client-server architecture so you can access the same data on any AFS client, but it is designed to be more scalable and more secure than NFS. Rather than being limited to a single server as NFS is, AFS is distributed so you can have multiple AFS servers to help spread the load throughout the cluster. To add more space, you can easily add more servers and the cells will appear under /afs, which makes the file system appear as a single name space. This is more difficult, if not impossible, to do with NFS.

In general, the primary strengths of AFS are:

- caching

- security

- scalability

The first strength of AFS is that the clients cache data (either on a local hard disk or in memory). When a file is requested by a client, the request is satisfied by a server and placed in the local cache of the client (again - either memory or local hard drive space). Any data access after that is handled by the local cache. Consequently, file access can be much quicker than NFS. When the file is closed, the modified parts of the file are copied back to the server. AFS also watches over the details of how the data is updated in the client's cache so that if another client wants to access the same data, this new client has the latest and greatest version of the data.

However, this process does require a mechanism for ensuring file consistency across the file system, particularly cache consistency. Cache consistency is maintained by the server coordinating any changes and notifying the clients that are using the file. This process can cause a slowdown with shared file writes so AFS is not a good candidate for shared database access and may have problems with MPI-IO to shared files. However, if a server goes down, then other clients can access the data by contacting a client that has cached the data. But while the server is down, the cached data cannot be updated (but it is readable). The caching feature of AFS allows it to scale better than NFS as the number of clients is increased.
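The caching and notification behavior described above can be modeled with a toy server and client. This is a simplified sketch, not the AFS protocol: the class and method names are invented, whole files are cached rather than parts, and the "notification" is a direct method call from server to client.

```python
class Server:
    """Toy file server that remembers which clients cache each file."""

    def __init__(self):
        self.files = {}
        self.callbacks = {}  # filename -> set of clients caching it

    def fetch(self, client, name):
        self.callbacks.setdefault(name, set()).add(client)
        return self.files[name]

    def store(self, writer, name, data):
        self.files[name] = data
        # Notify every other client holding a cached copy.
        for client in self.callbacks.get(name, set()) - {writer}:
            client.invalidate(name)
        self.callbacks[name] = {writer}


class Client:
    """Toy client with a local cache; counts trips to the server."""

    def __init__(self, server):
        self.server = server
        self.cache = {}
        self.server_fetches = 0

    def read(self, name):
        if name not in self.cache:
            self.cache[name] = self.server.fetch(self, name)
            self.server_fetches += 1
        return self.cache[name]

    def write_and_close(self, name, data):
        # Changes go back to the server when the file is closed.
        self.cache[name] = data
        self.server.store(self, name, data)

    def invalidate(self, name):
        self.cache.pop(name, None)


server = Server()
server.files["input.dat"] = b"v1"
a, b = Client(server), Client(server)
a.read("input.dat")
a.read("input.dat")                      # second read is served from a's cache
b.write_and_close("input.dat", b"v2")    # server invalidates a's cached copy
assert a.read("input.dat") == b"v2"      # a has to go back to the server
```

Repeated reads cost nothing on the server, which is where the scalability comes from, while every shared write triggers notifications, which is why shared-file writes are slow.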

Security was also one of the design tenets of AFS. Kerberos is used to authenticate users. Consequently, passwords are encrypted and are no longer visible. Moreover, AFS uses Access Control Lists (ACLs) so that users can even be restricted to their own directories.

One of the major objectives of AFS was to greatly improve scalability compared to other network file systems such as NFS. There have been reports of deployments with up to 200 clients per server. However, AFS experts recommend about 50 clients per server. But one of the most significant scalability features of AFS is that if you need more servers, you can add them to an active file system. This is something that NFS cannot do.

Another feature of AFS is the concept of a volume. A volume is AFS-speak for a tree of files, subdirectories, and AFS mount points (links to other AFS volumes). These volumes are created by the administrator and are used by the system users without regard for where the file is physically located. This is a really useful feature of AFS that allows the administrator to move volumes around, for example to another server or another disk location, without the need to notify users. It can even be done while the volumes are being used. This means you don't have to take the file system off-line to adjust volumes or add servers (you can't do this with NFS).

Currently you have to run AFS over an IP network because it uses UDP as the network protocol. UDP is a lossy protocol and can lead to problems on congested networks. On the OpenAFS roadmap for high performance computing (HPC), the developers of AFS are looking to add support for TCP. This will improve the performance of AFS and should also make for a more robust file system.

More information on AFS can be found at OpenAFS.

iSCSI

With the advent of high-speed networks and faster processors, the ability to centralize storage and allocate it to various machines on the network has become standard practice for many sites. SAN systems use this approach but typically use expensive and proprietary Fibre Channel (FC) networks and in some cases proprietary storage media. An open initiative to replace the FC network with common IP based networks and common storage media was begun in response to SANs. This initiative, called iSCSI (internet SCSI), was developed by the Internet Engineering Task Force (IETF).

While iSCSI is not strictly a file system, I believe it is important enough to include in this survey. The simple reason is that it is an industry standard and it is very flexible.

iSCSI encapsulates SCSI commands in TCP (Transmission Control Protocol) packets and sends them to the target computer over IP (Internet Protocol) on an Ethernet network. The system then processes the TCP/IP packet and processes the SCSI commands contained in the packet. Since SCSI is bi-directional, any results or data in response to the original request are passed back to the originating system. Thus a system can access storage over the network using standard SCSI commands. In fact, the client computer (called an initiator) does not even need a hard drive in it at all and can access storage space on the target computer, which has the hard drives, using iSCSI. The storage space actually appears as though it's physically attached to the client (via a block device) and a file system can be built on it.

The overall basic process for iSCSI is fairly simple. Assume that a user or an application on the initiator (client) makes a request of the iSCSI storage space. The operating system creates the corresponding SCSI commands, then iSCSI encapsulates them, perhaps encrypting them, and puts a header on the packet. It then sends them over the IP network to the target. The target decrypts the packet (if encrypted) and then separates out the SCSI commands. The SCSI commands are then sent to the SCSI device and any results of the command are returned to the original requester. Since IP networks can be lossy, with packets being dropped, resent, or delivered out of order, the iSCSI protocol has had to develop techniques to accommodate these and similar situations.
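The encapsulation step can be illustrated with a small sketch. The READ(10) command layout below follows the standard 10-byte SCSI command descriptor block, but the wrapper header (a task tag plus a length field) is a deliberately simplified stand-in invented for this example. It is not the real iSCSI packet format, and the function names are hypothetical.

```python
import struct

def build_read10_cdb(lba, num_blocks):
    """Build a 10-byte SCSI READ(10) command descriptor block."""
    # opcode 0x28, flags, 4-byte logical block address, group number,
    # 2-byte transfer length (in blocks), control byte
    return struct.pack(">BBIBHB", 0x28, 0, lba, 0, num_blocks, 0)

def encapsulate(cdb, task_tag):
    """Wrap a CDB in a toy header: 4-byte task tag + 2-byte CDB length.

    NOTE: a simplified illustration, not the real iSCSI header layout.
    """
    return struct.pack(">IH", task_tag, len(cdb)) + cdb

def decapsulate(packet):
    """Target side: strip the toy header and recover the SCSI command."""
    task_tag, length = struct.unpack(">IH", packet[:6])
    return task_tag, packet[6:6 + length]

# Initiator side: build the command and wrap it for the network.
cdb = build_read10_cdb(lba=2048, num_blocks=8)
packet = encapsulate(cdb, task_tag=1)

# Target side: unwrap and hand the command to the SCSI layer.
tag, recovered = decapsulate(packet)
```

The point of the sketch is only that the SCSI command travels unchanged inside another packet, which is why the remote storage looks like a locally attached SCSI device to the initiator.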

There are several desirable aspects to iSCSI. First, no new hardware is required by either the initiator (client) or the target (server). The same physical disks, network cards, and network can be used for an iSCSI network. Consequently the start-up costs are much less than for a SAN. Second, iSCSI can be used over wide area networks (WANs) that span multiple routers, whereas SANs are limited in distance by their configuration. Plus, IP networks are much cheaper than FC networks. Also, theoretically, since iSCSI is a standard protocol, you can mix and match initiators and targets across various operating systems.

There are several Linux iSCSI projects. The most prominent is an iSCSI initiator that was developed by Cisco and is available as open-source. There are patches for 2.4 and 2.6 kernels, although the 2.6 kernel series has iSCSI already in it. Many Linux distributions ship with the initiator already in the kernel. An iSCSI target package is also available, but only for 2.4 kernels (this package is sometimes called the Ardistech target package). It allows Linux machines to be used as targets for iSCSI initiators. There is also a project that was originally developed by Intel and is available as open-source.

A fork of the Ardistech iSCSI target package was made with an eye towards porting it to the Linux 2.6 kernel and adding features to it (the original Ardistech iSCSI target package has not been developed for some time). Then this project was combined with the iSCSI initiator project to develop a combined initiator and target package for Linux. This package is under very active development and fully supports the Linux 2.6 kernel series. Finally, the most active package is the iSCSI Enterprise Target project.

There is a very good HOWTO on how to use the Cisco initiator and the Ardistech target package in Linux. There is also an article on how to use iSCSI as the root disk for nodes in a cluster. This could be used to boot diskless compute nodes and provide them with an operating system located on the network.

iSCSI is so flexible that you can think of many different ways to use it in a cluster. A simple way would be to use a few nodes with disks within a cluster as targets for the rest of the compute nodes in the cluster, which are the initiators. The compute nodes can even be made diskless. Parts of the disk subsystem on each target node would be allocated to a compute node. A separate storage network can be utilized to increase the throughput of iSCSI. The compute node can then format and mount the disk as though it were a local disk. This architecture allows the storage to be concentrated in a few nodes for easier management.

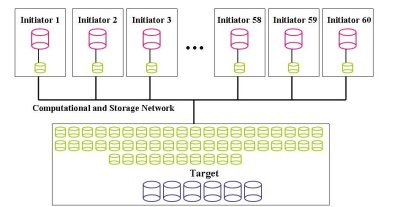

Let's look at some other examples. In the first one, let's assume we have a single target machine with six 500 GB SATA drives. In Figure Fourteen the target node is at the bottom (remember that the targets are the data servers) and the SATA drives are shown in blue.

Also, let's assume that each initiator node gets 50 GB of storage. This configuration means that the target node will export 60 block devices that are 50 GB partitions of the six drives (six drives at 500 GB each is 3,000 GB, or 60 partitions of 50 GB). In Figure Fourteen these are shown in green in the target node. Let's also assume that the block devices are exposed over a TCP network (at least GigE) that is shared with the computational traffic. This means that we can have up to 60 initiator nodes where each initiator uses one block device from the target node. Each initiator node then creates a file system on its particular block device. In Figure Fourteen the block devices for the initiator are shown in green and the resulting local storage with a file system is shown in red. This is a basic configuration in that there is really no resiliency included in the design. However, it does give you the most usage of the disks in the target node.
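The sizing arithmetic for this basic configuration is easy to check with a few lines of Python. This is only a back-of-the-envelope sketch using the nominal drive sizes from the example; the function name is made up for illustration.

```python
def block_devices(num_drives, drive_gb, device_gb):
    """Return (devices per drive, total devices) for equal-sized partitions."""
    per_drive = drive_gb // device_gb
    return per_drive, per_drive * num_drives

# Six 500 GB drives carved into 50 GB block devices.
per_drive, total = block_devices(num_drives=6, drive_gb=500, device_gb=50)
print(per_drive, total)
```

Each drive yields 10 block devices, so the six drives support 60 initiators in total.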

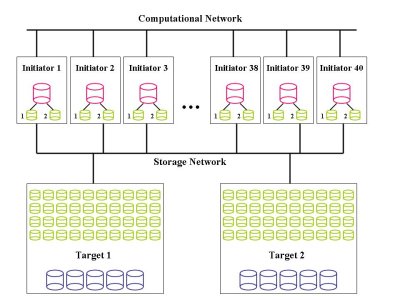

We can modify the basic configuration of Figure Fourteen to add some performance or some resiliency. Figure Fifteen is the same basic configuration as Figure Fourteen but the drive configuration on the target node is different. In this case we can take a pair of disks and combine them using software RAID. For performance we would combine them with RAID-0 (simple striping) and for resiliency we could combine them with software RAID-1. Figure Fifteen illustrates the drives being combined with RAID-0.

We use RAID-0 (striping) on pairs of disks, resulting in 1 TB of usable space on each pair. Using RAID-0 also gives us some extra performance. Then you break each pair into 20 block devices that each have 50 GB of space. This can be done using lvm. With six drives (three pairs), this also gives you 60 block devices, one for each initiator.
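As a rough sketch of what this looks like on the target node, the following Python builds (but does not run) the mdadm and LVM commands for the layout. The device names (/dev/sdb through /dev/sdg), the volume group names, and the logical volume names are all hypothetical. The sizes are the nominal ones from the example; real drives and LVM metadata leave less usable space, so the numbers would need adjusting on actual hardware.

```python
def raid0_lvm_commands(drives, devices_per_pair=20, device_gb=50):
    """Return the shell commands for RAID-0 pairs split into logical volumes."""
    commands = []
    # Group the drives two at a time; each pair becomes one md device.
    pairs = [drives[i:i + 2] for i in range(0, len(drives), 2)]
    for n, (first, second) in enumerate(pairs):
        md = f"/dev/md{n}"
        vg = f"vg_iscsi{n}"  # hypothetical volume group name
        commands.append(
            f"mdadm --create {md} --level=0 --raid-devices=2 {first} {second}"
        )
        commands.append(f"pvcreate {md}")
        commands.append(f"vgcreate {vg} {md}")
        # Carve the striped pair into equal block devices for the initiators.
        for k in range(devices_per_pair):
            commands.append(f"lvcreate -L {device_gb}G -n lun{k:02d} {vg}")
    return commands

drives = [f"/dev/sd{letter}" for letter in "bcdefg"]  # six assumed drives
commands = raid0_lvm_commands(drives)
for command in commands:
    print(command)
```

The output contains one mdadm command per pair of drives and 20 lvcreate commands per pair. Each resulting logical volume would then be exported to one initiator by whichever iSCSI target package is in use.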

This approach gives you the same number of block devices as the basic approach but you have some extra speed in this case because the underlying disks are using RAID-0. But it also means that the target node has to manage three md devices, each carved into 20 block devices. However, in the interest of performance we have sacrificed resiliency.

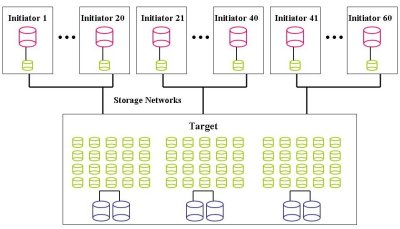

Let's look at one last example in Figure Sixteen that adds some resiliency and performance to a basic configuration. Let's assume we have two iSCSI targets called Target 1 and Target 2 that only provide iSCSI storage space and are not used as compute nodes. Each target node has five 500 GB drives configured in RAID-5 that are shown in blue in Figure Sixteen. It would probably be best to use hardware RAID for this configuration but you could use software RAID. For each target, it works out to roughly 2 TB of storage space (a total of 4 TB).

Each target node uses lvm to break the 2 TB storage space into 40 block devices of 50 GB each. In Figure Sixteen the block devices are shown in green. Remember that with iSCSI you don't put a file system on these block devices. These block devices are exposed to the initiators over a dedicated storage network as shown in Figure Sixteen. From each target machine there are 40 block devices that come from a RAID-5 group.

Each initiator (client) uses a block device from Target 1 and a block device from Target 2. Then we can use software RAID (md) to combine the two block devices using RAID-1, resulting in the device, /dev/md0. Then /dev/md0 can be formatted with a file system. This is shown in red in Figure Sixteen.
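The capacity numbers for this mirrored configuration can be worked through in a short sketch. The function names are invented for this example, and the figures are the nominal ones from the text rather than measured capacities.

```python
def raid5_usable_gb(num_drives, drive_gb):
    """RAID-5 gives up one drive's worth of space to parity."""
    return (num_drives - 1) * drive_gb

def mirrored_layout(drives_per_target, drive_gb, device_gb):
    """Two RAID-5 targets, with each initiator mirroring one device from each."""
    usable = raid5_usable_gb(drives_per_target, drive_gb)
    devices_per_target = usable // device_gb
    # Each initiator consumes one block device on each of the two targets.
    initiators = devices_per_target
    return {
        "usable_gb_per_target": usable,
        "devices_per_target": devices_per_target,
        "initiators": initiators,
        "mirrored_gb_total": initiators * device_gb,
        "raw_gb": 2 * drives_per_target * drive_gb,
    }

layout = mirrored_layout(drives_per_target=5, drive_gb=500, device_gb=50)
print(layout)
```

With these inputs the two targets hold 5,000 GB of raw disk and serve 40 initiators with 50 GB each, or 2,000 GB of mirrored space. That ratio is the price of being able to lose a disk or a whole target node.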

There are several points of resiliency in this configuration. First, the target nodes are using RAID-5 which allows them to lose one disk without any loss of data. If it is well thought out, it could even use hot-swap drives. Second, there is a separate network for iSCSI. While not likely, if the computational network goes down the storage network could still be functioning (although this might be pointless). The storage network also allows increased speed because you are not combining storage and computational traffic on a single network. Third, the initiator nodes are using RAID-1 for their block devices. This means that if a block device fails for some reason, there is no loss of data. Fourth, the initiator nodes are using two block devices from separate nodes. Therefore, an entire target node could go down without loss of data in the initiators.

So you can see that iSCSI gives you a lot of flexibility in configurations. You may pay a performance penalty because of the network, but if you need flexibility, iSCSI offers it. I have even designed some RAID-5 configurations so that each initiator mounts a block device from at least 5 different machines that are used in a md RAID-5 block device. This gives RAID-5 on the target machine and on the initiator. Since md supports RAID-6, it is also possible to form RAID-6 block devices in initiators.

There are lots of other options. You can take two target machines and use HA (High Availability) between them to tolerate the loss of a node without losing any data or up-time. You can also use multiple GigE networks (GigE cards are cheap) and run different block devices across each one. This adds resiliency and performance. Adding resiliency and performance can raise the cost of the storage system, but you get something in return for the money.

Hopefully from these few examples, you can see that there are lots of options for creating iSCSI storage systems. You can actually spend an afternoon designing iSCSI systems, determining prices, resiliency, and performance and still not scratch the surface of the options. So you can see that using iSCSI offers a tremendous amount of flexibility for providing cluster storage.

AoE

A close cousin to iSCSI and HyperSCSI is AoE (ATA over Ethernet). It was developed by CoRaid for creating and deploying inexpensive SAN systems using ATA or SATA drives and Ethernet. Like HyperSCSI, AoE uses its own packet that sits on top of the Ethernet layer. And like iSCSI and HyperSCSI it encapsulates hard drive commands and transmits them over an Ethernet network. But the specification for AoE is only 8 pages long, while the specification for iSCSI is 257 pages. The difference arises because AoE uses ATA commands and not SCSI commands.

AoE was developed quite quickly using a simple design. An AoE driver was added to the Linux kernel very rapidly. In this drive for simplicity, it was decided to use a different packet design than TCP. This improves performance, but it makes the packets unroutable over WANs. But since most SANs don't use WANs, this isn't too much of a problem. Moreover, clusters don't use WANs so this is not an issue.

AoE is unusual in that it uses a MAC address to identify both the source and destination of the packets. Remember that AoE uses packets defined just above the Ethernet layer. So this means that the MAC address approach can only work inside a single Ethernet broadcast domain. It also means that the checks of TCP can't be used. So AoE has had to develop its own flow control techniques. It uses cyclic redundancy checks to ensure that the packets arrive intact.
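To make the "just above the Ethernet layer" point concrete, here is a minimal sketch of the front of an AoE frame: a plain Ethernet header carrying the AoE EtherType, followed by a short AoE header. The field layout (version and flags, error, shelf, slot, command, tag) is written from memory of the published AoE specification and should be treated as an assumption to check against that document. The MAC addresses and the helper names are made up.

```python
import struct

AOE_ETHERTYPE = 0x88A2  # EtherType registered for ATA over Ethernet

def mac_bytes(mac):
    """Convert 'aa:bb:cc:dd:ee:ff' into six raw bytes."""
    return bytes(int(part, 16) for part in mac.split(":"))

def aoe_frame_header(dst_mac, src_mac, shelf, slot, command, tag):
    """Build the Ethernet header plus an (assumed) 10-byte AoE header."""
    # Ethernet: destination MAC, source MAC, EtherType. No IP, no TCP.
    ethernet = mac_bytes(dst_mac) + mac_bytes(src_mac) + struct.pack(">H", AOE_ETHERTYPE)
    ver_flags = 1 << 4  # protocol version 1 in the high nibble, no flags set
    # Assumed layout: ver/flags, error, shelf (major), slot (minor), command, tag
    aoe = struct.pack(">BBHBBI", ver_flags, 0, shelf, slot, command, tag)
    return ethernet + aoe

header = aoe_frame_header(
    dst_mac="00:11:22:33:44:55",  # hypothetical storage shelf
    src_mac="66:77:88:99:aa:bb",  # hypothetical compute node
    shelf=1, slot=0, command=0, tag=42,
)
```

Because the only addresses in the frame are MAC addresses, there is nothing for a router to act on, which is why AoE traffic stays inside one Ethernet broadcast domain.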

CoRaid has written drivers for AoE for a variety of platforms. They have drivers for Linux (part of the 2.6 Linux kernel), FreeBSD, Mac OS X, Solaris, and God help us all, Windows. So it's pretty easy to use AoE with a variety of operating systems.

CoRaid has developed a set of products that use AoE to provide block devices to nodes in a very similar manner to iSCSI. They have a 3U rack enclosure that has up to 15 slots for SATA I or SATA II drives. It also has 2 GigE connections to connect the storage to the cluster network. It has a RAID controller inside the unit for the drives and has redundant hot-swap power supplies. With the two GigE links, the aggregate performance of a single box is about 200 MB/s. They also have other form factors including a 1U with 4 drives and a tower with 15 drive slots. There is a fairly new box that has either a 10GigE link or 6 GigE links with performance of about 500 MB/s. They also have a 4U device that has 15 drive slots, but also has a small NAS/CIFS server built in to make a simple NAS/CIFS box.

dCache

dCache is an open-source project for a file system to store and retrieve large amounts of data. In a sense it's somewhat similar to AFS, but its focus is different. The focus of dCache is to store huge amounts of data, such as the data from a physics experiment, on servers that are distributed throughout a cluster or a Grid. It is not necessarily focused on high-speed parallel access to the data, but rather, it is focused on storing large amounts of data efficiently and quickly, and giving access to the data through typical methods (e.g. POSIX commands).

dCache is a virtual file system that allows the files to be accessed using most of the typical access methods. It splits the name space of the file system and where the data is actually located. The name space is managed by a database, usually PostgreSQL. If an application or a client requests access to the data, then the response, the data, is handled by the nfs2 protocol. It can also respond using various ftp filename operations. Similar to AFS, dCache allows the files to exist on any of the various dCache servers, which are referred to as pools. dCache also has the capability of accessing data of a Tertiary Storage Manager which could be used with a HSM system. dCache handles all necessary data transfer between the servers and optionally between the external storage manager and the cache itself.

dCache has a native protocol called dCAP that allows regular file access functionality. dCache also includes a C language client implementation of the POSIX functions open, read, write, seek, stat, and close, as well as standard file system name space operations (available for Solaris, Linux, Irix64, and Windows). This library can be linked against client applications or it may be preloaded to override the file system IO. The library also supports pluggable security modules. Currently, dCache comes with GssApi, which is a Kerberos module, and ssl.

The library also performs all the actions needed to survive a network or pool failure. Given that the name space and the data are decoupled, this task is much easier than it could have been. dCache also keeps several copies around in the pools, so this also helps in surviving a data server crash or a network crash.

As mentioned before, dCache can keep several copies of a file in the file system. This capability is one of several data management capabilities in dCache. For example, dCache allows you to define where you might like to put data among the pools. Normally, dCache distributes the data around the pools in a load-balancing sense. But if you have a preference about where you want certain files you can specify that. As an example, you may want certain types of data or certain groups of data files to be closer to a group working on them. Another way to use what is termed a pool attraction model is to use the hard drives in dCache to accept data from an experiment and then move it into the Tertiary Storage System (tape).

Coupled with the pool attraction model is the idea of load balancing. This module monitors file system metrics such as the number of active data transfers and the age of the least recently used file for each pool. With this information, it can select the most appropriate pool. It can even decide when and if to replicate a file.

The Replica Manager Module within dCache makes sure there are at least N copies of a file distributed over different pool nodes. It also makes sure there are no more than M copies. In addition to making data access quicker, the Replica Manager can also help in data configuration if you need to shut down storage servers without affecting the system availability or to overcome node or disk failures.
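The "at least N, no more than M" rule can be sketched in a few lines. This is only an illustration of the policy as described above, not dCache's actual implementation; the function name and the returned action labels are invented for the example.

```python
def replica_action(current_copies, min_copies, max_copies):
    """Decide what to do for one file, given how many pools hold a copy."""
    if current_copies < min_copies:
        # Too few copies: replicate onto pools that do not hold the file yet.
        return ("replicate", min_copies - current_copies)
    if current_copies > max_copies:
        # Too many copies: free space by removing the surplus.
        return ("remove", current_copies - max_copies)
    return ("ok", 0)

# A file with N=2, M=3 that has lost a pool, is healthy, or is over-replicated.
print(replica_action(1, min_copies=2, max_copies=3))
print(replica_action(2, min_copies=2, max_copies=3))
print(replica_action(5, min_copies=2, max_copies=3))
```

Draining a storage server for maintenance then amounts to treating its copies as missing and letting the same rule bring every affected file back up to N copies on the remaining pools.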

So far dCache has mostly found acceptance in the high-energy physics community. dCache is in use at FNAL (Fermilab), DESY, Brookhaven, CERN, and SDSC, to name but a few. These labs all handle tremendous amounts of data from science experiments, and dCache was developed by these labs to allow the efficient migration of data from these experiments to the physicists that need to examine the data. Currently, dCache has been stressed up to several hundred Terabytes and several hundred data servers.

Parallel File Systems

"Parallel File Systems - what do they look like?." OK, this isn't Real Genius but parallel file systems are a hotly discussed technology for clusters. They can provide high amounts of IO for clusters -- if that's what you truly need. I'm constantly surprised at how many people don't know how IO influences the performance of their codes. They can also provide a centralized file system for your clusters and in many cases you can link parallel file systems from geographically distinct sites so you can access data from other sites as though it was local. Centralized file systems can also ease a management burden and also improve the scalability of your cluster storage. You can also use them for diskless compute nodes by providing a reasonable high performance file system for storage and scratch space.

Parallel File Systems are distinguished from Distributed File Systems because the clients contact multiple storage devices instead of a single device or a gateway. An easy explanation of the difference is that with Clustered NAS devices, the client contacts a single gateway. This gateway contacts the various storage devices and assembles the data to be sent back to the client. A Parallel File System allows the client to directly contact the storage devices to get the data. This can greatly improve the performance by allowing parallel access to the data, using more spindles, and possibly moving the metadata manager out of the middle of every file transaction.

There are lots of ways a parallel file system can be implemented. I'm going to categorize the parallel file systems into two groups. The first group uses more traditional methods in the file system such as block based or even file based schemes. And, being traditional is not a bad thing by any stretch. The second group is the next generation in parallel file systems -- object based file systems. We'll start the discussion of parallel file systems by covering the traditional approach then move on to object based systems. The first traditional parallel file system we'll cover is perhaps the oldest and arguably the most mature parallel file system, GPFS.

GPFS

Until recently, GPFS (General Parallel File System) was available only on AIX systems, but several years ago IBM ported it to Linux systems. Initially it was available only on IBM hardware, but in about 2005 IBM made GPFS available for OEMs so that it's now available for non-IBM hardware. The only OEM I'm aware of that used it was Linux Networx.

GPFS is a high-speed, parallel, distributed file system. In typical HPC deployments it is configured similarly to other parallel file systems. There are nodes within the cluster that are designated as IO nodes. These IO nodes have some type of storage attached to them whether it is direct attached storage (DAS) or some type of SAN (Storage Area Network) storage. (Note: There are many variations on this theme where you can combine various types of storage.) Then the file system is mounted by the clients, and for any IO the clients contact the IO nodes, typically using a special driver, for high performance access to the data.

GPFS achieves high-performance by striping data across multiple disks on multiple storage devices. As data is written to GPFS, it stripes successive blocks of each file across successive disks. But it makes no assumptions about the striping pattern. There are 3 striping patterns it uses:

Round Robin: A block is written to each LUN in the file system before the first LUN is written to again. The initial order of LUNs is random, but that same order is used for subsequent blocks.

Random: A block is written to a random LUN using a uniform random distribution with the exception that no two replicas can be in the same failure group.

Balanced Random: A block is written to a random LUN using a weighted random distribution. The weighting comes from the size of the LUN.
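The three patterns can be sketched as small placement functions that return, for each block of a file, the LUN it would land on. This is a simplified illustration under stated assumptions: the function names are invented, replicas and failure groups are ignored, and a seeded random generator stands in for whatever GPFS uses internally.

```python
import random

def round_robin(luns, num_blocks, rng):
    """Random initial LUN order, then the same order repeated for later blocks."""
    order = list(luns)
    rng.shuffle(order)
    return [order[i % len(order)] for i in range(num_blocks)]

def uniform_random(luns, num_blocks, rng):
    """Each block goes to a LUN picked with equal probability."""
    return [rng.choice(luns) for _ in range(num_blocks)]

def balanced_random(lun_sizes, num_blocks, rng):
    """Each block goes to a LUN picked with probability weighted by LUN size."""
    names = list(lun_sizes)
    weights = [lun_sizes[name] for name in names]
    return rng.choices(names, weights=weights, k=num_blocks)

rng = random.Random(0)  # seeded so the sketch is repeatable
luns = ["lun0", "lun1", "lun2"]
rr = round_robin(luns, 6, rng)
ur = uniform_random(luns, 6, rng)
br = balanced_random({"lun0": 500, "lun1": 500, "lun2": 1000}, 6, rng)
```

In the round robin result the first three placements cover every LUN exactly once and the next three repeat them in the same order. In the balanced case the 1,000 GB LUN is twice as likely to receive any given block as either 500 GB LUN.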

To further improve performance, GPFS uses client-side caching and deep prefetching such as read-ahead and write-behind. It recognizes standard access patterns such as sequential, reverse sequential, and random and will optimize IO accesses for these particular patterns. Furthermore GPFS can read or write large blocks of data in a single IO operation.

The amount of time GPFS has been in production has allowed it to develop several more features that can be used to help improve performance. When the file system is created you can choose the blocksize. Currently, 16KB, 64KB, 256KB, 512KB, 1MB, and 2MB block sizes are supported with 256KB being the most common. Using large block sizes helps improve performance when large data accesses are common. Small block sizes are used when small data accesses are common. But unfortunately, you cannot change the blocksize once a file system has been built without erasing the data.

To help with smaller file sizes, GPFS can subdivide the blocks into 32 sub-blocks. A block is the largest chunk of contiguous data that can be accessed. A sub-block is the smallest chunk of contiguous data that can be accessed. Sub-blocks are useful for files that are smaller than a block and are stored using the sub-blocks. This can help the performance of applications that use lots of small data files (e.g. Life Sciences applications). Moreover, this is very useful for file systems that have very large block sizes but still have to store some small files.
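A quick calculation shows why sub-blocks matter for small files. The sketch below assumes that a file's data is simply rounded up to a whole number of sub-blocks and ignores metadata. That is a simplification for illustration, and the function names are invented.

```python
SUB_BLOCKS_PER_BLOCK = 32
KB = 1024

def sub_block_size(block_size):
    """Smallest allocatable chunk for a given file system block size."""
    return block_size // SUB_BLOCKS_PER_BLOCK

def allocated_bytes(file_size, block_size):
    """Space used if a file is rounded up to whole sub-blocks (simplified)."""
    sub = sub_block_size(block_size)
    sub_blocks_needed = -(-file_size // sub)  # ceiling division
    return sub_blocks_needed * sub

# A 4 KB file on file systems with 256 KB and 1 MB block sizes.
small_fs = allocated_bytes(4 * KB, 256 * KB)
large_fs = allocated_bytes(4 * KB, 1024 * KB)
print(small_fs // KB, large_fs // KB)
```

With a 1 MB block size the 4 KB file occupies one 32 KB sub-block instead of a full 1 MB block, and with the common 256 KB block size it occupies 8 KB.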

To create resiliency in the file system, GPFS uses distributed metadata so that there is neither a single point of failure nor a performance bottleneck. To get HA (High Availability) capabilities, GPFS can be configured using logging and replication. GPFS will log (journal) the metadata of the file system so in the event of a disk failure, the log can be replayed so that the file system can be brought to a consistent state. Replication can be done on both the data and the metadata to provide even more redundancy at the expense of less usable capacity. GPFS can also be configured for fail-over both at a disk level and at a server level. This means that if you lose a node GPFS will not lose access to data nor degrade performance unacceptably. As part of this it can transparently fail-over lock servers and other core GPFS functions, so that the system stays up and performance is at an acceptable level.

As mentioned earlier, GPFS has been in use probably longer than any other parallel file system. In the Linux world, there are GPFS clusters with over 2,400 nodes (clients). One aspect of GPFS that should be mentioned in this context is that GPFS is priced by the node for both IO nodes and clients. So you pay for licenses whether you buy more servers or buy more clients.

In the current version (3.x) GPFS only uses TCP as the transport protocol. In version 4.x it will also have the ability to use native IB protocols (presumably SDP). If NFS protocols are required, then the GPFS IO nodes can act as NFS gateways. Typically this is done for people who want to mount the GPFS file system on their desktop, but also allows you to use GPFS as a clustered NAS file system. Note this is a fairly easy and inexpensive way to get a clustered NAS. GPFS provides a very reliable back-end to the clustered NAS and you can add gateways for increased performance. Plus you only have to pay for the licenses used for IO nodes, not the clients. Moreover, GPFS can also use CIFS for Windows machines. Of course NFS and CIFS are a lot slower than the native GPFS, but it does give people access to the file system using these protocols.

While GPFS has more features and capabilities than I have space to write about, I want to mention two other somewhat unique features. GPFS has a feature called multi-cluster. This allows two different GPFS file systems to be connected over a network. As long as gateways on both file systems can "see" each other on a network, you can access data from both file systems as though they are local. This is a great feature for groups that may be located around the world to easily share data.

The last feature I want to mention is called the GPFS Open Source Portability Layer (GPL). GPFS comes with certain GPFS kernel modules built for a basic distribution. The portability layer allows these GPFS kernel modules to communicate with the Linux kernel. While some people may think this is a way to create a bridge from the GPL kernel to a non-GPL set of kernel modules, it actually serves a very useful purpose. Suppose there is a kernel security notice and you have to build a new kernel. If you are dependent upon a vendor for a new kernel, you may have to wait a while for them to produce a new kernel for you. During this time you are vulnerable to a security flaw. With the portability layer you can quickly rebuild the new modules for the kernel and reboot the system yourself.

Rapidscale

Rapidscale is a parallel file system from Rackable. Rapidscale was bought from what was Terrascale (actually Rackable purchased all of Terrascale). The Rapidscale approach aggregates storage nodes into a continuous file system (single name space). The resulting name space is mounted by each client node, which can then communicate directly with the data servers using a modified iSCSI protocol. Rapidscale's overall approach focuses on parallelization at the block device layer. In between the application and the storage devices sits the Rapidscale software that uses a slightly modified iSCSI to communicate with the storage servers. Rapidscale uses standard Linux file system tools such as md and lvm to create a storage pool for the clients.

To get improved performance, Rapidscale builds the name space by aggregating the storage into a single virtual disk using the Linux tool md to create a RAID-0 stripe. Using RAID-0 allows the data to be striped across the storage servers, resulting in improved IO performance. You can format the resulting file system using mkfs that is in Linux.

Remember that Rapidscale is using md to create a RAID-0 device across all of the storage devices. When a file system is created across the devices, all accesses, whether they be data or metadata are distributed across the devices. This also means that metadata is not a single location and that metadata operations are spread across the storage servers.

Rapidscale is a 64-bit file system that requires a Linux kernel of 2.6.9 or greater. Rapidscale is scalable to 18 exabytes (18 million TBs). There are two primary components to Rapidscale: a client side piece and a storage server piece. The client side piece is a kernel module that runs on the clients and the storage server piece runs on the storage servers. The client software uses an enhanced iSCSI initiator that includes cache coherency (more on that in a bit).

The storage server pieces take on the role of an iSCSI target. Rapidscale uses a modified iSCSI target to add all of the functionality necessary to handle all of the simultaneous data and metadata requests from the clients. It also makes sure that cache coherency is maintained on the client nodes and prioritizes and manages metadata operations to ensure that the file system is responsive (no long delays) and that it is consistent. This is handled by keeping the order of the operation requests from the clients.

Any change to a given block within the name space made by any client will be available on demand using the Rapidscale cache coherence mechanism. Remember that Rapidscale is distributed so that means that the file system cache, which is in memory, is now distributed as well. To make sure that each client receives the contents of a data block as it was when the request was made, Rapidscale has added what is called a cache invalidation protocol. This protocol allows the block layer of the file system to maintain a local cache of any data blocks that are being used through the block layer interface. The Rapidscale client software operates synchronously. This means that the local client cache is a write-through cache (i.e. as soon as an application writes, the client sends the blocks to the storage servers). But if a client has a read request, the local cache from the storage pool is always invalidated. This ensures the client is getting the latest data on the blocks requested.
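The behavior described here, write-through on writes and invalidate-then-fetch on reads, can be modeled with a toy example. This is not Rapidscale's protocol, which the text does not specify in detail. It is a minimal sketch with invented class names that shows why a second client always sees the latest block.

```python
class StorageServer:
    """Stands in for the storage pool: a dictionary of block number -> data."""
    def __init__(self):
        self.blocks = {}

    def write(self, block_no, data):
        self.blocks[block_no] = data

    def read(self, block_no):
        return self.blocks.get(block_no)

class Client:
    """A client with a local block cache that never trusts stale reads."""
    def __init__(self, server):
        self.server = server
        self.cache = {}

    def write(self, block_no, data):
        self.cache[block_no] = data
        self.server.write(block_no, data)  # write-through: sent immediately

    def read(self, block_no):
        self.cache.pop(block_no, None)     # invalidate any local copy first
        data = self.server.read(block_no)  # then fetch the current contents
        self.cache[block_no] = data
        return data

server = StorageServer()
node_a, node_b = Client(server), Client(server)

node_a.write(7, b"first")
seen_first = node_b.read(7)   # node_b caches block 7
node_a.write(7, b"second")
seen_second = node_b.read(7)  # cached copy is discarded, latest data returned
```

The cost of this simplicity is that every read goes to the storage servers, so the design trades some read performance for a guarantee that no client acts on stale data.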

To create a global file system, Rapidscale has taken a simple local file system and modified it slightly. The block device layer has been modified to force a re-validation of all block reads, ensuring that the reads get the current data. The block device layer has also been modified to provide a mechanism for maintaining consistency of the local file system control structures with their images on the storage server. When Rapidscale was Terrascale, it used ext2. Now, Rapidscale uses XFS, which Rackable calls zXFS.

Locking is also a very important part of a parallel file system. With a local file system, like XFS, ext3, reiserFS, etc., the locking is handled by local memory based data structures. These locks ensure that if one client (application) is writing to a block, that another client (application) that wants to read that block waits until the write operation is finished. This is also true for write operations that are waiting on a read operation to finish. But remember that Rapidscale is a distributed global file system and not a local file system on your desktop. Rapidscale handles locking by extending the local cache invalidation to the maintenance of POSIX locks across all clients. This is done similarly to what is done for the maintenance of other file system metadata information.

Fundamentally, Rapidscale uses byte range locking (as do many other file systems). It uses the Rapidscale cache invalidation protocol for locking. Any file system locks are propagated to each client using that file system. This means that if one client sets a lock, then all of the clients will also have the lock. The same is true that once the lock is removed, it is removed from all clients via the cache invalidation protocol.
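Byte range locking itself is straightforward to illustrate. The sketch below uses a single lock table to stand in for the lock state that, per the description above, every client ends up sharing. The class and function names are invented, only exclusive locks are modeled, and the propagation mechanism is left out entirely.

```python
def ranges_overlap(a_start, a_len, b_start, b_len):
    """True if byte ranges [a_start, a_start+a_len) and [b_start, b_start+b_len) intersect."""
    return a_start < b_start + b_len and b_start < a_start + a_len

class LockTable:
    """Exclusive byte-range locks on one file, as seen by all clients."""
    def __init__(self):
        self.locks = []  # list of (owner, start, length)

    def try_lock(self, owner, start, length):
        for other, other_start, other_len in self.locks:
            if other != owner and ranges_overlap(start, length, other_start, other_len):
                return False  # someone else holds part of this range
        self.locks.append((owner, start, length))
        return True

    def unlock(self, owner, start, length):
        self.locks.remove((owner, start, length))

table = LockTable()
got_a = table.try_lock("client_a", start=0, length=4096)
got_b = table.try_lock("client_b", start=2048, length=4096)       # overlaps client_a
got_c = table.try_lock("client_c", start=4096, length=4096)       # adjacent, no overlap
table.unlock("client_a", 0, 4096)
got_b_retry = table.try_lock("client_b", start=2048, length=4096)  # still overlaps client_c
```

Locking ranges rather than whole files is what lets several clients write to different parts of the same large file at once, which is a common access pattern in parallel applications.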

Rapidscale can also be configured in a High Availability (HA) configuration. Rapidscale has three options for creating parity groups. These parity groups can tolerate the loss of an entire storage server without losing data. Rapidscale can be configured as 2+1 (two active storage servers with one stand-by server), 4+1, and 8+1. If a storage server fails, a reconstruction takes place in the background using the stand-by server. The reconstruction proceeds at full disk speed.

You can also use gateways in Rapidscale to provide NFS and CIFS access. This is similar to other approaches. You just add servers to the storage network, mount the file system on the server, and then re-export the file system using NFS and/or CIFS. This creates a clustered NAS system. Since Rapidscale is a global parallel file system, you can increase the storage behind the NFS/CIFS gateways if more storage is needed. If you need more aggregate NFS/CIFS bandwidth then you can add more gateways. It's a very simple process.

Initially Rapidscale used only TCP networks, but now it can also use Myrinet networks and InfiniBand networks. This can be done between storage servers and also between clients and the storage servers. This allows Rapidscale to have a very high aggregate bandwidth. Also, since Rapidscale evenly distributes the file system across storage servers, aggregate performance increases linearly with the number of storage servers.

Performance of Rapidscale depends upon how many storage servers there are, how fast the storage is on each server, how many clients there are, and the data access pattern. But this brings up an important point -- designing or configuring a "balanced" storage system. In case you missed it, you can read about a balanced storage system in the NAS section above.

IBRIX

IBRIX offers a distributed file system that presents itself as a global name space to all the clients. The IBRIX Fusion product is a software only product that takes whatever data space you designate on whatever machines you choose and creates a global, parallel file system on them. This file system, or "name space," can then be mounted in a normal fashion. According to IBRIX, the file system can scale almost linearly with the number of data servers (also called IO servers or segment servers). It can scale up to 16 Petabytes (a Petabyte is about 1,000 Terabytes or about 1,000,000 Gigabytes). It can also achieve IO (Input/Output) speeds of up to 1 Terabyte/s (TB/s).

The IBRIX file system is composed of "segments." A segment is a repository for files and directories. A segment does not have to be a specific part of a directory tree and the segments don't have to be the same size. You can also split a directory across segments. This allows the segments to be organized however the file system needs them or according to policies set by the administrator or for performance. The file system can place files and directories in the segments irrespective of their locations within the name space. For example, a directory could be on one segment while the files within the directory could be spread across several segments. In fact, files can span multiple segments. This improves throughput because multiple segments can be accessed in parallel. The placement of files and directories is decided dynamically, when they are created, based on an allocation policy. This allocation policy is set by the administrator based on what they think the access patterns will be and any other criteria that will influence its function (e.g. file size, performance, ease of management). IBRIX Fusion also has a built-in Logical Volume Manager (LVM) and a segmented lock manager (for shared files).
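Here is a small Python sketch of what "placement by allocation policy" could look like. It is purely illustrative: the `Segment` and `Namespace` classes and the `most_free_space` policy are my own inventions, not IBRIX interfaces, and a real policy would weigh far more than free space.

```python
from dataclasses import dataclass, field


@dataclass
class Segment:
    name: str
    capacity: int                 # bytes the segment can hold
    used: int = 0
    entries: set = field(default_factory=set)

    @property
    def free(self) -> int:
        return self.capacity - self.used


def most_free_space(segments, path, size):
    """Example policy: pick the segment with the most free space."""
    candidates = [s for s in segments if s.free >= size]
    if not candidates:
        raise OSError("no segment has room for " + path)
    return max(candidates, key=lambda s: s.free)


class Namespace:
    """One global name space whose entries live on many segments."""

    def __init__(self, segments, policy=most_free_space):
        self.segments = segments
        self.policy = policy      # swapped by the administrator, not by users
        self.location = {}        # path -> name of the segment holding it

    def create(self, path, size):
        segment = self.policy(self.segments, path, size)
        segment.entries.add(path)
        segment.used += size
        self.location[path] = segment.name
        return segment.name
```

Note that the path plays no part in the decision in this policy, so files in the same directory can easily end up on different segments of different sizes, which is the property the paragraph above describes.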

The segments are managed by segment servers, with each segment having only one server. However, a segment server can manage more than one segment. Currently, IBRIX uses TCP or UDP as the communication protocol for the file system.

The clients mount the IBRIX file system in one of three ways: (1) using the IBRIX driver, (2) NFS, or (3) CIFS. Naturally the IBRIX driver knows about the segmented architecture and will take advantage of it to improve performance by routing data requests directly to the segment server(s) containing the data or metadata needed. The IBRIX driver is just a kernel module that requires no patches to the kernel itself. There are versions of the IBRIX driver for Linux and Windows. It can also accommodate mixed 64-bit and 32-bit environments (an advantage when uniting old and new clusters with a single file system).

If you use the NFS or CIFS protocol, the client mounts the file system from one of the segment servers, which acts as a gateway to the file system. You will most likely have more than one segment server, so this allows you to load balance NFS or CIFS. As with other parallel file systems, this allows IBRIX to behave as an infinitely scalable clustered NAS.

The segmented file system approach has some unique properties. For example the ownership of a segment can be migrated from one server to another while the file system is being actively used. You can also add segment servers while the file system is functioning, allowing segments to be spread out to improve performance (if you are using the IBRIX driver). In addition to adding segment servers to improve performance, you can add capacity by adding segments to current segment servers. Since IBRIX doesn't have a centralized lock manager or centralized metadata manager, when you add segment servers you automatically get better lock performance and metadata performance. This can improve the IOPS (IO Operations per Second) of the file system.

One feature of IBRIX that many people overlook is the ability to split a directory across more than one segment server. All parallel and distributed file systems typically have problems with small files and with directories that have lots of files in them (you would be surprised at how many organizations use benchmarks that produce thousands or even millions of files in a single directory). With IBRIX, since you can split a directory across various segment servers, it can handle a much higher file creation rate, deletion rate, access rate, etc. for directories with huge numbers of files. For instance, bioinformatics applications can produce a huge number of files in a single directory. So IBRIX has a distinct advantage in these situations.

The segmented approach as implemented in IBRIX Fusion has some resiliency features. For example, parts of the name space can stay active even though one or more segments might fail. To gain additional resiliency you can configure the segment servers with fail-over (High Availability - HA). IBRIX has a product called IBRIX Fusion HA that allows you to configure the segment servers to fail over to a standby server. These servers can be configured in an active-active configuration so that both servers are being used rather than having one server sit unused in standby mode.

IBRIX Fusion also features a snapshot tool called IBRIX FusionSnap. It allows snapshots of the file system to be taken and used for backups. You can also use it for recovering accidentally deleted files since you can store the snapshot and then recover the deleted files from it.

One other significant feature that IBRIX Fusion has is something called IBRIX FusionFileReplication. This feature allows administrators to set policies about creating replicas (copies) of files. The replicas are self-healing, which means that if one of the replicas is damaged, it can be repaired from the other replicas. Also, Fusion makes sure that the replicas are distributed across the segment servers to keep them from ending up on the same storage device or segment server. Presumably, the replicas can also be used to share data access. For example, if several clients want to read the same file, then Fusion splits the IO across the replicas.
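The three replica behaviors described above (distinct servers, self-healing, and shared reads) can be sketched in a few lines of Python. This is an illustration only: `ReplicatedFile` is a hypothetical class, the use of a SHA-256 checksum to spot a damaged replica is my assumption, and the round-robin read is just one way the "presumed" read sharing might work.

```python
import hashlib
from itertools import cycle


def digest(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


class ReplicatedFile:
    def __init__(self, data: bytes, servers, copies: int):
        if copies > len(servers):
            raise ValueError("need at least as many servers as replicas")
        self.checksum = digest(data)
        # One replica per distinct segment server, never two on the same one.
        self.replicas = {server: data for server in servers[:copies]}
        self._next_reader = cycle(sorted(self.replicas))

    def heal(self):
        """Overwrite every replica whose checksum is wrong with a good copy."""
        good = next(d for d in self.replicas.values() if digest(d) == self.checksum)
        repaired = []
        for server, data in list(self.replicas.items()):
            if digest(data) != self.checksum:
                self.replicas[server] = good
                repaired.append(server)
        return repaired

    def read(self):
        """Serve successive reads from successive replicas to spread the IO."""
        server = next(self._next_reader)
        return server, self.replicas[server]
```

With three replicas, three clients reading the same file would each be pointed at a different segment server in this sketch, instead of all hitting the same one.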

Recently, IBRIX and Mellanox announced a clustered NAS product that uses the IBRIX file system FusionFS and the Mellanox NFS/RDMA package. This is the first clustered NAS product to use native IB protocols from the client to the NFS gateways. This new product uses FusionFS as the backend storage for the clustered NAS, allowing an extremely large single name space. Then NFS gateways that use the NFS/RDMA SDK are added to the file system. This means that the clients access the IBRIX file system using IB links, greatly increasing the bandwidth. All other clustered NAS systems use TCP links to the backend file system, which limits their performance to each client (in the case of GigE, this means a maximum of about 100 MB/s). The IBRIX/Mellanox combination should allow a client to have a read bandwidth of about 1,400 MB/s and a write bandwidth of about 400 MB/s to a single NFS gateway. Don't forget that you can also add more NFS gateways to the system and load balance the clients across the gateways. This means you now have a clustered NAS product with a file system that can scale to Petabytes and gateways that can use native IB protocols for very high speed single client access, and you can add as many gateways as you want!

GlusterFS

GlusterFS is a parallel distributed file system that has been designed to scale to several Petabytes and is licensed with the GNU open-source license. The fundamental design philosophies of GlusterFS are performance (speed) and flexibility. One of the choices the designers made was to make GlusterFS what is sometimes termed a virtual file system. In many ways it is like PVFS. It stores data in files within a conventional file system such as ext3, xfs, etc., on the storage devices. But the design of GlusterFS goes even further and does not store metadata. This allows the file system to gain even more speed because no central, or even distributed, metadata servers are in the middle of the data transaction (between the clients and the data servers). Instead it relies on the metadata of the local file system in the IO nodes. This is true whether it is used as a distributed file system or as a parallel file system (more on that later).

Fundamentally GlusterFS is a distributed, network based parallel file system. It can use either TCP/IP or InfiniBand RDMA interconnects for transferring data. At the core of GlusterFS is what are called "translators." Translators are modules that define how the data is accessed and stored on the storage devices. The design of translators also allows you to "chain" or stack translators together to combine features to achieve the behavior that you want. The design of the file system, the use of translators, and the ability to stack them probably make GlusterFS the most powerful and flexible file system available.

The fundamental storage unit for GlusterFS is called a "storage brick". Basically a storage brick is a storage node with some storage that makes a file system available for storing data. Fundamentally, you take a node within the cluster and format a file system on a volume. Then GlusterFS can use this file system as directed by the configuration. This design allows you either to use every node in the cluster that has disks in it, including compute nodes, as a storage brick, or to use dedicated storage nodes that have a number of disks in them with RAID controllers.

As with most network file systems, GlusterFS has two parts, a client and a server. The GlusterFS Server, typically run on the storage bricks, allows you to export volumes over the network. The GlusterFS client mounts the exported volumes in a fashion dictated by the translators.

The concept of "translators" is very powerful because it allows you to take the exported volumes and mount them on a client in a very specific manner that you specify. You can have a specific set of clients mount specific volumes, creating something like a SAN. Or you can specify that the clients should mount the volumes to create a clustered, parallel, distributed file system. You can also control how the data is written to the volumes to include mirroring and striping. The translators can be broken into various families based on functionality:

- Performance translators

- Clustering translators including what are called scheduling translators that describe how the data is to be scheduled to the storage

- Debugging translators

- "Extra feature" translators

- Storage translators (currently only POSIX)

- Protocol translators (server or client)

- Encryption translators
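To show why stacking is such a handy idea, here is a toy Python model of a translator chain. It is not GlusterFS code (the real translators are loadable modules wired together by a configuration file); the class names are made up, a dictionary stands in for the POSIX storage translator, and only `read` and `write` are modeled.

```python
import codecs


class Translator:
    """Base class: pass every call to the translator below, unchanged."""

    def __init__(self, below=None):
        self.below = below

    def write(self, path, text):
        return self.below.write(path, text)

    def read(self, path):
        return self.below.read(path)


class Storage(Translator):
    """Bottom of the stack; a dict stands in for the storage translator."""

    def __init__(self):
        super().__init__()
        self.files = {}

    def write(self, path, text):
        self.files[path] = text

    def read(self, path):
        return self.files[path]


class Rot13(Translator):
    """Toy 'encryption' translator: scramble on write, unscramble on read."""

    def write(self, path, text):
        return self.below.write(path, codecs.encode(text, "rot_13"))

    def read(self, path):
        return codecs.decode(self.below.read(path), "rot_13")


class Trace(Translator):
    """Debugging translator: record each call, then pass it down."""

    def __init__(self, below):
        super().__init__(below)
        self.log = []

    def write(self, path, text):
        self.log.append(("write", path))
        return self.below.write(path, text)

    def read(self, path):
        self.log.append(("read", path))
        return self.below.read(path)


storage = Storage()
stack = Trace(Rot13(storage))   # trace on top of rot-13 on top of storage
stack.write("/notes.txt", "Hello")
```

Each layer only knows about the layer directly below it, so features can be added, removed, or reordered without touching the others. That is the property that makes the translator families above composable.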

Here is the list of current translators available for GlusterFS.

- Performance Translators

  - Read ahead Translator

  - Write behind Translator

  - Threaded IO Translator

  - IO-Cache translator

  - Stat Pre-fetch Translator

- Clustering Translators

  - Automatic File Replication (AFR) Translator

  - Stripe Translator

  - Unify Translator

  - Schedulers:

    - ALU (Adaptive Least Usage) Scheduler

    - NUFA (Non-Uniform File System Access) Scheduler

    - Random Scheduler

    - Round-robin Scheduler

- Debug Translators

  - trace

- Extra Feature Translators

  - Filter

  - Posix-locks

  - Trash

  - Fixed-id

- Storage Translators

  - POSIX

- Protocol Translators

  - Server

  - Client

- Encryption Translators

  - rot-13
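The schedulers in the list above decide which storage brick receives a new file. The Python sketch below shows three of them in a very simplified form. These are not the GlusterFS implementations: the function names are hypothetical, the "least used" version looks only at bytes used (the real ALU scheduler presumably weighs more than that), and NUFA is not modeled at all.

```python
import random
from itertools import cycle


def round_robin_scheduler(bricks):
    """Hand out bricks in a fixed rotating order."""
    order = cycle(bricks)
    return lambda: next(order)


def random_scheduler(bricks, seed=None):
    """Pick any brick with equal probability."""
    rng = random.Random(seed)
    choices = list(bricks)
    return lambda: rng.choice(choices)


def least_used_scheduler(usage):
    """Simplified stand-in for ALU: pick the brick with the fewest bytes used.

    `usage` maps brick name -> bytes used and is read at every call, so the
    choice follows the bricks as they fill up.
    """
    return lambda: min(usage, key=usage.get)


bricks = ["brick1", "brick2", "brick3"]
pick = round_robin_scheduler(bricks)
placement = {name: pick() for name in ["a.dat", "b.dat", "c.dat", "d.dat"]}
```

Round-robin and random are cheap and spread files evenly by count, while a usage-aware scheduler spreads them by how full each brick actually is.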

One of the initial design goals in GlusterFS was to avoid striping. While this may sound funny - typically striping is used to increase IO performance - the designers of GlusterFS felt that striping was not a good idea. If you look at the GlusterFS FAQ you will see a discussion about why striping was not initially included. According to the GlusterFS developers, the 3 reasons they think that striping is bad are:

- Increased Overhead (disk contention and performance decreases as load increases)

- Increased Complexity (increased file system complexity)

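- Increased Risk (Loss of a single node means loss of an entire file system)

Despite these concerns, a stripe translator was eventually added to GlusterFS. The reasons given for adding it are:

- Implementing the stripe translator was easy (a couple of days' work).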

- There are a few companies that want striping features in a file system as their applications use a single large file.

- If the file is big, a single server can get overloaded when the file is accessed. Striping can help spread the load.

- If you use the cluster/afr translator with cluster/stripe GlusterFS can provide high availability.

One of the most interesting features of GlusterFS is the fact that it doesn't use any metadata for the file system. Instead it relies solely on the metadata of the underlying file system. So how does it keep track of where files are located if the files are striped or if the files are written to various storage bricks (i.e. using the afr module)? I'm glad you asked that.

In the case of a clustered file system that also has the round-robin scheduler, when the client starts to write a file to the file system, GlusterFS creates a sub-directory with that file name. Then the current IO server is sent the first part of the file, the second server is sent the second part, and so on. Each file part is written to the sub-directory. When you include AFR (automatic file replication), which is highly recommended, you have copies of the file parts on other servers. That way, if an IO server goes down, you still have a copy of its file parts on another server, and AFR makes a copy of the missing piece and puts it in the proper location. Since the file system knows which was the first server for the file because of the sub-directory, it can recover any missing piece of the file. It seems somewhat complicated, but it definitely eliminates the need for a metadata manager.
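If that description is hard to picture, the following Python sketch walks through it with dictionaries standing in for the IO servers. It is a toy under several assumptions of mine, not the GlusterFS on-disk layout: parts are named `<file name>/<part number>`, each part is mirrored on the neighboring server, and healing simply re-copies any part that is down to a single copy.

```python
def split(data: bytes, chunk: int):
    return [data[i:i + chunk] for i in range(0, len(data), chunk)]


class Cluster:
    def __init__(self, n_servers: int):
        if n_servers < 2:
            raise ValueError("mirroring needs at least two servers")
        # Each server is a dict: "<file name>/<part number>" -> bytes.
        self.servers = [dict() for _ in range(n_servers)]

    def write(self, name: str, data: bytes, chunk: int, first: int = 0):
        n = len(self.servers)
        for k, part in enumerate(split(data, chunk)):
            key = f"{name}/{k}"            # part lives in a per-file sub-directory
            primary = (first + k) % n      # round-robin over the IO servers
            mirror = (primary + 1) % n     # AFR-style copy on the neighbor
            self.servers[primary][key] = part
            self.servers[mirror][key] = part

    def read(self, name: str) -> bytes:
        parts = {}
        for server in self.servers:
            for key, part in server.items():
                prefix, _, number = key.rpartition("/")
                if prefix == name:
                    parts[int(number)] = part
        return b"".join(parts[k] for k in sorted(parts))

    def fail(self, index: int):
        self.servers[index] = {}           # the server comes back empty

    def heal(self, index: int):
        """Re-copy every part that is down to one copy onto the healed server."""
        survivors = [s for i, s in enumerate(self.servers) if i != index]
        for server in survivors:
            for key, part in server.items():
                if sum(key in s for s in survivors) < 2:
                    self.servers[index][key] = part
```

Notice that nothing outside the servers records where the parts are. Everything needed to read or repair a file is recovered by looking at the names of the parts themselves, which is the spirit of doing without a metadata manager.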

GlusterFS is a new file system, which means it is still maturing. But its developers and designers have thought outside the box to come up with a very interesting and very, very promising file system. It is very flexible and has some ingenious ideas about how to make a high performance file system while still keeping resiliency in the file system.

EMC Highroad (MPFSi)